Hi Francois,

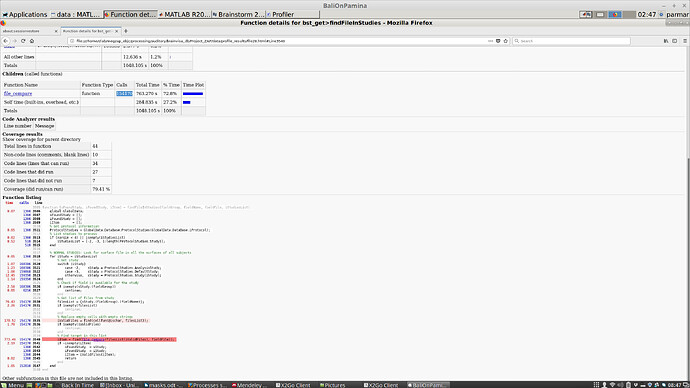

Thanks for the advice. Memory doesn't seem to be the problem, and the process appears to run on only one core at a time. With the profiler on, I ran a threshold-based artifact rejection and averaging step on a single condition of 450 short epochs (350 ms each), and it took about 20 minutes. The bottleneck appears to be line 3540 in the function `findFileInStudies`, which is:

```matlab
% Find target in this list
iItem = find(file_compare(filesList(iValidFiles), fieldFile));
```

I believe that each time it looks for a particular file, it compares the target against every file in my database (I have many!). The profiler shows 154,170 calls to this function (see screenshot) just to process the 450 epochs, so the total time grows with the number of calls multiplied by the number of files in the database.

A more knowledgeable friend asked whether this might be a good case for a hash table: each lookup would then take roughly constant time instead of growing with the number of files, so performance would hold up much better as the database grows.
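To check that I understand the suggestion, here is a minimal sketch using MATLAB's built-in `containers.Map`. The file paths are made up, and I'm assuming the real comparison treats `/` and `\` as equivalent, so the keys are normalized first; `normalizePath`, `keysList` and `fileIndex` are hypothetical names, not Brainstorm functions.

```matlab
% Hypothetical relative file paths standing in for the database contents
filesList = {'Subject01/cond1/data_trial001.mat', ...
             'Subject01/cond1/data_trial002.mat', ...
             'Subject01/cond2/data_trial001.mat'};
target = 'Subject01\cond2\data_trial001.mat';

% Assumption: normalize path separators so '/' and '\' compare equal
normalizePath = @(p) strrep(p, '\', '/');
keysList = cellfun(normalizePath, filesList, 'UniformOutput', false);
targetKey = normalizePath(target);

% Linear scan (roughly what the profiled line does): O(N) work per lookup
iItem = find(strcmp(keysList, targetKey));

% Hash table: built once in O(N), then each lookup is O(1) on average
fileIndex = containers.Map(keysList, num2cell(1:numel(keysList)));
if isKey(fileIndex, targetKey)
    iItemHash = fileIndex(targetKey);
else
    iItemHash = [];
end
```

Both approaches return index 3 here; the difference only shows up when the list has many thousands of entries and the lookup is repeated 150,000 times. Presumably the harder part in Brainstorm would be keeping such an index up to date whenever files are added, moved, or deleted.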

Is this a modification you might consider in the near future? If that's not feasible, what would you suggest for processing this dataset? Maybe a custom function that reads the files directly, computes max(abs(...)) across all channels for each epoch, marks epochs above a threshold, averages the rest, and then reattaches the resulting file to the database? Or should I split everything into lots of mini databases with fewer files each? (That seems like a pain.)
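For the custom-function option, the core computation would be something like the sketch below. It assumes the epochs are already loaded into a single channels × time × epochs array; the data, threshold and variable names are all hypothetical, and reading the files and reattaching the result to the database are not shown. Taking the maximum of the absolute value (rather than the absolute value of the maximum) also catches large negative deflections.

```matlab
% Hypothetical data: nChannels x nTimes x nEpochs (stand-in for loaded files)
rng(0);
nChannels = 4; nTimes = 10; nEpochs = 6;
epochs = randn(nChannels, nTimes, nEpochs);
epochs(2, 5, 3) = 50;    % inject a positive artifact into epoch 3
epochs(1, 7, 5) = -80;   % inject a negative artifact into epoch 5

threshold = 10;  % hypothetical rejection threshold, same units as the data

% Peak absolute amplitude of each epoch over all channels and time points
peakAbs = max(reshape(abs(epochs), [], nEpochs), [], 1);  % 1 x nEpochs

% Mark epochs above threshold and average the remaining ones
isBad = peakAbs > threshold;
avgData = mean(epochs(:, :, ~isBad), 3);  % nChannels x nTimes
```

Because it works on one in-memory array, this step involves no file lookups at all, which is the point of doing it outside the database.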

Emily