So I am trying to understand concatenate a little better. I tried to calculate coherence for multiple epochs as single files and averaged all files and then I tried the same with concatenate.

I thought I understood how both functions work, but I was very surprised when I looked at the results and saw that the differences between the two result files were extremely large. Can someone explain to me why calculating the coherence using concatenate instead of average leads to such varying results and why concatenate is probably the preferred option?

Also a side question that might be related: when I calculate my coherence using concatenate with 50 epochs using the "process 2" method, the automatic filename in Brainstorm is "icohere(2.0Hz, 179win): Pinch (#1)". I feel very confident that all 50 epochs are on there but naming makes me a little nervous.

First of all, make sure you update Brainstorm and use the updated coherence process (with option imaginary coherence 2019).

> Can someone explain to me why calculating the coherence using concatenate instead of average leads to such varying results and why concatenate is probably the preferred option?

Ideally, the coherence should be evaluated only from long continuous signals.

Many people are trying to estimate connectivity from short epochs around an event of interest.

If these epochs are long enough, it is better to estimate the coherence on each segment individually and average the coherence values. However, in the cases where the epochs are too short for evaluating the coherence at the frequencies of interest, this is not possible.

The concatenation option proposes a patch for this case: all the short epochs are concatenated together to create a long signal (which contains a lot of discontinuities, inducing a lot of noise in the cross-spectra), which is then split into windows longer than the individual epochs (hence each window has at least one discontinuity between two epochs) to estimate the coherence.
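To make the discontinuity point concrete, here is a minimal sketch in plain Python. It is not Brainstorm code: the sampling rate, the 10 Hz sine and the epoch length are all hypothetical values chosen for illustration. It compares the largest sample-to-sample step inside an epoch with the step at each boundary after concatenation.

```python
import math

def make_epoch(freq_hz, sfreq, n_samples):
    """One epoch of a sine wave; every epoch starts at phase 0 (as if time-locked to an event)."""
    return [math.sin(2 * math.pi * freq_hz * n / sfreq) for n in range(n_samples)]

# Hypothetical values, for illustration only
sfreq = 1000.0     # sampling rate in Hz
freq_hz = 10.0     # frequency of the oscillation in Hz
n_samples = 275    # epoch length: 2.75 periods, so epochs do not end where they start

epochs = [make_epoch(freq_hz, sfreq, n_samples) for _ in range(5)]
concatenated = [x for epoch in epochs for x in epoch]

# Largest step between consecutive samples inside a single epoch
max_step_inside = max(abs(b - a) for a, b in zip(epochs[0], epochs[0][1:]))

# Step across each boundary between two concatenated epochs
boundary_steps = [
    abs(concatenated[k * n_samples] - concatenated[k * n_samples - 1])
    for k in range(1, len(epochs))
]

print("max step inside an epoch:", max_step_inside)
print("steps at epoch boundaries:", boundary_steps)
```

With these values the jump at each boundary is much larger than any step inside an epoch. Jumps like this spread energy over many frequencies, and that energy ends up in the cross-spectra of every window that spans a boundary.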

The results might be quite different, indeed.

@Sylvain @hossein27en @John_Mosher Do you have any recommendation to add?

> I feel very confident that all 50 epochs are on there but naming makes me a little nervous.

Only the comment of the first file is reported in the final file; you can ignore the (#1) if this was the problem.

You should rely on the number of windows: 179 windows with a 50% overlap should match the duration of all the 50 trials concatenated.
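A quick way to do this check is sketched below. It assumes that the window length is the inverse of the frequency resolution (0.5 s for 2.0 Hz) and that windows overlap by exactly 50%; Brainstorm may round the window length differently, so treat this as an approximation. The sampling rate and epoch length are hypothetical values picked so that the count lands on 179, since neither is given in this thread; replace them with your own.

```python
def count_windows(n_samples, win_samples, overlap=0.5):
    """Number of full windows that fit in n_samples with the given fractional overlap."""
    step = int(round(win_samples * (1 - overlap)))
    if n_samples < win_samples:
        return 0
    return (n_samples - win_samples) // step + 1

# Hypothetical recording parameters (not taken from the thread)
sfreq = 1000           # sampling rate in Hz
epoch_samples = 900    # samples per epoch (0.9 s at 1000 Hz)

# Values reported in the file name "icohere(2.0Hz, 179win)"
freq_res_hz = 2.0
n_epochs = 50

# Assumption: window length = 1 / frequency resolution
win_samples = int(round(sfreq / freq_res_hz))

total_samples = n_epochs * epoch_samples
n_windows = count_windows(total_samples, win_samples)
print(n_windows)
```

If the count you get with your own sampling rate and epoch length is close to the number in the file name, all the trials were included in the concatenated signal.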

Thank you Francois!!!!! I understand

Hello Francois,

Sorry, after running the data we are still a little confused.

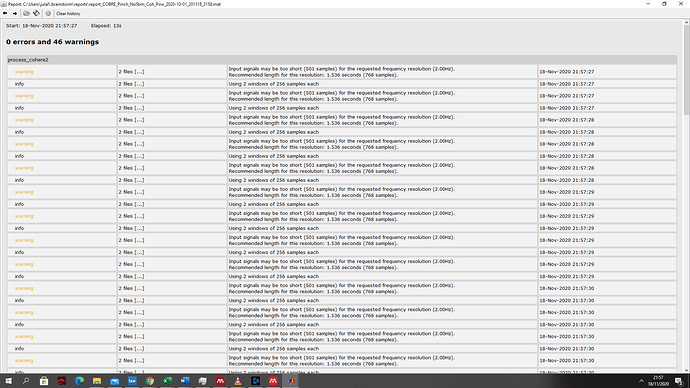

So when I was running the data as average I got the following warnings. Based on your comment on the time windows, I assumed that concatenate should be the appropriate choice.

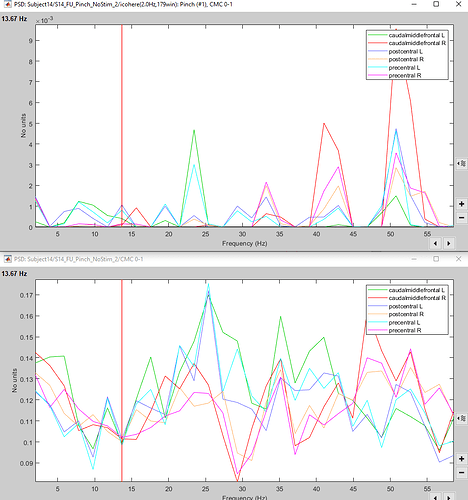

But comparing the two results, it feels like the averaged data look a little more complete. The next figure presents first the results for concatenate, then for average.

What is concerning for concatenate is not only the number of 0 values but also the considerable differences in amplitude. Most values are around 0.001, while for average they are around 0.1.

I know that source coherence might be different from channel coherence, but from channel coherence data I would expect results to be more in the range of 0.1, which is similar to the results we got for averaging. This also makes me concerned that maybe averaging would have been the better solution.

The question is pretty simple: based on this more detailed view of the data and the warnings, would averaging or concatenate be more appropriate?

Thanks!

I can easily reproduce the reports you're providing here (concatenate vs average). Unfortunately, estimating coherence from short trials is complicated:

- concatenated: signal full of discontinuities, and therefore not adapted for this kind of analysis

- averaged: averaging many poorly-estimated coherence values
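The second point can be illustrated with a small sketch in plain Python. It is not the Brainstorm implementation: it uses magnitude-squared coherence (not imaginary coherence), non-overlapping rectangular windows, a single DFT bin, and two independent white-noise signals, all of which are assumptions made for the illustration. With very few windows per epoch the estimate is strongly biased upwards (with a single window it is exactly 1), and averaging across epochs does not remove that bias.

```python
import cmath
import random

def dft_bin(x, k):
    """DFT coefficient of x at bin k."""
    n = len(x)
    return sum(v * cmath.exp(-2j * cmath.pi * k * i / n) for i, v in enumerate(x))

def msc(x, y, win, k):
    """Magnitude-squared coherence at bin k, spectra averaged over non-overlapping windows."""
    sxx = syy = 0.0
    sxy = 0j
    for start in range(0, len(x) - win + 1, win):
        fx = dft_bin(x[start:start + win], k)
        fy = dft_bin(y[start:start + win], k)
        sxx += abs(fx) ** 2
        syy += abs(fy) ** 2
        sxy += fx * fy.conjugate()
    return abs(sxy) ** 2 / (sxx * syy)

# Hypothetical setup: 50 epochs of 64 samples, 32-sample windows (2 windows per epoch)
rng = random.Random(0)
n_epochs, epoch_len, win, k = 50, 64, 32, 4
x_epochs = [[rng.gauss(0, 1) for _ in range(epoch_len)] for _ in range(n_epochs)]
y_epochs = [[rng.gauss(0, 1) for _ in range(epoch_len)] for _ in range(n_epochs)]

# One window only: the estimate is 1 whatever the signals are
msc_single = msc(x_epochs[0][:win], y_epochs[0][:win], win, k)

# "Average" approach: one estimate per epoch (2 windows each), then the mean
msc_averaged = sum(msc(xe, ye, win, k) for xe, ye in zip(x_epochs, y_epochs)) / n_epochs

# All 100 windows pooled in a single estimate (windows never straddle two epochs here)
x_all = [v for e in x_epochs for v in e]
y_all = [v for e in y_epochs for v in e]
msc_pooled = msc(x_all, y_all, win, k)

print(msc_single, msc_averaged, msc_pooled)
```

The two signals are independent, so the true coherence is 0; the pooled estimate comes out small, while the averaged per-epoch estimate stays large. This sketch deliberately leaves out the discontinuity problem of the concatenated case, because the windows here are aligned with the epochs.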

@eflorin @Sylvain @hossein27en @leahy @pantazis @Marc.Lalancette

Has this concatenation approach ever been published anywhere?

This seems to give very low imaginary coherence values, and very different from the averaging approach. Do we have any formal proof that this is to be recommended?

Previous posts discussed this topic, with no clear references either:

While testing this, I found some issues in the icohere2019 computation:

Re: the concatenation, the response from @eflorin:

I had suggested the concatenation, but with longer trials in mind or continuous recordings where one needs to remove bad segments. In this case it works. But for short trials it probably does not. I believe in fieldtrip this issue is dealt with multitapers – maybe this is an option to consider?

@oschranch Coherence on short epochs is probably not a good idea. This metric is adapted to studying long segments of continuous recordings, where the brain activity of interest is sustained for at least a few seconds.

@hossein27en This should be documented in the future Connectivity tutorial:

https://neuroimage.usc.edu/brainstorm/Tutorials/Connectivity

The concatenate feature was implemented in the previous functions.

The new Amplitude Envelope function does not have this feature. I also believe it should not be used, because of the problems we previously discussed, such as introducing new frequencies.

I believe the "icohere2019" is working properly at this time after we dropped the p-value thing.