Hello everyone,

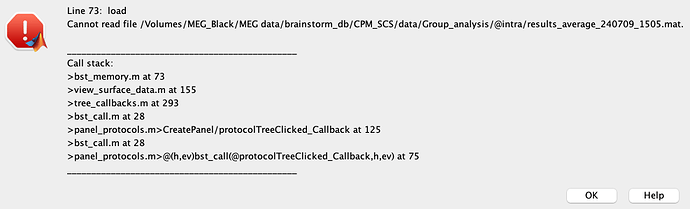

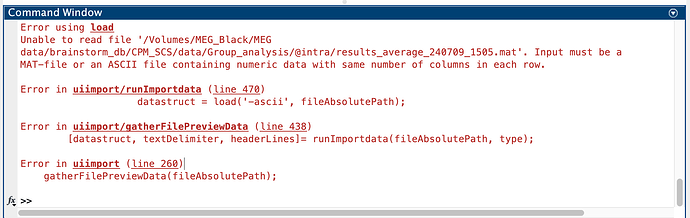

I want to average source maps across subjects (MEG data). However, the averaged file that results causes problems: when I try to open it, I receive the message 'Cannot read file'.

Also, when I try to open the .mat file via Finder, I get a load error:

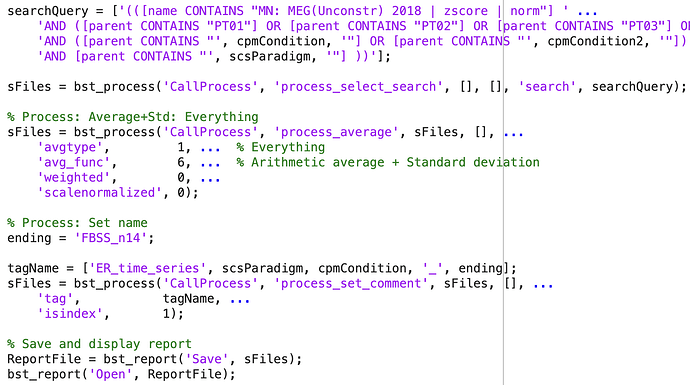

I have tried averaging the source maps using a pipeline (see code below), and I have also tried dragging the files to the files-to-process box and then selecting the average process.

I am using MATLAB R2022a and updated Brainstorm today. I am working on a MacBook. Restarting Brainstorm and/or MATLAB does not resolve the issue. In the past, I have successfully averaged source maps using the same method. I can still open older averaged source maps, and I can also open the individual files that I want to average. Another Brainstorm process that writes a file, extracting scout time series from a source map, works fine and produces a file that I can open without any issues.

I hope you can help me out with this issue. If you need any additional information, let me know.

Kind regards,

Laurien

It seems to be an issue with a corrupted file.

Could you share the problematic file so we can investigate further?

This is the file I get when averaging the source maps (mean + standard deviation). The file is very large (4.87 GB), so I uploaded it via pCloud Transfer:

https://transfer.pcloud.com/download.html?code=5ZVoIT0ZHGxg1DVsBrFZifyk7ZlCWxUCiAVUVh5JmmM9AIdFFrbowy

Indeed, the shared file is corrupted.

Did you notice any error when the file was created?

Is there enough space on the disk to write such a big file?

Have you had this issue with other large (>2GB) files?

No, I didn't notice any error. The only warning I got from Brainstorm is that it is not possible to create a common channel file for the average, but I have gotten this warning before and it did not result in corrupted files then.

There should be enough space on the external disk I use (0.5 TB free). I also never had any issues creating files this large before.

I just tried to compute an average of source maps in a protocol stored on another external disk, and this also results in a corrupted file that I cannot load.

Could it be that the file system of your external disk is FAT32 (which has a file size limit of 4 GB)?

Could you try to compute the average without the standard deviation, to check whether the error is due to the file size?
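A quick way to rule out the two hypotheses raised above (the FAT32 per-file limit and a lack of free disk space) is to check the output file's size against that limit and the free space on the volume that holds it. The sketch below is a generic Python script, not part of Brainstorm or MATLAB, and the function names `exceeds_fat32_limit` and `check_output_file` are hypothetical. It assumes FAT32's maximum file size of 4 GiB minus 1 byte; exFAT does not have this practical limit. It can only rule out size and space causes; it cannot detect other kinds of file corruption.

```python
import os
import shutil
import sys

# FAT32 stores each file's size in a 32-bit field, so a single file can be
# at most 4 GiB - 1 byte. exFAT does not have this practical limit.
FAT32_MAX_FILE_BYTES = 2**32 - 1


def exceeds_fat32_limit(size_bytes: int) -> bool:
    """Return True if a file of `size_bytes` could not be stored on FAT32."""
    return size_bytes > FAT32_MAX_FILE_BYTES


def check_output_file(path: str) -> dict:
    """Report the file size, the free space on the volume holding it, and
    whether the file would exceed the FAT32 per-file size limit."""
    size = os.path.getsize(path)
    folder = os.path.dirname(os.path.abspath(path))
    free = shutil.disk_usage(folder).free
    return {
        "size_gb": size / 1e9,
        "free_gb": free / 1e9,
        "exceeds_fat32_limit": exceeds_fat32_limit(size),
    }


if __name__ == "__main__":
    # Hypothetical usage: python check_output_file.py /path/to/averaged_file.mat
    for p in sys.argv[1:]:
        print(p, check_output_file(p))
```

Run it with the path of the averaged .mat file as an argument. A 4.87 GB file would exceed the FAT32 limit, so on a FAT32 disk the write could fail or be truncated; on exFAT the size alone should not be a problem.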

The file system of the disk is exFAT. When I compute the average without the standard deviation, I get the same error (the file size is 2.56 GB).

In the past, I have successfully computed similar average + standard deviation files larger than 4.8 GB.

I also tried updating MATLAB to see whether that resolves the issue; I now use MATLAB R2024a, but I still get the same error.

In the shared pipeline, can you skip the 'Set name' step and check whether you can read the average before it is renamed?

That doesn't solve the problem either, unfortunately.

An update on this issue: I am able to run the pipeline on a Windows PC, but I am still not able to run the same pipeline on my MacBook. So the problem seems to be related to macOS or to my specific MacBook.

In any case, the issue is resolved for me for now. Thank you, Raymundo, for thinking along.