Granger causality

Authors: Hossein Shahabi and Raymundo Cassani

This tutorial extends the information provided in the connectivity tutorial regarding the formulation of time- and frequency-domain Granger causality. Moreover, a numeric example based on simulated signals is provided to verify the results obtained with GC in the time and frequency domains.

Mathematical background

Wiener-Granger causality, better known as Granger causality (GC), is a method of functional connectivity. It was conceptually developed by Norbert Wiener in 1956, implemented in terms of linear autoregressive modelling by Clive Granger in the 1960s (Granger, 1969), and later refined by John Geweke into the form that is used today (Geweke, 1984). Granger causality was originally formulated in economics, but has caught the attention of the neuroscience community in recent years. Before this, neuroscience traditionally relied on lesions and on applying stimuli to one part of the nervous system to study its effect on another part. Granger causality made it possible to estimate the statistical influence without requiring direct intervention (Bressler and Seth, 2011).

By definition, Granger causality is a measure of linear dependence, which tests whether the variance of error for a linear autoregressive model estimation (AR model) of a signal $x(t)$ can be reduced when adding a linear model estimation of a second signal $y(t)$. If this is true, signal $y(t)$ has a Granger causal effect on the first signal $x(t)$, i.e., independent information of the past of $y(t)$ improves the prediction of $x(t)$ above and beyond the information contained in the past of $x(t)$ alone. The term independent is emphasized because it creates some interesting properties for GC, such as that it is invariant under rescaling of the signals, as well as the addition of a multiple of $x(t)$ to $y(t)$. The measure of Granger causality is nonnegative, and zero when there is no Granger causality (Geweke, 1984).

The main advantage of Granger causality is that it is a directed measure, in that it can dissociate between $x \rightarrow y$ versus $y \rightarrow x$. It is important to note, however, that though the directionality of Granger causality is a step closer towards measuring effective connectivity compared to non-directed measures, it should still not be confused with “true causality”. Effective connectivity estimates the effective mechanism generating the observed data (model-based approach), whereas GC is a measure of causal effect based on prediction, i.e., how well the model is improved when taking variables into account that are interacting (data-driven approach) (Barrett and Barnett, 2013).

The difference with causality is best illustrated when there are more variables interacting in a system than those taken into account in the model. For example:

A variable $z$ is causing both $x$ and $y$, but with a smaller delay for $x$ than for $y$.

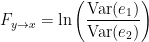

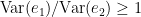

In its simplest (unconditional) form, the Granger causality measure between $x$ and $y$ would show a non-zero GC for $x \rightarrow y$, even though $x$ is not truly causing $y$ (Bressler and Seth, 2011).

Spurious causalities like the one in the example above may be removed by using the conditional GC approach, in which the common dependencies (available in the data) are conditioned out. So, the conditional GC measure between $x$ and $y$ considering $z$ is written as $x \rightarrow y \mid z$ (where '|' means 'conditioned on'), and it would be close to zero (Barnett and Seth, 2014).


GC in time domain

Even though GC has been extended to nonlinear, multivariate and time-varying conditions, in this tutorial we will stick to the basic case, which is a linear and stationary model defined in both the time and spectral domains. Let us consider the same example as above:

A variable $z$ is causing both $x$ and $y$, but with a smaller delay for $x$ than for $y$.

Unconditional GC

To compute the unconditional GC from $y$ to $x$, the signal $x$ can be modelled using a linear AR model in the following two ways:

\begin{eqnarray*}
x(t) & = & \sum_{k=1}^{p} [A_{k}x(t-k)] +e_1 \\
x(t) & = & \sum_{k=1}^{p} [A_{k}x(t-k)+B_{k}y(t-k)] +e_2
\end{eqnarray*}

Where $p$ is the model order, which represents the amount of past information that will be included in the prediction of the future sample. In these two equations, the first model for $x$ uses only its own past, whereas the second also includes the past of a second signal $y$. Note that because only past values of the signals are taken into account ($k \geq 1$), the model ignores simultaneous connectivity, which makes it less susceptible to volume conduction (Cohen, 2014). Then, according to the original formulation of GC, the measure of GC from $y$ to $x$ is defined as:

$$F_{y \rightarrow x} = \ln \left( \frac{\mathrm{Var}(e_1)}{\mathrm{Var}(e_2)} \right)$$

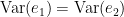

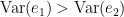

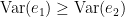

This measure is 0 for $\mathrm{Var}(e_1) = \mathrm{Var}(e_2)$ and a positive value for $\mathrm{Var}(e_1) > \mathrm{Var}(e_2)$. Note that $\mathrm{Var}(e_1) \geq \mathrm{Var}(e_2)$ always holds, as the model can only improve when adding new information.

Conditional GC

Analogous to the unconditional case, the signal $x$ can be modelled using a linear AR model in the following two ways:

\begin{eqnarray*}
x(t) & = & \sum_{k=1}^{p} [A_{k}x(t-k)+C_{k}z(t-k)] +e_1 \\
x(t) & = & \sum_{k=1}^{p} [A_{k}x(t-k)+B_{k}y(t-k)+C_{k}z(t-k)] +e_2
\end{eqnarray*}

In this case, the signal $z$ is also considered in the model, as a way to reduce spurious causalities. Once again, the relation $\mathrm{Var}(e_1) \geq \mathrm{Var}(e_2)$ always holds, as the model can only improve when adding new information. Then, the conditional GC from $y$ to $x$ considering $z$ is defined as:

$$F_{y \rightarrow x \mid z} = \ln \left( \frac{\mathrm{Var}(e_1)}{\mathrm{Var}(e_2)} \right)$$

Note that if $z$ causes neither $x$ nor $y$, the unconditional and conditional GC measures are the same.
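The comparison of reduced and full AR models described above can be tried on a toy version of the three-variable example. The following Python sketch is only an illustration under stated assumptions: the function names (`residual_variance`, `granger`), the delays, the noise levels and the model order are all hypothetical choices, and the least-squares fit is a plain normal-equations solver rather than the estimator used by Brainstorm or the MVGC Toolbox.

```python
import math
import random


def residual_variance(target, regressors):
    """Fit target ~ regressors by ordinary least squares; return the residual variance."""
    k = len(regressors)
    # Normal equations G * coef = r, stored as an augmented matrix [G | r].
    aug = [
        [sum(a * b for a, b in zip(regressors[i], regressors[j])) for j in range(k)]
        + [sum(a * b for a, b in zip(regressors[i], target))]
        for i in range(k)
    ]
    # Gaussian elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda row: abs(aug[row][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, k):
            factor = aug[row][col] / aug[col][col]
            for c in range(col, k + 1):
                aug[row][c] -= factor * aug[col][c]
    # Back substitution.
    coef = [0.0] * k
    for i in reversed(range(k)):
        tail = sum(aug[i][j] * coef[j] for j in range(i + 1, k))
        coef[i] = (aug[i][k] - tail) / aug[i][i]
    residuals = [
        value - sum(coef[i] * regressors[i][s] for i in range(k))
        for s, value in enumerate(target)
    ]
    return sum(e * e for e in residuals) / len(residuals)


def granger(target, source, order, cond=None):
    """Time-domain GC from source to target, optionally conditioned on cond."""
    n = len(target)

    def lags(signal):
        # One regressor per lag k = 1..order, aligned with target[order:].
        return [signal[order - k:n - k] for k in range(1, order + 1)]

    reduced = lags(target) + (lags(cond) if cond is not None else [])
    full = reduced + lags(source)
    present = target[order:]
    return math.log(residual_variance(present, reduced) / residual_variance(present, full))


# Toy system (assumed values): z drives x after 1 sample and y after 2 samples.
# x never drives y directly.
random.seed(0)
n_samples = 4000
z = [random.gauss(0, 1) for _ in range(n_samples)]
x = [z[t - 1] + random.gauss(0, 0.5) for t in range(2, n_samples)]
y = [z[t - 2] + random.gauss(0, 0.5) for t in range(2, n_samples)]
z = z[2:]  # keep the three signals aligned in time

gc_unconditional = granger(y, x, order=2)        # x -> y, spurious
gc_conditional = granger(y, x, order=2, cond=z)  # x -> y | z
print(f"x -> y     : {gc_unconditional:.3f}")
print(f"x -> y | z : {gc_conditional:.3f}")
```

In this sketch the unconditional estimate should be clearly positive, because the past of x carries a noisy copy of the z sample that generates y, whereas the conditional estimate should stay close to zero once the past of z is part of both models.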

GC in frequency domain

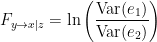

An important improvement by Geweke was to show that, under fairly general conditions, $F_{y \rightarrow x}$ can be decomposed by frequency.

Unconditional spectral GC

If the two AR models in time domain are specified as:

\begin{eqnarray*}
x(t) &=& \sum_{k=1}^{p} [A_{k_{xx}}x(t-k) + A_{k_{xy}}y(t-k)] +\sigma_{xy} \\
y(t) &=& \sum_{k=1}^{p} [A_{k_{yy}}y(t-k) + A_{k_{yx}}x(t-k)] +\sigma_{yx}
\end{eqnarray*}

In each equation, the reduced model is obtained when each signal is modelled as an AR process of only its own past, with error terms $\sigma_{xx}$ and $\sigma_{yy}$. Then we can define the variance-covariance matrix of the whole system as:

\begin{eqnarray*}
\Sigma =
\left[\begin{array}{cc}
\Sigma_{xx} & \Sigma_{xy} \\
\Sigma_{yx} & \Sigma_{yy}
\end{array}\right]
\end{eqnarray*}

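where $\Sigma_{xx}$ is the variance of the error term of $x$, $\Sigma_{xy}$ is the covariance between the error terms of $x$ and $y$, etc. Applying the Fourier transform to these equations, they can be expressed as:

$$\left[\begin{array}{cc} A_{xx}(\omega) & A_{xy}(\omega) \\ A_{yx}(\omega) & A_{yy}(\omega) \end{array}\right] \left[\begin{array}{c} X(\omega) \\ Y(\omega) \end{array}\right] = \left[\begin{array}{c} E_{x}(\omega) \\ E_{y}(\omega) \end{array}\right]$$

where $A_{xx}(\omega) = 1 - \sum_{k=1}^{p} A_{k_{xx}} e^{-i \omega k}$ and $A_{xy}(\omega) = - \sum_{k=1}^{p} A_{k_{xy}} e^{-i \omega k}$ (and similarly for $A_{yy}$ and $A_{yx}$), and $E_{x}(\omega)$, $E_{y}(\omega)$ are the Fourier transforms of the error terms.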

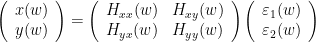

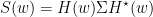

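Rewriting this as

$$\left[\begin{array}{c} X(\omega) \\ Y(\omega) \end{array}\right] = \left[\begin{array}{cc} H_{xx}(\omega) & H_{xy}(\omega) \\ H_{yx}(\omega) & H_{yy}(\omega) \end{array}\right] \left[\begin{array}{c} E_{x}(\omega) \\ E_{y}(\omega) \end{array}\right]$$

where $X(\omega)$, $Y(\omega)$ and $E_{x}(\omega)$, $E_{y}(\omega)$ are the Fourier transforms of the signals and of the error terms, and $H(\omega)$, the inverse of the Fourier-domain coefficient matrix, is the transfer matrix. The spectral matrix is then defined as:

$$S(\omega) = H(\omega) \, \Sigma \, H^{*}(\omega)$$

where $H^{*}(\omega)$ is the conjugate transpose of $H(\omega)$.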

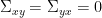

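Finally, assuming independence of the signals $x$ and $y$, and $\Sigma_{xy} = \Sigma_{yx} = 0$, we can define the spectral GC as:

$$F_{y \rightarrow x}(\omega) = \ln \left( \frac{S_{xx}(\omega)}{S_{xx}(\omega) - H_{xy}(\omega) \Sigma_{yy} H_{xy}^{*}(\omega)} \right)$$

where $S_{xx}(\omega)$ is the power spectrum of $x$ (the corresponding diagonal element of the spectral matrix) and $H_{xy}(\omega)$ is the element of the transfer matrix that links the error term of $y$ to $x$.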

Note that the ability to reformulate the equations in spectral domain also makes it very easy to generalize to a multivariate GC. That is why all multivariate measures of GC are defined in spectral domain. Alternative measures derived from these multivariate autoregressive estimates are Partial Directed Coherence (PDC) and Directed Transfer Function (DTF).
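As a rough numerical illustration of the spectral formulation, the sketch below evaluates the spectral GC from y to x for a bivariate AR model of order 1 whose coefficients are already known. It is a simplified example under stated assumptions: the function name `spectral_gc_y_to_x`, the coefficient values and the 100 Hz sampling rate are hypothetical, the noise covariance is assumed diagonal, and no model estimation is performed.

```python
import cmath
import math


def spectral_gc_y_to_x(A, sigma_xx, sigma_yy, freq_hz, fs_hz):
    """Spectral GC from y to x for a bivariate AR(1) model with uncorrelated noise.

    A = [[a_xx, a_xy], [a_yx, a_yy]] holds the lag-1 coefficients
    (row: receiving signal, column: sending signal).
    """
    omega = 2 * math.pi * freq_hz / fs_hz
    e = cmath.exp(-1j * omega)
    # Fourier-domain coefficient matrix: I - A * exp(-i * omega)
    a11, a12 = 1 - A[0][0] * e, -A[0][1] * e
    a21, a22 = -A[1][0] * e, 1 - A[1][1] * e
    det = a11 * a22 - a12 * a21
    # First row of the transfer matrix H = inverse of the coefficient matrix
    h_xx, h_xy = a22 / det, -a12 / det
    # With a diagonal noise covariance: S_xx = |H_xx|^2 Sigma_xx + |H_xy|^2 Sigma_yy
    intrinsic = abs(h_xx) ** 2 * sigma_xx
    causal = abs(h_xy) ** 2 * sigma_yy
    return math.log((intrinsic + causal) / intrinsic)


# Assumed toy model: y drives x (a_xy = 0.4), x does not drive y (a_yx = 0).
A = [[0.5, 0.4],
     [0.0, 0.5]]
fs = 100.0
for f in (0.0, 10.0, 25.0, 50.0):
    print(f"{f:5.1f} Hz: F(y -> x) = {spectral_gc_y_to_x(A, 1.0, 1.0, f, fs):.4f}")

# GC in the opposite direction: swap the roles of x and y in the coefficient matrix.
A_swapped = [[A[1][1], A[1][0]],
             [A[0][1], A[0][0]]]
gc_x_to_y = spectral_gc_y_to_x(A_swapped, 1.0, 1.0, 10.0, fs)
```

For this particular model the y to x measure is largest at low frequencies, and the x to y measure is zero at every frequency because the corresponding transfer-matrix element vanishes.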

Conditional spectral GC

The conditional case is less straightforward than the unconditional one. The interested reader is referred to (Barrett and Barnett, 2013) and (Barnett and Seth, 2014).

A few noteworthy practical issues about GC

Temporal resolution: the high time resolution offered by MEG, EEG, and intracranial EEG allows for a very powerful application of GC and also offers the important advantage of spectral analysis.

Stationarity: the GC methods described so far are all based on AR models, and therefore assume stationarity of the signal (constant auto-correlation over time). However neuroscience data, especially task based data such as event-related potentials (ERPs) are mostly non-stationary. There are two possible approaches to solve this problem. The first is to apply methods such as differencing, filtering, and smoothing to make the data stationary (see recommendation for time domain GC), although dynamical changes in the connectivity profile cannot be detected with this approach. The second approach is to turn to versions of GC that have been adapted for non-stationary data, either by using a non-parametric estimation of GC, or through measures of time-varying GC, which estimate dynamic parameters with adaptive or short-time window methods (Bressler and Seth, 2011).

Number of variables: Granger causality is very time-consuming in the multivariate case for many variables ($O(m^2)$, where $m$ represents the number of variables). Since each connection pair results in two values, there will also be a large number of statistical comparisons that need to be controlled for. When performing GC in the spectral domain, this number increases even more, as statistical tests have to be performed per frequency. Therefore it is usually recommended to select a limited number of ROIs or electrodes based on some hypothesis found in previous literature, or on some initial processing with a simpler and less computationally heavy measure of connectivity.

Pre-processing: The influence of pre-processing steps such as filtering and smoothing on GC estimates is a crucial issue. Studies have generally suggested limiting filtering to artifact removal or to improving the stationarity of the data, but cautioned against band-pass filtering to isolate causal influence within a specific frequency band (Bressler and Seth, 2011).

Volume Conduction: Granger causality can be performed both in the sensor domain or in the source domain. Though spectral domain GC generally does not incorporate present values of the signals in the model, it is still not immune from spurious connectivity measures due to volume conduction (for a discussion see (Steen et al., 2016)). Therefore it is recommended to reduce the problem of signal mixing using additional processing steps such as performing source localization, and performing connectivity analysis in source domain.

Data length: because of the number of parameters that need to be estimated, the number of data points should be sufficient for a good fit of the model. This is especially true for windowing approaches, where data is cut into smaller epochs. A rule of thumb is that the number of estimated parameters should be several times (~10) smaller than the number of data points.
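The data-length rule of thumb can be turned into a quick sanity check. The Python sketch below is only an approximation under explicit assumptions: it counts the AR coefficients of an m-variate model of order p as p·m², counts data points as signals × samples, and the helper name `ar_parameter_budget` is hypothetical.

```python
def ar_parameter_budget(n_signals, model_order, n_samples, min_ratio=10):
    """Return (n_parameters, n_data_points, ok) for a multivariate AR model.

    Assumptions: one coefficient per (to, from, lag) triplet, and one data
    point per signal and time sample.
    """
    n_parameters = model_order * n_signals ** 2
    n_data_points = n_signals * n_samples
    return n_parameters, n_data_points, n_data_points >= min_ratio * n_parameters


# Three signals, model order 3, 10 s at 100 Hz: comfortably within the rule of thumb.
print(ar_parameter_budget(3, 3, 1000))

# 64 channels, model order 10, a 500-sample window: far too many parameters.
print(ar_parameter_budget(64, 10, 500))
```

The second call shows why short windows combined with many channels quickly violate the rule: the parameter count grows with the square of the number of signals, while the data only grow linearly.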

In the next sections we present a hands-on example with simulated signals where Granger causality (in time and frequency domain) can be used. Since the GC method is based on AR modelling of signals, the simulated signals are generated with an AR model, thus providing a fair ground truth to validate the GC results.

Simulate signals

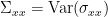

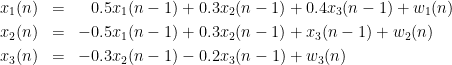

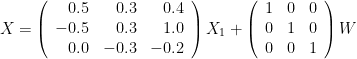

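In this example, we generate a dataset for a 3-variate AR model (example 2 in Baccalá and Sameshima, 2001). These observed time series are assumed to be generated by the equations:

\begin{eqnarray*}
x_1(t) & = & 0.5 x_1(t-1) + 0.3 x_2(t-1) + 0.4 x_3(t-1) + w_1(t) \\
x_2(t) & = & -0.5 x_1(t-1) + 0.3 x_2(t-1) + 1.0 x_3(t-1) + w_2(t) \\
x_3(t) & = & -0.3 x_2(t-1) - 0.2 x_3(t-1) + w_3(t)
\end{eqnarray*}

where $w_i(t)$ are zero-mean uncorrelated white processes with identical variances. Since the model order in this example is one, we can rewrite the equations in matrix form as such:

\begin{eqnarray*}
\left[\begin{array}{c} x_1(t) \\ x_2(t) \\ x_3(t) \end{array}\right] =
\left[\begin{array}{ccc}
0.5 & 0.3 & 0.4 \\
-0.5 & 0.3 & 1.0 \\
0.0 & -0.3 & -0.2
\end{array}\right]
\left[\begin{array}{c} x_1(t-1) \\ x_2(t-1) \\ x_3(t-1) \end{array}\right] +
\left[\begin{array}{c} w_1(t) \\ w_2(t) \\ w_3(t) \end{array}\right]
\end{eqnarray*}

To simulate this in Brainstorm, we use the following steps: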

Select the menu File > Create new protocol > "TutorialGC" and select the options:

- "Yes, use protocol's default anatomy"

- "No, use one channel file per acquisition run (MEG/EEG)".

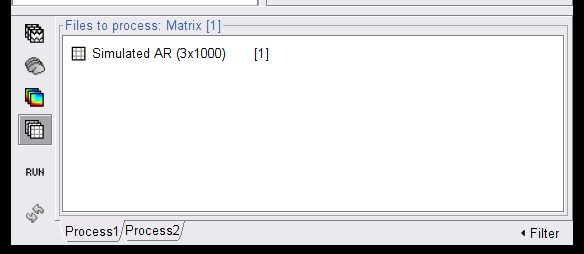

In the Process1 tab, leave the file list empty and click on the button [Run] (

) to open the Pipeline editor.

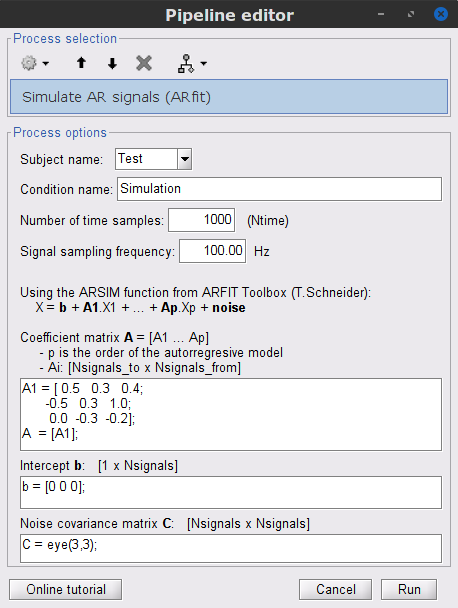

) to open the Pipeline editor. Select the process Simulate » Simulate AR signals (ARfit), and all the parameters as follows:

    % Coefficient matrix A:
    A1 = [ 0.5  0.3  0.4;
          -0.5  0.3  1.0;
           0.0 -0.3 -0.2];
    A = [A1];
    % Intercept b:
    b = [0 0 0];
    % Noise covariance matrix C:
    C = eye(3,3);

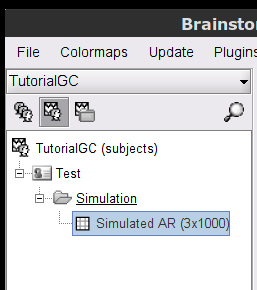

This corresponds to a 10-second simulation with a 100 Hz sampling rate. The process will give you a data file presented in Brainstorm as:

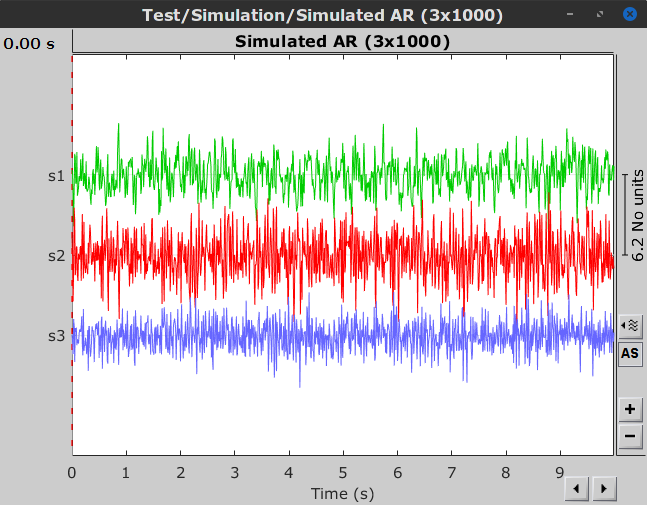

When displaying the data as time-series:

Process options

Subject name: Specify in which subject the simulated file is saved.

Condition name: Specify the folder in which the file is saved (default: "Simulation").

Number of time samples: number of data samples per simulation.

Signal sampling frequency: Sampling frequency of the simulated signal in Hz.

Coefficient matrix A: coefficient matrix as defined in the matrices in equations above. There are p (model order) number of A matrices, each the size [Nsignals_to, Nsignals_from]. For orders p>1, these coefficient matrices are then combined as: A = [A1, A2, ..., Ap];.

Intercept b: constant offset for each variable, as defined by a matrix with size [1, Nsignals].

Noise covariance matrix C: covariance matrix for the error (noise) term. Since we are modelling an uncorrelated white process with identical variance, this is equivalent to an identity matrix with size [Nsignals, Nsignals].
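To make the roles of the coefficient matrices, the intercept and the noise covariance more concrete, here is a minimal Python sketch of the same kind of simulation. It is not the ARfit implementation used by the Brainstorm process: the function `simulate_ar` is a hypothetical helper, the noise covariance is fixed to the identity matrix, and the start-up samples are simply initialized to zero.

```python
import random


def simulate_ar(lag_matrices, n_samples, intercept=None, seed=0):
    """Simulate a multivariate AR process with identity noise covariance.

    lag_matrices is the list [A1, A2, ..., Ap]; each Ak has size
    [Nsignals_to, Nsignals_from]. Returns n_samples rows of Nsignals values.
    """
    rng = random.Random(seed)
    order = len(lag_matrices)
    n_signals = len(lag_matrices[0])
    b = intercept if intercept is not None else [0.0] * n_signals
    # Start-up history: `order` samples of zeros.
    data = [[0.0] * n_signals for _ in range(order)]
    for _ in range(n_samples):
        sample = []
        for i in range(n_signals):
            value = b[i] + rng.gauss(0.0, 1.0)  # unit-variance white noise
            for k, A_k in enumerate(lag_matrices, start=1):
                past = data[-k]  # sample at time t - k
                value += sum(A_k[i][j] * past[j] for j in range(n_signals))
            sample.append(value)
        data.append(sample)
    return data[order:]


# Same coefficients as in the tutorial: model order 1, three signals.
A1 = [[0.5, 0.3, 0.4],
      [-0.5, 0.3, 1.0],
      [0.0, -0.3, -0.2]]

signals = simulate_ar([A1], n_samples=1000)  # 10 s at 100 Hz
print(len(signals), len(signals[0]))
```

Passing a longer list such as `[A1, A2]` would correspond to the combined matrix A = [A1, A2] for a model of order 2.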

Bivariate (temporal) Granger Causality NxN

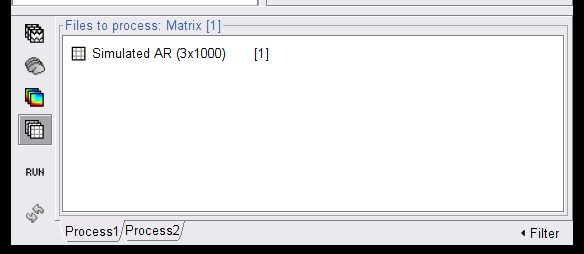

To compute Granger causality, place the simulated data in the Process1 tab.

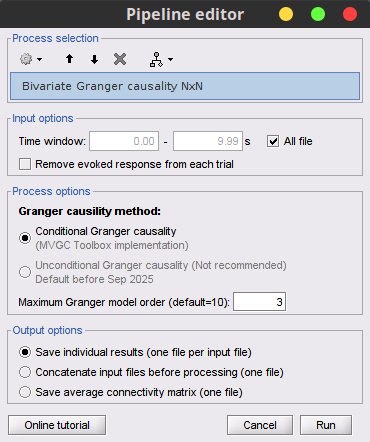

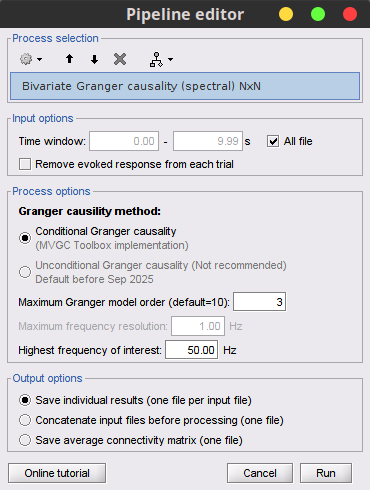

Click on [Run] to open the Pipeline editor, and select Connectivity » Bivariate Granger causality NxN.

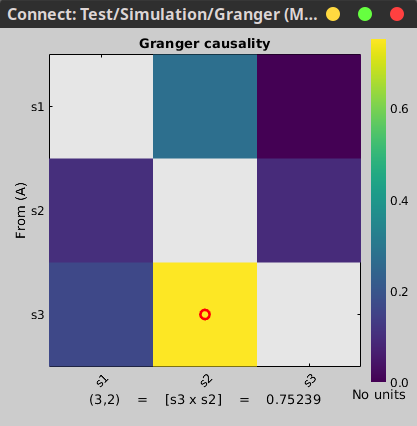

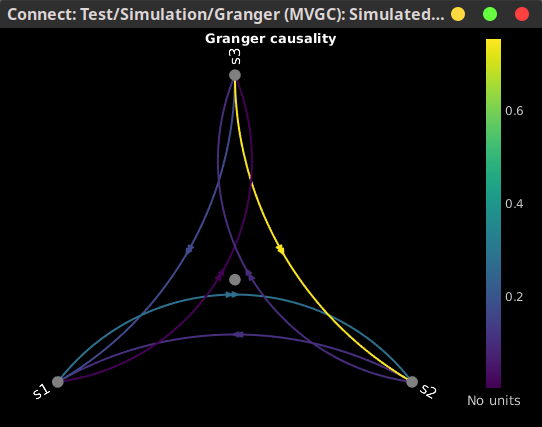

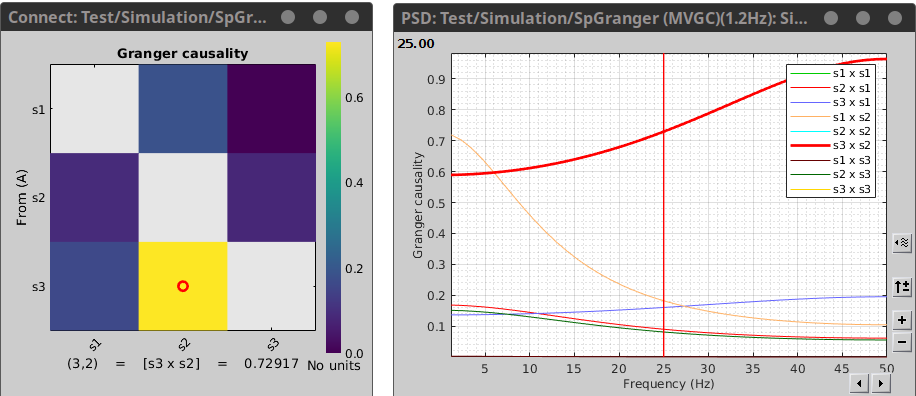

The computed connectivity profile is saved in the file Granger: full. Right-click on it, and select Display as image [NxN].

In this image, an asymmetrical matrix of GC estimates is shown, with non-negative values. This map shows the conditional GC for our multivariate system. As can be seen, there is a strong propagation of activity going from signal 3 to signal 2, as is the case in the originally described model. The next strongest connections are from signal 1 to signal 2, and from signal 3 to signal 1. Note that the connections from signal 2 to signal 1, and from signal 2 to signal 3, show similar values, as they have the same coefficient magnitude in the simulation model, though with opposite signs. Note that the relative strengths of connectivity in the results are preserved, but the value itself is based on the ratio of the residual errors of the restricted model and the full model, as described in the introduction, and should not be confused with the actual coefficient values.

You also have the option of showing the results as a graph: right-click on the Granger file, then select Display as graph [NxN]. See the connectivity graph tutorial for a detailed explanation of the options of this visualization.

Process options

Time window: Segment of the signal used for the connectivity analysis. Check All file.

Sensor types or names: Leave it empty.

Include bad channels: Check it.

Removing evoked response: Check this box to remove the averaged evoked response from each trial. This is recommended by some authors, as it helps meet the zero-mean stationarity requirement (it improves the stationarity of the system). However, the problem with this approach is that it does not account for trial-to-trial variability. For a discussion see (Wang et al., 2008).

GC method: Select if conditional or unconditional GC will be used.

Model order: Order of the model, see recommendations in the next section. While our simulated signals were created with a model order of 1, here we used a model order of 3 for a decent connectivity result.

Save individual results (one file per input file): option to save GC estimates on several files separately.

Concatenate input files before processing (one file): option to save GC estimates on several files as one concatenated matrix.

On the hard drive

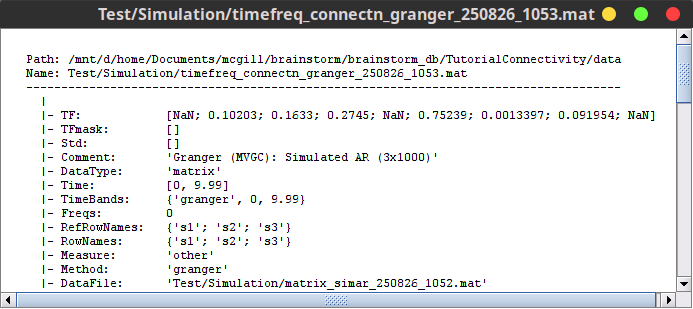

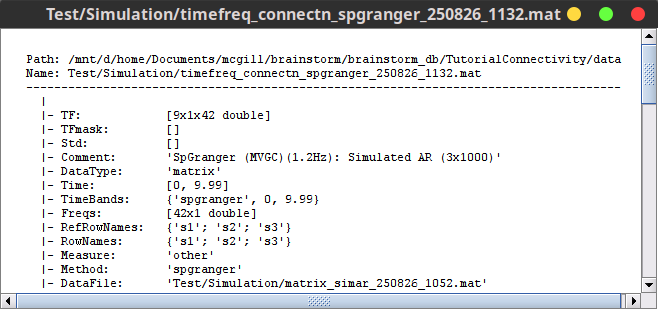

The output of the Bivariate Granger Causality NxN process is an NxN matrix saved as TF domain, and it represents the coupling strength between variables. You can see these contents by right-clicking on the Granger file, then selecting File » View file contents.

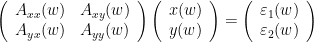

Recommendations

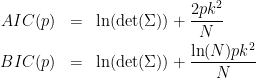

The model order used for GC is crucial: orders that are too low may lack the necessary detail, while orders that are too high tend to create spurious values of connectivity. The most common criteria used to define the order of the model are the Akaike information criterion (AIC), the Bayesian (Schwarz) information criterion (BIC), and the Hannan-Quinn criterion. As mentioned before, a model order should be selected with care. In electrophysiological data, a model order should also make some biological sense. For example, in EEG data with a 2 kHz sampling rate, an order of 5 would correspond to 2.5 ms of past information incorporated to train your model, which is probably too little past information to make a decent judgement. Note that taking an arbitrarily high model order is also not recommended, as it will increase the type I error. We will refrain from recommending a rule-of-thumb model order, but instead advise using information criteria such as AIC or BIC to compare model fits:

$$\mathrm{AIC}(p) = \ln |\Sigma| + \frac{2 p m^2}{N}, \qquad \mathrm{BIC}(p) = \ln |\Sigma| + \frac{\ln(N) \, p m^2}{N}$$

where $\Sigma$ is the noise covariance matrix, $p$ is the model order, $m$ is the number of variables, and $N$ is the number of data samples.
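The use of information criteria for order selection can be sketched on a single simulated signal. The example below is a simplified, univariate illustration (one variable, so the penalty term reduces to the model order): it assumes the common forms ln(variance) + 2p/N for AIC and ln(variance) + p·ln(N)/N for BIC, obtains the residual variance of each candidate order with the Levinson-Durbin recursion, and uses hypothetical function names and toy AR(2) coefficients. It is not the selection procedure implemented in the MVGC Toolbox.

```python
import math
import random


def prediction_error_variances(signal, max_order):
    """Levinson-Durbin recursion: residual variance of AR(p) fits, p = 1..max_order."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [v - mean for v in signal]
    # Biased autocovariance estimates r[0..max_order]
    r = [sum(centered[t] * centered[t - k] for t in range(k, n)) / n
         for k in range(max_order + 1)]
    coeffs, error, variances = [], r[0], []
    for p in range(1, max_order + 1):
        reflection = (r[p] - sum(coeffs[j] * r[p - 1 - j] for j in range(p - 1))) / error
        coeffs = [coeffs[j] - reflection * coeffs[p - 2 - j] for j in range(p - 1)]
        coeffs.append(reflection)
        error *= 1.0 - reflection ** 2
        variances.append(error)
    return variances


def information_criteria(signal, max_order):
    """Return a list of (order, AIC, BIC) tuples for a univariate AR model."""
    n = len(signal)
    table = []
    for p, var in enumerate(prediction_error_variances(signal, max_order), start=1):
        aic = math.log(var) + 2.0 * p / n
        bic = math.log(var) + math.log(n) * p / n
        table.append((p, aic, bic))
    return table


# Toy AR(2) signal (assumed coefficients): x(t) = 0.5 x(t-1) - 0.6 x(t-2) + noise
random.seed(1)
x = [0.0, 0.0]
for _ in range(5000):
    x.append(0.5 * x[-1] - 0.6 * x[-2] + random.gauss(0, 1))
x = x[2:]

table = information_criteria(x, max_order=6)
best_aic = min(table, key=lambda row: row[1])[0]
best_bic = min(table, key=lambda row: row[2])[0]
print("order selected by AIC:", best_aic)
print("order selected by BIC:", best_bic)
```

Because the BIC penalty per parameter is larger than the AIC penalty whenever ln(N) > 2, BIC never selects a higher order than AIC on the same data.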

In the case of conditional GC, computed with the MVGC Toolbox, the model order that is used is computed using AIC, which is in range between 1 and the maximum model order provided in the GUI.

On a similar note, the sampling rate of the data is also not an easy choice to make. A sampling rate that is too low will eliminate possible dynamics of the data, but if the sampling rate is too high the model will be computationally very expensive, as a very high model order will be required. For most MEG / EEG data, a sampling rate between 200 and 1000 Hz will probably suffice (Cohen, 2014).

As mentioned in the introduction, the current model is based on the assumption of stationarity. Therefore it is important to first check whether the time series we are using are stationary, with statistical tests such as the KPSS test or the Dickey-Fuller (unit root) test. If the data is shown to be non-stationary, certain techniques can still render it stationary (depending on the type of non-stationarity). A common approach is to difference the data to eliminate any trends in the time series (i.e., $x_t^{'}=x_{t}-x_{t-1}$). This can be done several times if necessary. A problem with this is that it could potentially change the interpretation of any resulting GC, specifically in the frequency domain, where differencing acts as a high-pass filter. Other commonly used methods are smoothing or filtering the data, but in the end, if there are causal relations that vary over time, these techniques will make it impossible to find them. An alternative approach is to divide the data into time windows that are (sufficiently) locally stationary, and apply GC per time window. This method makes it possible to study changes in connectivity over time, but it is of course not without its own problems, the most important of which is the choice of the time window. If the time window is too short, there will not be enough data points to make a decent estimation.
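The effect of differencing can be checked with a few lines of Python. This sketch uses two hypothetical helpers: `difference` applies the first-order difference one or more times, and `differencing_gain` evaluates the magnitude response |1 - exp(-iω)| of that operation, which illustrates why differencing behaves like a high-pass filter. The 100 Hz sampling rate is an assumed value.

```python
import cmath
import math


def difference(signal, times=1):
    """Apply x'(t) = x(t) - x(t-1) the requested number of times."""
    for _ in range(times):
        signal = [b - a for a, b in zip(signal, signal[1:])]
    return signal


def differencing_gain(freq_hz, fs_hz):
    """Magnitude response of first-order differencing at a given frequency."""
    omega = 2 * math.pi * freq_hz / fs_hz
    return abs(1 - cmath.exp(-1j * omega))


# A linear trend becomes a constant after one difference.
trend = [2 * t + 1 for t in range(6)]
print(difference(trend))

# Gain grows from 0 at DC to 2 at the Nyquist frequency (fs = 100 Hz here).
for f in (0, 1, 10, 25, 50):
    print(f"{f:3d} Hz: gain = {differencing_gain(f, 100):.3f}")
```

Slow components are attenuated strongly while components near the Nyquist frequency are amplified, so spectral GC computed on differenced data should be interpreted with this reweighting in mind.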

Bivariate spectral Granger causality NxN

To estimate GC in the frequency domain, drag the simulated signals to the Process1 tab.

Click on [Run] to open the Pipeline editor, and select Connectivity » Bivariate Granger causality (spectral) NxN.

As with the temporal GC, right-click on the SpGranger file, and select Display as image [NxN]. Also, to view the spectral profile for each bivariate connection pair, right-click on the SpGranger file, then select Power Spectrum.

Where the first variable in the legend refers to the sender and the second to the receiver of information. As we can see from the figure the large coupling strength from signal 2 to signal 3 is highest in higher frequency ranges. You can select your desired connectivity pair, and then press enter to view it in a separate window.

Process options

With respect to (temporal) GC, spectral GC presents two extra parameters:

Maximum frequency resolution: Width of frequency bins in PSD estimation. A large number of steps (high resolution) will increase the number of statistical comparisons. Note that the frequency resolution has to be less than half the highest frequency of interest. In the case of conditional GC, computed with the MVGC Toolbox, the frequency resolution is automatically defined.

Highest frequency of interest: Highest frequency for the analysis. Based on the Nyquist theorem, it should be <= Fs/2.

On the hard drive

The output of the Bivariate Granger causality (spectral) NxN process is an NxN matrix saved as TF domain, and it represents the coupling strength between variables. In contrast to the temporal GC, this time the NxN matrix is frequency-resolved. You can see these contents by right-clicking on the SpGranger file, then selecting File » View file contents.

Additional documentation

Articles

Granger CW. Investigating causal relations by econometric models and cross-spectral methods. Econometrica: journal of the Econometric Society. 1969;424–38.

Geweke JF. Measures of conditional linear dependence and feedback between time series. Journal of the American Statistical Association. 1984;79(388):907–15.

Bressler SL, Seth AK. Wiener–Granger causality: a well established methodology. Neuroimage. 2011 Sep 15;58(2):323-9.

Baccalá LA, Sameshima K. Partial directed coherence: a new concept in neural structure determination. Biol Cybern. 2001 May 11;84(6):463–74.

Barnett L, Seth AK. The MVGC multivariate Granger causality toolbox: A new approach to Granger-causal inference. Journal of Neuroscience Methods. 2014.

Forum discussions

Connectivity matrix storage: http://neuroimage.usc.edu/forums/showthread.php?1796