Hi

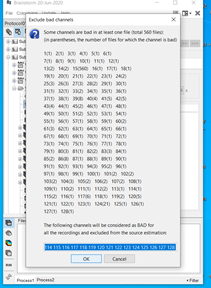

I ran the script below to compute sources for my subjects. After a few subjects, the process stops because one subject has no good channels. Some of the subjects listed in the report also have numerous bad channels. I got the message below in the MATLAB command window. I have not marked any channels as bad myself; I relied solely on ICA/SSP and removed channels exceeding ±100 µV to deal with artifacts. I noticed on the forum that someone else had this problem because they had not calculated a noise covariance matrix, but I have already calculated both the noise and data covariance matrices. Can you advise what I should do?

% Script generated by Brainstorm (20-Jun-2020)

% Input files

sFiles = [];

% Start a new report

bst_report('Start', sFiles);

% Process: Select files using search query

sFiles = bst_process('CallProcess', 'process_select_search', [], [], ...

'search', '(([path CONTAINS "resample"] AND [name CONTAINS "WAvg:"]))');

% Process: Compute sources [2018]

sFiles = bst_process('CallProcess', 'process_inverse_2018', sFiles, [], ...

'output', 1, ... % Kernel only: shared

'inverse', struct(...

'Comment', 'MN: EEG', ...

'InverseMethod', 'minnorm', ...

'InverseMeasure', 'amplitude', ...

'SourceOrient', {{'fixed'}}, ...

'Loose', 0.2, ...

'UseDepth', 1, ...

'WeightExp', 0.5, ...

'WeightLimit', 10, ...

'NoiseMethod', 'reg', ...

'NoiseReg', 0.1, ...

'SnrMethod', 'fixed', ...

'SnrRms', 1e-06, ...

'SnrFixed', 3, ...

'ComputeKernel', 1, ...

'DataTypes', {{'EEG'}}));

% Save and display report

ReportFile = bst_report('Save', sFiles);

bst_report('Open', ReportFile);

% bst_report('Export', ReportFile, ExportDir);

BST_INVERSE (2018) > NOTE: Cross Covariance between sensor modalities IS NOT CALCULATED in the noise covariance matrix

BST_INVERSE > Rank of the 'EEG' channels, keeping 116 noise eigenvalues out of 118 original set

BST_INVERSE > Using the 'reg' method of covariance regularization.

BST_INVERSE > Diagonal of 10.0% of the average eigenvalue added to covariance matrix.

BST_INVERSE > Using 'fixed' surface orientations

BST_INVERSE > Rank of leadfield matrix is 116 out of 118 components

BST_INVERSE > Confirm units

BST_INVERSE > Assumed RMS of the sources is 1.87 nA-m

BST_INVERSE > Assumed SNR is 9.0 (9.5 dB)
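
To narrow down which subject stops the pipeline, something like the following could be run after the file selection step and before `process_inverse_2018`. This is only a rough sketch, not a tested solution: it assumes `sFiles` is the struct array returned by `process_select_search`, that each data file stores a `ChannelFlag` vector where 1 means good and -1 means bad, and that the Brainstorm functions `in_bst_data`, `in_bst_channel` and `channel_find` are on the path. The helper name `countGood` is made up for this example.

```matlab
% Sketch: list the selected files that have no good EEG channels.
% Assumption: ChannelFlag uses 1 for good channels and -1 for bad ones.
countGood = @(flag, idx) nnz(flag(idx) == 1);   % good channels among indices idx

% Guard so the snippet does nothing if no files were selected
if exist('sFiles', 'var') && ~isempty(sFiles)
    for i = 1:numel(sFiles)
        % Load only the channel flags of the data file
        DataMat = in_bst_data(sFiles(i).FileName, 'ChannelFlag');
        % Load the channel file to find which channels are EEG
        ChannelMat = in_bst_channel(sFiles(i).ChannelFile);
        iEeg = channel_find(ChannelMat.Channel, 'EEG');
        % Report files where every EEG channel is flagged as bad
        if countGood(DataMat.ChannelFlag, iEeg) == 0
            fprintf('No good EEG channels: %s\n', sFiles(i).FileName);
        end
    end
end
```

Any file printed by this loop would then need its bad-channel flags reviewed before source computation.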