Contents

- Tutorial 1: Create a new protocol

- Tutorial 2: Import the subject anatomy

- Tutorial 3: Display the anatomy

- Tutorial 4: Channel file / MEG-MRI coregistration

- Tutorial 5: Review continuous recordings

- Tutorial 6: Multiple windows

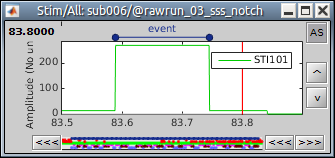

- Tutorial 7: Event markers

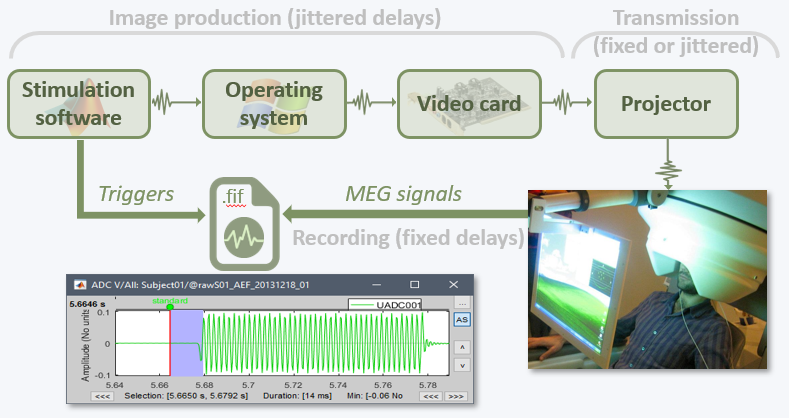

- Tutorial 8: Stimulation delays

- Tutorial 9: Select files and run processes

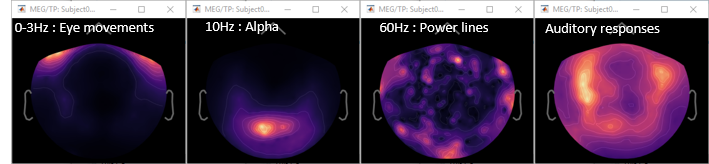

- Tutorial 10: Power spectrum and frequency filters

- Tutorial 11: Bad channels

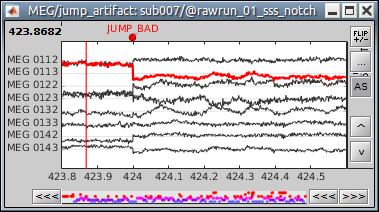

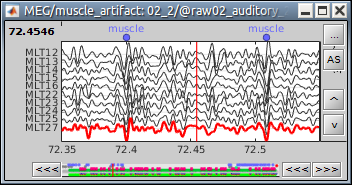

- Tutorial 12: Artifact detection

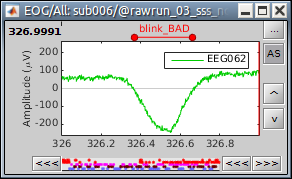

- Tutorial 13: Artifact cleaning with SSP

- Tutorial 14: Additional bad segments

- Tutorial 15: Import epochs

- Tutorial 16: Average response

Tutorial 1: Create a new protocol

Authors: Francois Tadel, Elizabeth Bock, Sylvain Baillet

Contents

- How to read these tutorials

- Presentation of the experiment

- Brainstorm folders

- Starting Brainstorm for the first time

- Main interface window

- The text is too small

- Database structure

- Database files

- Create your first protocol

- Protocol exploration

- Set up a backup

- Changing the temporary folder

- Summary

- Roadmap

- Moving a database

How to read these tutorials

The goal of these introduction tutorials is to guide you through most of the features of the software. All the pages use the same example dataset. The results of one section are usually reused in the following section, so read these pages in order.

Some pages may contain too many details for your level of interest or competence. The sections marked as [Advanced] are not required to follow the tutorials to the end. You can skip them the first time you go through the documentation and come back to the theory later if needed.

Please follow these tutorials first with the data we provide. This way you can focus on learning how to use the software. It is better to start with data that is easy to analyze. After going through all the tutorials, you should be comfortable enough with the software to start analyzing your own recordings.

You will observe minor differences between the screen captures presented in these pages and what you obtain on your computer: different colormaps, different values, etc. Because the software is constantly improved, some results have changed since we produced the illustrations. When the changes are minor and the interpretation of the figures remains the same, we do not necessarily update the images in the tutorial.

If you are interested only in EEG or intra-cranial recordings, do not assume that a MEG-based tutorial is not suited to you. Most practical aspects of data manipulation are very similar in EEG and MEG. Start by reading these introduction tutorials using the MEG example dataset provided. Once you are familiar with the software, go through the tutorial "EEG and Epilepsy" for more details about the processing steps that are specific to EEG, or read one of the SEEG/ECOG tutorials available in the section "Other analysis scenarios".

Presentation of the experiment

All the introduction tutorials are based on a simple auditory oddball experiment:

- One subject, two acquisition runs of 6 minutes each.

- Subject stimulated binaurally with intra-aural earphones.

- Each run contains 200 regular beeps and 40 easy deviant beeps.

- Recordings with a CTF MEG system with 275 axial gradiometers.

- Anatomy of the subject: 1.5T MRI, processed with FreeSurfer 5.3.

- More details about this dataset will be given throughout the tutorials.

Full dataset description available on this page: Introduction dataset.

Brainstorm folders

Brainstorm needs different directories to work properly. If you put everything in the same folder, you would run into many problems. Try to understand this organization before creating a new database.

1. Program directory: "brainstorm3"

- Contains all the program files: Matlab scripts, compiled binaries, templates, etc.

- There is no user data in this folder.

- You can delete it and replace it with a newer version at any time; your data will remain safe.

- Recommended location:

Windows: Documents\brainstorm3

Linux: /home/username/brainstorm3

MacOS: Documents/brainstorm3

2. Database directory: "brainstorm_db"

- Created by the user.

- Contains all the Brainstorm database files.

- Managed by the application: do not move, delete or add files by yourself.

- Recommended location:

Windows: Documents\brainstorm_db

Linux: /home/username/brainstorm_db

MacOS: Documents/brainstorm_db

3. User directory: ".brainstorm"

Created at Brainstorm startup. Typical location:

Windows: C:\Users\username\.brainstorm

Linux: /home/username/.brainstorm

MacOS: /Users/username/.brainstorm

- Contains:

brainstorm.mat: Brainstorm user preferences.

defaults/: Anatomy templates downloaded by Brainstorm.

mex/: Some mex files that have to be recompiled.

plugins/: Plugins downloaded by Brainstorm (see tutorial Plugins).

process/: Personal process folder (see tutorial How to write your own process).

reports/: Execution reports (see tutorial Run processes).

tmp/: Temporary folder, emptied (with user confirmation) every time Brainstorm is started.

You may have to change the location of the temporary folder if you have a limited amount of storage or a limited quota in your home folder (see below).

Be sure that the paths to the Program, Database, and User directories do not contain special characters. See related forum post.

4. Original data files:

- Recordings you acquired and want to process with Brainstorm.

Put them wherever you want but not in any of the previous folders.
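The folder rules above can be checked before you create a database. The following Python sketch is not part of Brainstorm, and the names `check_layout` and `is_inside` are hypothetical. It makes one assumption: "special characters" means anything other than ASCII letters, digits, spaces and the usual path characters.

```python
import re
from pathlib import Path

# Assumption: "special characters" = anything other than ASCII letters, digits,
# spaces, and the usual path characters (_ - . : / \).
SAFE_PATH = re.compile(r"[A-Za-z0-9_.:/\\ -]*")


def is_inside(child: Path, parent: Path) -> bool:
    """True if `child` is `parent` itself or any folder below it."""
    try:
        child.resolve().relative_to(parent.resolve())
        return True
    except ValueError:
        return False


def check_layout(program_dir, db_dir, user_dir, raw_dir) -> list:
    """Return a list of problems found in the folder layout (empty list = OK)."""
    dirs = {"program": Path(program_dir), "database": Path(db_dir),
            "user": Path(user_dir), "original data": Path(raw_dir)}
    problems = []
    for name, folder in dirs.items():
        if not SAFE_PATH.fullmatch(str(folder)):
            problems.append(f"{name} path contains special characters: {folder}")
    # The four folders must be separate: none of them may sit inside another one.
    for name_a, folder_a in dirs.items():
        for name_b, folder_b in dirs.items():
            if name_a != name_b and is_inside(folder_a, folder_b):
                problems.append(f"{name_a} folder is inside the {name_b} folder")
    return problems


print(check_layout("/home/username/brainstorm3", "/home/username/brainstorm_db",
                   "/home/username/.brainstorm", "/home/username/data/sample_introduction"))
```

An empty list means the layout follows the recommendations. Any message points to a folder that should be moved before you start.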

Starting Brainstorm for the first time

If you haven't read the installation instructions, do it now: Installation.

Start Brainstorm from Matlab or with the compiled executable.

BST> Starting Brainstorm:
BST> =================================
BST> Version: 28-Jan-2015
BST> Checking internet connectivity... ok
BST> Compiling main interface files...
BST> Emptying temporary directory...
BST> Deleting old process reports...
BST> Loading configuration file...
BST> Initializing user interface...
BST> Starting OpenGL engine... hardware
BST> Reading plugins folder...
BST> Loading current protocol...
BST> =================================

- Read and accept the license file.

- Select your Brainstorm database directory (brainstorm_db).

If you do something wrong and don't know how to go back, you can always re-initialize Brainstorm by typing "brainstorm reset" in the Matlab command window, or by clicking on [Reset] in the software preferences (menu File > Edit preferences).

Main interface window

The Brainstorm window described below is designed to remain on one side of the screen. All the desktop space not covered by this window will be used for opening other figures.

Do not try to maximize this window, or the automatic management of the data figures might not work correctly. Keep it on one side of your screen, just large enough so you can read the file names in the database explorer.

The text is too small

If you have a high-resolution screen, the text and icons in the Brainstorm window may not scale properly, making the interface impossible to use. Select the menu File > Edit preferences: the slider at the bottom of the options window lets you increase the scaling ratio of the Brainstorm interface. If this does not help, try changing the scaling options in your operating system preferences.

Database structure

Brainstorm allows you to organize your recordings and analyses with three levels of definition:

Protocol

- Group of datasets that have to be processed or displayed together.

- A protocol can include one or several subjects.

Some people would prefer to call this an experiment or a study.

- You can only open one protocol at a time.

- Your Brainstorm database is a collection of protocols.

Subject

- A person who participated in a given protocol.

- A subject contains two categories of information: anatomy and functional data.

Anatomy: Includes at least an MRI volume and some surfaces extracted from the MRI.

Functional data: Everything that is related to the MEG/EEG acquisition.

- For each subject, it is possible to use either the actual MRI of the person or one of the anatomy templates available in Brainstorm.

Sub-folders

- For each subject, the functional files can be organized in different sub-folders.

- These folders can represent different recording sessions (aka acquisition runs) or different experimental conditions.

The current structure of the database does not allow more than one level of sub-folders for each subject. It is not possible to organize the files by session AND by condition.

Database files

- The database folder "brainstorm_db" is managed completely from the graphic user interface (GUI).

- All the files in the database have to be imported through the GUI. Do not try to copy files into the brainstorm_db folder yourself; it won't work.

- Everything in this folder is stored in Matlab .mat format, with the following architecture:

Anatomy data: brainstorm_db/protocol_name/anat/subject_name

Functional data: brainstorm_db/protocol_name/data/subject_name/subfolder/

- Most of the files you see in the database explorer in Brainstorm correspond to files on the hard drive, but there is no one-to-one correspondence. Extra information is stored in each directory to save properties, comments, default data, links between different items, etc. This is one of the reasons why you should not manipulate the files in the Brainstorm database directory directly.

- The structure of the database is saved in the user preferences, so when you start the program or change protocol, there is no need to read all the files on the hard drive again.

If Brainstorm or Matlab crashes before the database structure is correctly saved, the files that are displayed in the Brainstorm database explorer may differ from what is actually on the disk. When this happens, you can force Brainstorm to rebuild the structure from the files on the hard drive: right-click on a folder > Reload.
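If you only need to locate files on disk, for example to check what a backup contains, the two path patterns above can be written as small read-only helpers. Never use them to write into the database. This is a hypothetical Python sketch, and `Run01` is an illustrative sub-folder name. The check in `data_dir` reflects the one-level limit on sub-folders described in the Database structure section.

```python
from pathlib import PurePosixPath


def anat_dir(db_root: str, protocol: str, subject: str) -> PurePosixPath:
    """brainstorm_db/protocol_name/anat/subject_name"""
    return PurePosixPath(db_root, protocol, "anat", subject)


def data_dir(db_root: str, protocol: str, subject: str, subfolder: str) -> PurePosixPath:
    """brainstorm_db/protocol_name/data/subject_name/subfolder"""
    # Only one level of sub-folders is allowed for each subject.
    if "/" in subfolder or "\\" in subfolder:
        raise ValueError("only one level of sub-folders per subject")
    return PurePosixPath(db_root, protocol, "data", subject, subfolder)


print(anat_dir("brainstorm_db", "TutorialIntroduction", "Subject01"))
print(data_dir("brainstorm_db", "TutorialIntroduction", "Subject01", "Run01"))
```

`PurePosixPath` always joins with "/". On Windows, `pathlib.Path` would produce native separators instead.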

Create your first protocol

Menu File > New protocol.

Edit the protocol name and enter: "TutorialIntroduction".

It will automatically update the anatomy and datasets paths. Do not edit these paths manually unless you work with a non-standard database organization and know exactly what you are doing.

- Default properties for the subjects: These are the default settings used when creating new subjects. You can then override these settings for each subject individually.

Default anatomy: (MRI and surfaces)

No, use individual anatomy:

Select when you have individual MRI scans for all the participants of your study.

Yes, use default anatomy:

Select when you do not have individual scans for the participants, and you would like to use one of the anatomy templates available in Brainstorm.

Default channel file: (Sensors names and positions)

No, use one channel file per acquisition run: Default for all studies

Different head positions: Select this if you may have different head positions for one subject. This is usually not the case in EEG, where the electrodes stay in place for all the experiment. In MEG, this is a common setting: one recording session is split in multiple acquisition runs, and the position of the subject's head in the MEG might be different between two runs.

Different number of channels: Another use case is when you have multiple recordings for the same subject that do not have the same number of channels. You cannot share the channel file if the list of channels is not strictly the same for all the files.

Different cleaning procedures: If you are cleaning artifacts from each acquisition run separately using SSP or ICA projectors, you cannot share the channel file between them (the projectors are saved in the channel file).

Yes, use one channel file per subject: Use with caution

This setting can be adapted to EEG: the electrodes are in the same position for all the files recorded on one subject, and the number of channels is the same for all the files. However, to use this option, you should not apply SSP/ICA projectors to the recordings, or they should be computed for all the files at once. This may lead to confusion and sometimes to manipulation errors. For this reason, we decided not to recommend this setting.

Yes, use only one global channel file: Not recommended

This is never a recommended setting. It could be used for an EEG study where you use only standard EEG positions on a standard anatomy, but only if you are not doing any advanced source reconstruction. If you share the position of the electrodes between all the subjects, you also share the source models, which depend on the quality of the recordings for each subject. This is difficult to understand at this stage; it will make more sense later in the tutorials.
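The conditions above can be summarized as a small decision rule. This Python sketch is only a reading aid, not Brainstorm code. The `Recordings` fields are hypothetical names for the three situations described. The global channel file is deliberately never proposed because it is not recommended.

```python
from dataclasses import dataclass

PER_RUN = "No, use one channel file per acquisition run"
PER_SUBJECT = "Yes, use one channel file per subject"


@dataclass
class Recordings:
    same_head_position: bool  # sensors at the same place in all files (e.g. one EEG cap session)
    same_channel_list: bool   # strictly identical list of channels in all files
    per_run_projectors: bool  # SSP/ICA projectors computed separately for each run


def allowed_channel_file_options(rec: Recordings) -> list:
    """List the usable 'Default channel file' options, recommended option first."""
    options = [PER_RUN]  # the default, always valid
    if rec.same_head_position and rec.same_channel_list and not rec.per_run_projectors:
        options.append(PER_SUBJECT)  # technically possible, but "use with caution"
    # "Yes, use only one global channel file" is never proposed: not recommended.
    return options


# The MEG setup of this tutorial: the head position may change between runs.
meg = Recordings(same_head_position=False, same_channel_list=True, per_run_projectors=False)
print(allowed_channel_file_options(meg))
```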

In the context of this study, we are going to use the following settings:

No, use individual anatomy: Because we have access to a T1 MRI scan of the subject.

No, use one channel file per condition/run: The typical MEG setup.

Click on [Create].

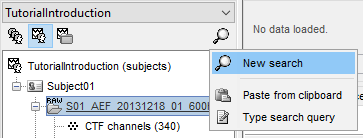

Protocol exploration

The protocol is created and you can now see it in the database explorer. It is represented by the top-most node in the tree.

- You can switch between anatomy and functional data with the three buttons just above the database explorer. Read the tooltips of the buttons to see which one does what.

In the Anatomy view, there is a Default anatomy node. It contains the ICBM152 anatomy, distributed by the Montreal Neurological Institute (MNI), which is one of the template anatomy folders that are distributed with Brainstorm.

The Default anatomy node contains the MRI and the surfaces that are used for the subjects without an individual anatomy, or for registering multiple subjects to the same anatomy for group analysis.

There are no subjects in the database yet, so the Functional data views are empty.

Everything you can do with an object in the database explorer (anatomy files, subjects, protocol) is accessible by right-clicking on it.

Set up a backup

Like any computer work, your Brainstorm database is always at risk. Software bugs, computer or network crashes and manipulation errors can cause the loss of months of data curation and computation. If the database structure gets corrupted, or if you accidentally delete or modify some files, you might not be able to get your data back. There is no undo button!

You created your database; now take some time to find a way to make it safe. If you are not familiar with backup systems, watch some online tutorials explaining how to set up an automatic daily or weekly backup of your sensitive data. It might seem annoying and useless now, but it could save you weeks in the future.

Changing the temporary folder

Some processes need to create temporary files on the hard drive. For example, when epoching MEG/EEG recordings, Brainstorm first creates a temporary folder "import_yymmdd_hhmmss", stores all the epochs in it, then moves them to the database when the epoching process is completed. The name of the temporary folder indicates its creation time (year/month/day_hour_minutes_seconds). At the end of the process, all the temporary files should be deleted automatically.
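The naming pattern of these temporary folders (import_yymmdd_hhmmss) maps directly onto Python's `strftime`/`strptime` format codes. This sketch only reproduces the pattern described above, and the helper names are hypothetical. It can help you tell when a leftover folder was created.

```python
from datetime import datetime

PATTERN = "import_%y%m%d_%H%M%S"  # import_yymmdd_hhmmss


def temp_folder_name(when: datetime) -> str:
    """Build a folder name following the import_yymmdd_hhmmss pattern."""
    return when.strftime(PATTERN)


def temp_folder_created(name: str) -> datetime:
    """Recover the creation time encoded in such a folder name."""
    return datetime.strptime(name, PATTERN)


print(temp_folder_name(datetime(2015, 1, 28, 14, 5, 9)))  # import_150128_140509
print(temp_folder_created("import_150128_140509"))        # 2015-01-28 14:05:09
```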

The default folder where Brainstorm stores its temporary files is located in the user folder ($HOME/.brainstorm/tmp/), so before importing recordings or calculating large models, you have to make sure you have enough storage space available.

If you work on a centralized network where all the computers share the same resources, the system administrators may impose limited disk quotas on all users and encourage them to use local hard drives instead of the limited, shared user folder. In such a context, Brainstorm may quickly fill up your quota and at some point block your user account.

If the amount of storage space you have for your user folder is limited (less than 10Gb), you may have to change the temporary folder used by Brainstorm. Select the menu File > Edit preferences and set the temporary directory to a folder that is local to your computer, in which you won't suffer from any storage limitation.
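Before importing large datasets, you can check how much free space the drive holding the temporary folder has. This Python sketch assumes the default location $HOME/.brainstorm/tmp and the 10 GB threshold mentioned above. The function names are hypothetical. `shutil.disk_usage` reports free space on the file system, which may not reflect a per-user network quota. In that case, ask your system administrators.

```python
import shutil
from pathlib import Path

GB = 1024 ** 3  # bytes in one (binary) gigabyte


def default_tmp_dir() -> Path:
    """Default temporary folder: $HOME/.brainstorm/tmp"""
    return Path.home() / ".brainstorm" / "tmp"


def free_space_gb(folder) -> float:
    """Free space, in GB, on the file system that holds `folder`."""
    path = Path(folder).expanduser().resolve()
    while not path.exists():  # the folder may not exist yet: use its closest parent
        path = path.parent
    return shutil.disk_usage(path).free / GB


def has_enough_space(folder, min_free_gb: float = 10.0) -> bool:
    return free_space_gb(folder) >= min_free_gb


tmp = default_tmp_dir()
print(f"{tmp}: {free_space_gb(tmp):.1f} GB free, enough: {has_enough_space(tmp)}")
```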

If a process crashes or is killed before it deletes its temporary files, they remain in the temporary folder until explicitly deleted. When starting Brainstorm, you are always offered the option to delete the previous temporary files. Always accept, unless they correspond to files generated by another session running simultaneously. Alternatively, you can delete these temporary files by clicking on the Empty button in the same preferences window. More information in the Scripting tutorial.

Summary

- Different folders for:

the program (brainstorm3).

the database (brainstorm_db).

your original recordings.

- Never modify the contents of the database folder by yourself.

- Do not put the original recordings in any of the Brainstorm folders, import them with the interface.

- Do not try to maximize the Brainstorm window: keep it small on one side of your screen.

Roadmap

The workflow described in these introduction tutorials includes the following steps:

- Importing the anatomy of the subjects, the definition of the sensors and the recordings.

- Pre-processing, cleaning, epoching and averaging the EEG/MEG recordings.

- Estimating sources from the imported recordings.

- Computing measures from the brain signals of interest in sensor space or source space.

Moving a database

If you are running out of disk space or need to share your database with someone else, you may need to copy or move your protocols to a different folder or drive. Brainstorm handles each protocol independently. Therefore, to move the entire database folder (brainstorm_db), you need to repeat the operations below for each protocol in your database.

Copy the raw files

The original continuous files are not saved in the Brainstorm database. The "Link to raw files" entries depend on a static path on your local computer and cannot easily be moved to a new computer. Before moving the database to a different computer or hard drive, you can copy the raw files into it with the menu File > Export protocol > Copy raw files to database. This makes local copies in .bst format of all your original files. The resulting protocol is larger but portable. This can also be done file by file: right-click > File > Copy to database.

Export a protocol

The easiest option to share a protocol with a collaborator is to export it as a zip file.

Export: Use the menu File > Export protocol > Export as zip file.

Avoid using spaces and special characters in the zip file name.

Import: Use the menu File > Load protocol > Load from zip file.

The name of the protocol created in the brainstorm_db folder is the name of the zip file. If a protocol with this label already exists, Brainstorm returns an error. To import the protocol under a different name, rename the zip file before importing it.

Size limitation: This solution is limited to smaller databases. Creating zip files larger than a few GB can take a long time or even crash. For larger databases, prefer the other options below.
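The naming rule for imported zip files can be checked before loading. This hypothetical Python helper mirrors the behavior described above: the protocol name is the zip file name, and loading fails if that name already exists. The allowed character set is an assumption about what "no spaces and special characters" means. You must supply the list of existing protocols yourself.

```python
import re
from pathlib import Path

SAFE_NAME = re.compile(r"[A-Za-z0-9_-]+")  # assumption: letters, digits, "_" and "-"


def protocol_name_from_zip(zip_path: str, existing_protocols: set) -> str:
    """Predict the protocol name created when loading `zip_path`."""
    name = Path(zip_path).stem  # "TutorialIntroduction.zip" -> "TutorialIntroduction"
    if not SAFE_NAME.fullmatch(name):
        raise ValueError(f"avoid spaces and special characters: {name!r}")
    if name in existing_protocols:
        raise ValueError(f"protocol {name!r} already exists: rename the zip file first")
    return name


print(protocol_name_from_zip("TutorialIntroduction_copy.zip", {"TutorialIntroduction"}))
```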

Export a subject

Similar to the protocol export, but extracts only the files needed by a single subject.

Export: Right-click on the subject > File > Export subject.

Import as new protocol: Use the menu File > Load protocol > Load from zip file.

Import in an existing protocol: Use the menu File > Load protocol > Import subject from zip.

Move a protocol

To move a protocol to a different location:

- [Optional] Set up a backup of your entire brainstorm_db folder if you haven't done it yet. There will be no undo button to press if something bad happens.

- [Optional] Copy the raw files to the database (see above)

Unload: Menu File > Delete protocol > Only detach from database.

Move: Move the entire protocol folder to a different location. Remember a protocol folder should be located in the "brainstorm_db" folder and should contain only two subfolders "data" and "anat". Never move or copy a single subject manually.

Load: Menu File > Load protocol > Load from folder > Select the new location of the protocol

- If you want to move the entire "brainstorm_db" folder at once, make sure you detach all the protocols in your Brainstorm installation first.
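Before the Load step, a quick check can confirm that the moved folder still has the expected layout. This Python sketch is hypothetical and only inspects sub-folder names. It does not validate the .mat files inside; Brainstorm's own loading remains the real test.

```python
from pathlib import Path

EXPECTED_SUBFOLDERS = {"anat", "data"}


def check_protocol_folder(protocol_dir) -> list:
    """Return problems found in a protocol folder before loading it (empty = OK)."""
    folder = Path(protocol_dir)
    if not folder.is_dir():
        return [f"not a folder: {folder}"]
    subfolders = {entry.name for entry in folder.iterdir() if entry.is_dir()}
    problems = [f"missing subfolder: {name}"
                for name in sorted(EXPECTED_SUBFOLDERS - subfolders)]
    problems += [f"unexpected subfolder: {name}"
                 for name in sorted(subfolders - EXPECTED_SUBFOLDERS)]
    return problems


# Example (hypothetical path):
# check_protocol_folder("/new/drive/brainstorm_db/TutorialIntroduction")
```

An "unexpected subfolder" message often means a subject folder was copied by hand, which the steps above warn against.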

Duplicate a protocol

To duplicate a protocol in the same computer:

Copy: Make a full copy of the protocol to duplicate in the brainstorm_db folder, e.g. TutorialIntroduction => TutorialIntroduction_copy. Avoid using any space or special character in the new folder name.

Load: Menu File > Load protocol > Load from folder > Select the new protocol folder
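The Copy step can also be scripted instead of done in a file manager; the Load step must still go through the menu. In this hypothetical Python sketch, `duplicate_protocol` copies the whole protocol folder with `shutil.copytree` and refuses names containing spaces or special characters (the character set is assumed). As a precaution, avoid running it while Brainstorm is writing to that protocol.

```python
import re
import shutil
from pathlib import Path

SAFE_NAME = re.compile(r"[A-Za-z0-9_-]+")  # assumption: no spaces or special characters


def duplicate_protocol(db_dir, source: str, copy_name: str) -> Path:
    """Copy db_dir/source to db_dir/copy_name (the 'Copy' step only)."""
    if not SAFE_NAME.fullmatch(copy_name):
        raise ValueError(f"avoid spaces and special characters: {copy_name!r}")
    src, dst = Path(db_dir, source), Path(db_dir, copy_name)
    if dst.exists():
        raise FileExistsError(dst)
    shutil.copytree(src, dst)  # full recursive copy, including anat/ and data/
    return dst


# Example (hypothetical database location):
# duplicate_protocol("/home/username/brainstorm_db", "TutorialIntroduction",
#                    "TutorialIntroduction_copy")
```

After the copy, use File > Load protocol > Load from folder on the new folder.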

Tutorial 2: Import the subject anatomy

Authors: Francois Tadel, Elizabeth Bock, Sylvain Baillet, Chinmay Chinara

Brainstorm organizes most of its database and processing stream around handling anatomical information together with the MEG/EEG recordings. Its primary focus is estimating brain sources from MEG/EEG, which ideally requires accurate spatial modelling of the head and sensors.

If you don't have anatomical scans of your subjects, or are not interested in any spatial display, various solutions will be presented throughout the tutorials, starting with the last section of this page. Be patient and follow everything as instructed; you will get to the information you need.


Download

The dataset we will use for the introduction tutorials is available online.

Go to the Download page of this website, and download the file: sample_introduction.zip

- Unzip it in a folder that is not in any of the Brainstorm folders (program folder or database folder).

- It is really important that you always keep your original data files in a separate folder: the program folder can be deleted when updating the software, and the contents of the database folder are supposed to be manipulated only by the program itself.

Create a new subject

The protocol is currently empty. You need to add a new subject before you can start importing data.

- Switch to the anatomy view (first button just above the database explorer).

Right-click on the top folder TutorialIntroduction > New subject.

Alternatively: Use the menu File > New subject.

The window that opens lets you edit the subject name and settings. It offers the same options again for the default anatomy and channel file, so you can override the protocol-level default values for this subject if needed. See the previous tutorial for help.

- Keep all the default settings and click on [Save].

Right-click doesn't work

If right-click doesn't work anywhere in the Brainstorm interface and you cannot see the popup menus in the database explorer, try connecting a standard external two-button mouse. Some Apple pointing devices do not interact very well with Java/Matlab.

Alternatively, try to change the configuration of your trackpad in the system preferences.

Import the anatomy

For estimating the brain sources of the MEG/EEG signals, the anatomy of the subject must include at least three files: a T1-weighted MRI volume, the envelope of the cortex and the surface of the head.

Brainstorm cannot extract the cortex envelope from the MRI; you have to run this operation with an external program of your choice. The results of the MRI segmentation obtained with the following programs can be imported automatically: FreeSurfer, BrainSuite, BrainVISA, CAT12, and CIVET. CAT12 is the only application fully interfaced with Brainstorm, and it is available for download as a Brainstorm plugin. However, FreeSurfer is more widely considered a reference in this domain, so it is the solution we demonstrate in these tutorials.

The anatomical information of this study was acquired with a 1.5T MRI scanner; the subject had a marker placed on the left cheek. The MRI volume was processed with FreeSurfer 7.1. The result of this automatic segmentation is available in the downloaded folder sample_introduction/anatomy.

- Make sure that you are still in the anatomy view for your protocol.

Right-click on the subject folder > Import anatomy folder:

Set the file format: FreeSurfer + Volume atlases

Select the folder: sample_introduction/anatomy

- Click on [Open]

Number of vertices of the cortex surface: 15000 (default value).

This option defines the number of points that will be used to represent the cortex envelope. It will also be the number of electric dipoles we will use to model the activity of the brain. This default value of 15000 was chosen empirically as a good balance between the spatial accuracy of the models and the computation speed. More details later in the tutorials.

The MRI views should be correct (axial/coronal/sagittal), you just need to make sure that the marker on the cheek is really on the left of the MRI. Then you can proceed with the fiducial selection.

Using the MRI Viewer

To help define these fiducial points, let's start with a brief description of the MRI Viewer:

Navigate in the volume:

- Click anywhere on the MRI slices to move the cursor.

- Use the sliders below the views.

- Use the mouse wheel to scroll through slices (after clicking on the view to select it).

On a MacBook trackpad, use a two-finger up/down swipe to scroll.

Zoom: Use the magnifying glass buttons at the bottom of the figure, or the corresponding shortcuts (keyboard [+]/[-], or [CTRL]+mouse wheel).

Image contrast: Click and hold the right mouse button on one image, then move up and down.

Select a point: Place the cursor at the spot you want and click on the corresponding [Set] button.

Display the head surface: Click on the button "View 3D head surface" to compute and display the head surface. Click on the surface to move the cursor in the MRI Viewer figure to that position. When the fiducials are not defined, they appear floating a few centimeters away from the head.

More information about all the coordinates displayed in this figure: CoordinateSystems

- Popup operations (right-click on the figure):

Colormap: Edit the colormap, detailed in another tutorial.

Anatomical atlas: Select, show and hide anatomical parcellations (aka anatomical atlases).

Views: Set one of the predefined orientations.

Apply MNI coordinates to all figures: Set the same MNI coordinates to other MRI viewer figures.

Apply SCS coordinates to all figures: Set the same SCS coordinates to other MRI viewer figures.

View in 3D orthogonal slices: Open the MRI volume as 3D orthogonal slices, and set the same slices as in the MRI viewer.

Snapshots: Save images or movies from this figure.

Figure: Change some of the figure options or edit it using the Matlab tools.

- Note the indications in the right part of the popup menu: they are the keyboard shortcuts for each menu item.

Fiducial points

Brainstorm uses a few reference points defined in the MRI to align the different files:

Required: Three points to define the Subject Coordinate System (SCS):

- Nasion (NAS), Left ear (LPA), Right ear (RPA)

- This is used to register the MEG/EEG sensors on the MRI.

Optional: Three additional anatomical landmarks (NCS):

- Anterior commissure (AC), Posterior commissure (PC) and any interhemispheric point (IH).

- Computing the MNI normalization sets these points automatically (see below), therefore setting them manually is not required.

For instructions on finding these points, read the following page: CoordinateSystems.

Nasion (NAS)

In this study, we used the real nasion position instead of the CTF coil position.

MRI coordinates: 127, 213, 139

Left ear (LPA)

In this study, we used the connection points between the tragus and the helix (red dot on the CoordinateSystems page) instead of the CTF coil position or the left and right preauricular points.

MRI coordinates: 52, 113, 96

Right ear (RPA)

MRI coordinates: 202, 113, 91

Anterior commissure (AC)

MRI coordinates: 127, 119, 149

Posterior commissure (PC)

MRI coordinates: 128, 93, 141

Inter-hemispheric point (IH)

This point can be anywhere in the mid-sagittal plane, these coordinates are just an example.

MRI coordinates: 131, 114, 206

Type the coordinates

If you already have the coordinates of the fiducials written down somewhere, you can type or copy-paste them instead of pointing at them with the cursor: right-click on the figure > Edit fiducials positions > MRI coordinates.

Validation

- Once you are done with the fiducial selection, click on the [Save] button, at the bottom-right corner of the MRI Viewer figure.

The automatic import of the FreeSurfer folder resumes. At the end you get many new files in the database and a 3D view of the cortex and scalp surface. Here again you can note that the marker is visible on the left cheek, as expected.

- The next tutorial will describe these files and explore the various visualization options.

Close all figures and clear memory: Use this button in the toolbar of the Brainstorm window to close all the open figures at once and to empty the memory from all the temporary data that the program keeps in memory for faster display.

Graphic bugs

If you do not see the cortex surface through the head surface, or if you observe any other issue with the 3D display, there might be an issue with the OpenGL drivers. You may try the following options:

- Update the drivers for your graphics card.

- Upgrade your version of Matlab.

- Run the compiled version of Brainstorm (see Installation).

- Turn off the OpenGL hardware acceleration: Menu File > Edit preferences > Software or Disabled.

- Send a bug report to MathWorks.

For Linux users with both an integrated GPU and an NVIDIA GPU: if you experience the issues above, or slow navigation in the 3D display (usually with two or more surfaces), verify that you are using the NVIDIA GPU as the primary GPU. More information depending on your distribution: Ubuntu, Debian and Arch Linux.

MNI normalization

For comparing results with the literature or with other imaging modalities, the normalized MNI coordinate system is often used. To be able to get "MNI coordinates" for individual brains, an extra step of normalization is required.

To compute a transformation between the individual MRI and the ICBM152 space, you have two available options, use the one of your choice:

In the MRI Viewer: Click on the link "Click here to compute MNI normalization".

In the database explorer: Right-click on the MRI > MNI normalization.

Select the first option maff8: This method is embedded in Brainstorm and does not require the installation of SPM12. However, it requires the automatic download of the file SPM12 Tissue Probability Maps. If you do not have access to internet, see the instructions on the Installation page.

It is based on an affine co-registration with the MNI ICBM152 template from the SPM software, described in the following article: Ashburner J, Friston KJ, Unified segmentation, NeuroImage 2005.

Note that this normalization does not modify the anatomy, it just saves a transformation that enables the conversion between Brainstorm coordinates and MNI coordinates. After computing this transformation, you have access to one new line of information in the MRI Viewer.

This operation also automatically sets some anatomical points (AC, PC, IH) if they are not defined yet. After the computation, make sure they are correctly positioned. You can run this computation while importing the anatomy, when the MRI Viewer is displayed for the first time: this saves you the trouble of marking the AC/PC/IH points manually.
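For a linear normalization such as maff8, which is based on an affine co-registration, the saved transformation can be pictured as a 4x4 affine matrix in homogeneous coordinates. Converting a point to MNI space is one matrix product, and going back uses the inverse matrix. This Python sketch assumes a matrix that maps subject coordinates (mm) to MNI coordinates (mm). The example matrix is made up for illustration and is not an actual maff8 result. The helper names are hypothetical.

```python
def apply_affine(A, p):
    """Apply a 4x4 affine matrix A (list of 4 rows) to a 3D point p."""
    hom = (p[0], p[1], p[2], 1.0)  # homogeneous coordinates
    return tuple(sum(A[r][c] * hom[c] for c in range(4)) for r in range(3))


def invert_affine(A):
    """Inverse of [[M, t], [0, 0, 0, 1]] is [[M^-1, -M^-1 t], [0, 0, 0, 1]]."""
    (a, b, c), (d, e, f), (g, h, i) = (row[:3] for row in A[:3])
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("the linear part of the transformation is singular")
    m_inv = [[(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
             [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
             [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det]]
    t = [A[0][3], A[1][3], A[2][3]]
    t_inv = [-sum(m_inv[r][k] * t[k] for k in range(3)) for r in range(3)]
    return [m_inv[r] + [t_inv[r]] for r in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


# Made-up subject-to-MNI matrix (NOT a real maff8 result), in millimeters.
SUBJ2MNI = [[1.08, 0.00, 0.02, -1.5],
            [0.00, 0.95, 0.05, 2.0],
            [0.01, -0.04, 1.05, 8.0],
            [0.00, 0.00, 0.00, 1.0]]

p_mni = apply_affine(SUBJ2MNI, (10.0, -20.0, 35.0))
p_back = apply_affine(invert_affine(SUBJ2MNI), p_mni)  # back to subject space
print(p_mni, p_back)
```

This also illustrates why the normalization does not modify the anatomy: only the matrix is stored, and coordinates are converted on demand.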

MacOS troubleshooting

Error "mexmaci64 cannot be opened because the developer cannot be verified":

Alternatives

The head surface looks bad: You can try computing another one with different properties.

No individual anatomy: If you do not have access to an individual MR scan of the subject, or if its quality is too low to be processed with FreeSurfer, you have other options:

If you do not have any individual anatomical data: Use the default anatomy

If you have a digitized head shape of the subject: Warp the default anatomy

Other options for importing the FreeSurfer anatomical segmentation:

Automated import: We selected the menu Import anatomy folder for a semi-manual import, in order to manually select the positions of the anatomical fiducials and the number of vertices of the cortex surface. If you are not interested in setting the positions of the fiducials accurately, you can use the menu Import anatomy folder (auto): it first computes the linear MNI normalization, uses default fiducials defined in MNI space, and automatically uses 15000 vertices for the cortex.

FreeSurfer options: We selected the file format FreeSurfer + Volume atlases to import the ASEG parcellation into the database. This slows down the import and increases the size on the hard drive. If you know you won't use it, select FreeSurfer instead. A third menu is available to also import the cortical thickness as source files in the database.

Tutorial 3: Display the anatomy

Authors: Francois Tadel, Elizabeth Bock, Sylvain Baillet


Anatomy folder

The anatomy of the subject "Subject01" should now contain all the files Brainstorm could import from the FreeSurfer segmentation results:

MRI: T1-weighted MRI, resampled and re-aligned by FreeSurfer.

ASEG / DKT / Desikan-Killiany / Destrieux: Volume parcellations (including subcortical regions)

Head mask: Head surface, generated by Brainstorm.

If this doesn't look good for your subject, you can recalculate another head surface using different parameters: right-click on the subject folder > Generate head surface.

Cortex_336231V: High-resolution pial envelope generated by FreeSurfer.

Cortex_15002V: Low-resolution pial envelope, downsampled from the original one by Brainstorm.

Cortex_cereb_17005V: Low-res pial envelope + cerebellum surface extracted from ASEG

White_*: White matter envelope, high and low resolution.

Mid_*: Surface that represents the mid-point between the white and cortex envelopes.

Subcortical: FreeSurfer subcortical regions, as in the ASEG volume, but tessellated as surfaces.

For more information about the files generated by FreeSurfer, read the FreeSurfer page.

Default surfaces

- There are four possible surface types: cortex, inner skull, outer skull, head.

- For each type of surface, one file is selected as the one to use by default for all the operations.

This selected surface is displayed in green.

- Here, there is only one "head" surface, which is selected.

The mid, cortex and white surfaces can all be used as "cortex" surfaces, but only one can be selected at a time. By default, the low-resolution cortex should be selected and displayed in green.

To select a different cortex surface, you can double-click on it or right-click > Set as default.

MRI Viewer

Right-click on the MRI to get the list of the available display menus:

Open the MRI Viewer. This interface was already introduced in the previous tutorial. It corresponds to the default display menu if you double-click on the MRI from the database explorer. Description of the window:

MIP Anatomy: Maximum Intensity Projection. When this option is selected, the MRI viewer shows the maximum intensity value across all the slices in each direction. This maximum does not depend on the selected slice, therefore if you move the cursor, the image stays the same.

Neurological/Radiological: There are two standard orientations for displaying medical scans. In the neurological orientation, the left hemisphere is on the left of the image; in the radiological orientation, the left hemisphere is on the right of the image.

Coordinates: Position of the cursor in different coordinate systems. See: CoordinateSystems

Colormap: Click on the colorbar and move up/down (brightness) or left/right (contrast)

Popup menu: All the figures have additional options available in a popup menu, accessible with a right-click on the figure. The colormap options will be described later in the tutorials, you can test the other options by yourself.
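The MIP and Neurological/Radiological options can be illustrated on a toy volume. This Python sketch is not Brainstorm code; it assumes the volume is stored as nested lists indexed vol[x][y][z], with x going from left to right, y from posterior to anterior and z from inferior to superior (the Cube convention described later in this tutorial), and that image row index 0 is drawn on the left of the screen.

```python
def mip_axial(vol):
    """Maximum intensity projection along the axial (z) direction.

    Returns a 2D image img[x][y] = max over z of vol[x][y][z]. The result does
    not depend on any selected slice, so it stays the same when the cursor moves.
    """
    return [[max(column) for column in plane] for plane in vol]


def to_radiological(img):
    """Reverse the left-right (x) axis of a 2D image img[x][y].

    In the neurological orientation the left hemisphere (small x) is drawn on
    the left; reversing x puts it on the right (radiological orientation).
    """
    return img[::-1]


vol = [[[1, 5, 2], [0, 0, 7]],
       [[3, 3, 3], [9, 1, 0]]]
mip = mip_axial(vol)          # [[5, 7], [3, 9]]
radio = to_radiological(mip)  # [[3, 9], [5, 7]]
```

Flipping twice returns the neurological view, which is why the two orientations contain exactly the same information.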

MRI contact sheets

You can get collections of slices in any direction (axial, coronal or sagittal) with the popup menus in the database explorer or the MRI Viewer figure.

Zoom: mouse wheel (or two-finger move on a MacBook trackpad)

Move in zoomed image: click + move

Adjust contrast: right click + move up/down

MRI in 3D

Right-click on the MRI file in the database explorer > Display > 3D orthogonal slices.

Simple mouse operations:

Rotate: Click + move. Note that two different types of rotation are available: at the center of the figure, the object follows your mouse; on the sides, it performs a 2D rotation of the image.

Zoom: Mouse wheel, or two-finger move on a MacBook trackpad.

Move: Left+right click + move (or middle-click + move).

Colormap: Click on the colorbar and move up/down (brightness) or left/right (contrast).

Reset view: Double click anywhere on the figure.

Reset colormap: Double-click on the colorbar.

Move slices: Right-click on the slice to move + move (or use the Resect panel in the Surface tab).

- Popup operations (right-click on the figure):

Colormap: Edit the colormap, detailed in another tutorial.

MRI display: For now, contains mostly the MIP option (Maximum Intensity Projection).

Get coordinates: Pick a point in any 3D view and get its coordinates.

Snapshots: Save images or movies from this figure.

Figure: Change some of the figure options or edit it using the Matlab tools.

Views: Set one of the predefined orientations.

- Note the indications in the right part of the popup menu: they represent the keyboard shortcut for each menu.

- Keyboard shortcuts:

Views shortcuts (0,1,2...9 and [=]): Remember them, they will be very useful when exploring the cortical sources. To switch from left to right, it is much faster to press a key than having to rotate the brain with the mouse.

Zoom: Keys [+] and [-] for zooming in and out.

Move slices: [x]=Sagittal, [y]=Coronal, [z]=Axial, hold [shift] for reverse direction.

- Surface tab (in the main Brainstorm window, right of the database explorer):

- This panel is primarily dedicated to the display of the surfaces, but some controls can also be useful for the 3D MRI view.

Transparency: Changes the transparency of the slices.

Smooth: Changes the background threshold applied to the MRI slices. If you set it to zero, you will see the full slices, as extracted from the volume.

Resect: Changes the position of the slices in the three directions.

Surfaces

To display a surface you can either double-click on it or right-click > Display. The tab "Surface" contains buttons and sliders to control the display of the surfaces.

- The mouse and keyboard operations described for the 3D MRI view also apply here.

Smooth: Inflates the surface to make all the parts of the cortex envelope visible.

This is just a display option; it does not actually modify the surface.

Color: Changes the color of the surface.

Sulci: Shows the bottom of the cortical folds with a darker color. We recommend keeping this option selected for the cortex: it helps with the interpretation of source locations on smoothed brains.

Edge: Displays the faces of the surface tessellation.

Resect: The sliders and the buttons Left/Right/Struct at the bottom of the panel allow you to cut the surface or reorganize the anatomical structures in various ways.

Multiple surfaces: If you open two surfaces from the same subject, they will be displayed on the same figure. Then you need to select the surface you want to edit before changing its properties. The list of available surfaces is displayed at the top of the Surface tab.

At the bottom of the Surface tab, you can read the number of vertices and faces in the tessellation.
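The Smooth slider only changes what is displayed. One common way to obtain this kind of inflation is Laplacian smoothing, where each vertex is repeatedly moved toward the average position of its neighbors. The Python sketch below shows that generic technique on a toy mesh; it is an assumption for illustration, not necessarily the algorithm Brainstorm uses. It works on a copy of the vertices, so the original surface is left unchanged, and it uses 0-based face indices (the Faces field in Brainstorm follows Matlab's 1-based indexing).

```python
def vertex_neighbors(n_vertices, faces):
    """Set of neighboring vertex indices for each vertex (0-based faces)."""
    nbrs = [set() for _ in range(n_vertices)]
    for a, b, c in faces:
        nbrs[a].update((b, c))
        nbrs[b].update((a, c))
        nbrs[c].update((a, b))
    return nbrs


def laplacian_smooth(vertices, faces, alpha=0.5, n_iter=10):
    """Return smoothed copies of the vertices; the input is not modified.

    At each iteration, every vertex moves a fraction `alpha` of the way toward
    the mean position of its neighbors.
    """
    nbrs = vertex_neighbors(len(vertices), faces)
    current = [list(p) for p in vertices]
    for _ in range(n_iter):
        updated = []
        for i, p in enumerate(current):
            if not nbrs[i]:  # isolated vertex: leave it in place
                updated.append(p[:])
                continue
            mean = [sum(current[j][k] for j in nbrs[i]) / len(nbrs[i])
                    for k in range(3)]
            updated.append([p[k] + alpha * (mean[k] - p[k]) for k in range(3)])
        current = updated
    return current


# Toy mesh: a tetrahedron.
verts = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
faces = [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]
smoothed = laplacian_smooth(verts, faces, alpha=1.0, n_iter=1)
```

With alpha=1 and one iteration, each vertex of the tetrahedron jumps to the centroid of the three others, for example (1/3, 1/3, 1/3) for the first vertex.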

Get coordinates

- Close all the figures. Open the cortex surface again.

- Right-click on the 3D figure, select "Get coordinates".

- Click anywhere on the cortex surface: a yellow cross appears and the coordinates of the point are displayed in all the available coordinate systems.

You can click on [View/MRI] to see where this point is located in the MRI, using the MRI Viewer.

Subcortical regions: Volume

The standard FreeSurfer segmentation pipeline generates multiple volume parcellations of anatomical regions, all of which include the ASEG subcortical parcellation. Double-click on a volume parcellation to open it for display. This opens the MRI Viewer with two volumes: the T1 MRI as the background, and the parcellation as a semi-transparent overlay.

- Adjust the transparency of the overlay from the Surface tab, slider Transp.

The name of the region under the cursor appears at the top-right corner. The integer before this name is the label of the ROI, i.e., the integer value of the voxel under the cursor in the parcellation volume.

- Close the MRI viewer.

- Double-click again on the subject's MRI to open it in the MRI viewer.

- Observe that the anatomical label is also present at the top-right corner of this figure; in this case, the integer represents the voxel value of the displayed MRI. This label information comes from the ASEG file: whenever there are volume parcellations available for the subject, one of them is loaded in the MRI Viewer by default. The name of the selected parcellation is displayed in the figure title bar.

You can change the selected parcellation with the right-click popup menu Anatomical atlas. You can change the parcellation scheme, disable its use to make the MRI work faster, or show the parcellation volume as an overlay (menu Show atlas). More information in the tutorial Using anatomy templates.

Subcortical regions: Surface

Brainstorm reads the ASEG volume labels and tessellates some of these regions, then groups all the meshes in a large surface file where the regions are identified in an atlas called "Structures". It identifies: 8 bilateral structures (accumbens, amygdala, caudate, hippocampus, pallidum, putamen, thalamus, cerebellum) and 1 central structure (brainstem).

These structures can be useful for advanced source modeling, but will not be used in the introduction tutorials. Please refer to the advanced tutorials for more information: Volume source estimation and Deep cerebral structures.

With the button [Struct] at the bottom of the Surface tab, you can see the structures separately.

Registration MRI/surfaces

The MRI and the surfaces are represented using different coordinate systems and could be misregistered for various reasons. If you are using the automated segmentation pipeline from FreeSurfer or BrainSuite, you should not have any problem, but if something goes wrong, or with more manual import procedures, it is always good to check that the MRI and the surfaces are correctly aligned.

Right-click on the low-res cortex > MRI Registration > Check MRI/surface registration

- The calculation of the interpolation between the MRI and the cortex surface takes a few seconds, but the result is then saved in the database and will be reused later.

The yellow lines represent the re-interpolation of the surface in the MRI volume.

Interaction with the file system

For most manipulations, it is not necessary to know exactly what is going on at the level of the file system, in the Brainstorm database directory. However, many things are not accessible from the Brainstorm interface, so you may sometimes find it useful to manipulate some pieces of data directly from the Matlab command window.

Where are the files ?

- Leave your mouse for a few seconds over any node in the database explorer: a tooltip appears with the name and path of the corresponding file on the hard drive.

Paths are relative to the current protocol path (brainstorm_db/TutorialIntroduction). What is displayed in the Brainstorm window is a comment and may have nothing to do with the real file name. For instance, the file name corresponding to "head mask" is Subject01/tess_head_mask.mat.

Almost all the files in the database are in Matlab .mat format. You can load and edit them easily in the Matlab environment, where they appear as structures with several fields.

Popup menu: File

Right-click on a surface file: many menus can lead you to the files and their contents.

View file contents: Display all the fields in the Matlab .mat file.

View file history: Review the History field in the file, which records all the operations that were performed on the file since it was imported in Brainstorm.

Export to file: Export in one of the supported mesh file formats.

Export to Matlab: Load the contents of the .mat file in the Matlab base workspace. It is then accessible from the Matlab command window.

Import from Matlab: Replace the selected file with the content of a variable from the Matlab base workspace. Useful to save back in the database a structure that was exported and modified manually with the Matlab command window.

Copy / Cut / Paste: Allow you to copy/move files in the database explorer. Keyboard shortcuts for these menus are the standard Windows shortcuts (Ctrl+C, Ctrl+X, Ctrl+V). The database explorer also supports drag-and-drop operations for moving files between different folders.

Delete: Delete a file. Keyboard shortcut: Delete key.

Rename: Change the Comment field in the file. It "renames" the file in the database explorer, but does not change the actual file name on the hard drive. Keyboard shortcut: F2

Copy file path to clipboard: Copies the full file name into the system clipboard, so that you can paste it in any other window (Ctrl+V or Paste menu)

Go to this directory (Matlab): Change the current Matlab path, so that you can access the file from the Matlab Command window or the Matlab Current directory window

Show in file explorer: Open a file explorer window in this directory.

Open terminal in this folder: Start a system console in the file directory (Linux and MacOS only).

What are all these other files ?

If you look in brainstorm_db/TutorialIntroduction with the file explorer of your operating system, you'll find many other directories and files that are not visible in the database explorer.

The protocol TutorialIntroduction is divided into two directories, anat (anatomy) and data (datasets):

Each subject in anat is described by an extra file: brainstormsubject.mat

Each folder in data is described by an extra file: brainstormstudy.mat

anat/@default_subject: Contains the files of the default anatomy (Default anatomy)

data/@default_study: Files shared between different subjects (Global common files)

data/@inter: Results of inter-subject analysis

data/Subject01/@default_study: Files shared between different folders in Subject01

data/Subject01/@intra: Results of intra-subject analysis (across different folders)

On the hard drive: MRI

Right-click on the MRI > File > View file contents:

Structure of the MRI files: subjectimage_*.mat

Comment: String displayed in the database explorer to represent the file.

Cube: [Nsagittal x Ncoronal x Naxial] full MRI volume. Cube(1,1,1) is in the left, posterior, inferior corner.

Voxsize: Size of one voxel in millimeters (sagittal, coronal, axial).

SCS: Defines the Subject Coordinate System. The points below are in MRI coordinates (RAS orientation, in millimeters, i.e., voxel_number * voxel_size). In Brainstorm, voxel numbers are 1-indexed.

NAS: (x,y,z) coordinates of the nasion fiducial.

LPA: (x,y,z) coordinates of the left ear fiducial.

RPA: (x,y,z) coordinates of the right ear fiducial.

R: [3x3] rotation matrix from MRI coordinates to SCS coordinates.

T: [3x1] translation vector from MRI coordinates to SCS coordinates.

Origin: MRI coordinates of the point with SCS coordinates (0,0,0).

NCS: Defines the MNI coordinate system, either with a linear or a non-linear transformation.

AC: (x,y,z) coordinates of the Anterior Commissure.

PC: (x,y,z) coordinates of the Posterior Commissure.

IH: (x,y,z) coordinates of an Inter-Hemispheric point.

- (Linear transformation)

R: [3x3] rotation matrix from MRI coordinates to MNI coordinates.

T: [3x1] translation vector from MRI coordinates to MNI coordinates.

- (Non-linear transformation)

iy: 3D floating point matrix: Inverse MNI deformation field, as in SPM naming conventions. Same size as the Cube matrix, it gives for each voxel its coordinates in the MNI space, and is therefore used to convert from MRI coordinates to MNI coordinates.

y: 3D floating point matrix: Forward MNI deformation field, as in SPM naming conventions. For some MNI coordinates, it gives their correspondence in the original MRI space. To be interpreted, it has to be used with the matrix y_vox2ras.

y_vox2ras: [4x4 double], transformation matrix that converts from voxel coordinates of the y volume to MNI coordinates.

y_method: Algorithm used for computing the normalization ('segment'=SPM12 Segment)

Origin: MRI coordinates of the point with NCS coordinates (0,0,0).

Header: Header from the original file format (.nii, .mgz, ...)

Histogram: Result of the internal analysis of the MRI histogram, mainly to detect background level.

InitTransf: [Ntransform x 2] cell-matrix: Transformations that are applied to the MRI before importing the surfaces. Example: {'vox2ras', [4x4 double]}

Labels: [Nlabels x 3] cell-matrix: For anatomical parcellations, this field contains the names and RGB colors associated with each integer label in the volume. Example:

{0, 'Background', [0 0 0]}

{1, 'Precentral L', [203 142 203]}

History: List of operations performed on this file (menu File > View file history).
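The SCS fields of the MRI file are enough to convert a voxel position into SCS coordinates. Following the convention stated in the SCS field description (MRI coordinates in millimeters = voxel number * voxel size, with 1-indexed voxel numbers), the Python sketch below converts a voxel to MRI coordinates, applies p_scs = R * p_mri + T, and recovers the Origin field by solving R * p + T = 0, which gives p = -R^T * T because R is a rotation. The function names and the numerical values are hypothetical and only meant to illustrate the arithmetic.

```python
def voxel_to_mri(voxel, voxsize):
    """1-indexed voxel numbers -> MRI coordinates in mm (voxel_number * voxel_size).

    A 0-based Python array index i corresponds to voxel number i + 1.
    """
    return [n * s for n, s in zip(voxel, voxsize)]


def mri_to_scs(R, T, p_mri):
    """Apply p_scs = R * p_mri + T (R: 3x3 rotation, T: 3-element translation)."""
    return [sum(R[i][j] * p_mri[j] for j in range(3)) + T[i] for i in range(3)]


def scs_origin_in_mri(R, T):
    """MRI coordinates of the SCS point (0,0,0): solve R*p + T = 0, so p = -R^T * T."""
    return [-sum(R[j][i] * T[j] for j in range(3)) for i in range(3)]


# Hypothetical example: 90-degree rotation around the z axis plus a translation.
R = [[0.0, -1.0, 0.0],
     [1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0]]
T = [10.0, -20.0, -30.0]
voxsize = [1.0, 1.0, 1.2]

p_mri = voxel_to_mri([128, 128, 128], voxsize)  # approximately [128, 128, 153.6]
p_scs = mri_to_scs(R, T, p_mri)                 # approximately [-118, 108, 123.6]
origin = scs_origin_in_mri(R, T)                # [20.0, 10.0, 30.0]
```

The same affine form applies to the linear NCS fields (R and T from MRI to MNI coordinates), except that an affine MNI fit is not guaranteed to be a pure rotation, so its inverse cannot simply use the transpose.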

Useful functions

/toolbox/io/in_mri_bst(MriFile): Read a Brainstorm MRI file and compute the missing fields.

/toolbox/io/in_mri(MriFile, FileFormat=[]): Read an MRI file (format is auto-detected).

/toolbox/io/in_mri_*.m: Low-level functions for reading all the file formats.

/toolbox/anatomy/mri_*.m: Routines for manipulating MRI volumes.

/toolbox/gui/view_mri(MriFile, ...): Display an imported MRI in the MRI viewer.

/toolbox/gui/view_mri_3d(MriFile, ...): Display an imported MRI in a 3D figure.
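For parcellation volumes, the Labels field described above maps each integer voxel value to a region name and a color; this is what lets the MRI Viewer display the name of the region under the cursor. Below is a minimal Python sketch of that lookup. It assumes the volume is available as nested lists indexed cube[x][y][z] and the Labels rows as (value, name, [r, g, b]) tuples; the helper names are hypothetical.

```python
LABELS = [
    (0, "Background", [0, 0, 0]),
    (1, "Precentral L", [203, 142, 203]),
]


def build_label_table(labels):
    """Dictionary: integer label -> (region name, RGB color)."""
    return {int(value): (name, tuple(rgb)) for value, name, rgb in labels}


def label_at(cube, voxel, table):
    """Return (value, name) for a 1-indexed voxel (x, y, z) of a parcellation.

    The name is None when the value is not listed in the table.
    """
    x, y, z = voxel
    value = int(cube[x - 1][y - 1][z - 1])  # Brainstorm voxel numbers are 1-indexed
    entry = table.get(value)
    return value, (entry[0] if entry else None)


table = build_label_table(LABELS)
cube = [[[0, 1], [1, 5]],
        [[0, 0], [0, 1]]]
print(label_at(cube, (1, 1, 2), table))  # (1, 'Precentral L')
```

A value that is missing from Labels (5 in this toy cube) is reported without a name rather than raising an error, which keeps the lookup usable on partially labeled volumes.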

On the hard drive: Surface

Right-click on any cortex surface > File > View file contents:

Structure of the surface files: tess_*.mat

Atlas: Array of structures, each entry is one menu in the drop-down list in the Scout tab.

Name: Label of the atlas (reserved names: "User scouts", "Structures", "Source model")

Scouts: List of regions of interest in this atlas, see the Scout tutorial.

Comment: String displayed in the database explorer to represent the file.

Curvature: [Nvertices x 1], curvature value at each point.

Faces: [Nfaces x 3], triangles constituting the surface mesh.

History: List of operations performed on this file (menu File > View file history).

iAtlas: Index of the atlas that is currently selected for this surface.

Reg: Structure with registration information, used to interpolate the subject's maps on a template.

Sphere.Vertices: Location of the surface vertices on the FreeSurfer registered spheres.

Square.Vertices: Location of the surface vertices in the BrainSuite atlas.

AtlasSquare.Vertices: Corresponding vertices in the high-resolution BrainSuite atlas.

SulciMap: [Nvertices x 1], binary mask marking the bottom of the sulci (1=displayed as darker).

tess2mri_interp: [Nvoxels x Nvertices] sparse interpolation matrix MRI<=>surface.

VertConn: [Nvertices x Nvertices] Sparse adjacency matrix, VertConn(i,j)=1 if i and j are neighbors.

Vertices: [Nvertices x 3], coordinates (x,y,z) of all the points of the surface, in SCS coordinates.

VertNormals: [Nvertices x 3], direction (x,y,z) of the normal to the surface at each vertex.
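Fields such as VertNormals can be derived from Vertices and Faces. A common approach, shown in the Python sketch below, is to sum the normals of the triangles around each vertex (the cross product of two triangle edges has a length equal to twice the triangle area, so larger triangles weigh more) and then normalize the result. This is a generic technique, not necessarily the exact computation Brainstorm performs. It assumes 0-based face indices (Brainstorm's Faces field uses Matlab's 1-based indexing) and triangles ordered counter-clockwise when seen from outside, so that the normals point outward.

```python
import math


def cross(u, v):
    """Cross product of two 3D vectors."""
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]


def vertex_normals(vertices, faces):
    """Unit normal at each vertex: normalized sum of area-weighted face normals."""
    acc = [[0.0, 0.0, 0.0] for _ in vertices]
    for a, b, c in faces:
        pa, pb, pc = vertices[a], vertices[b], vertices[c]
        e1 = [pb[k] - pa[k] for k in range(3)]
        e2 = [pc[k] - pa[k] for k in range(3)]
        n = cross(e1, e2)  # length = 2 * triangle area
        for idx in (a, b, c):
            for k in range(3):
                acc[idx][k] += n[k]
    normals = []
    for n in acc:
        norm = math.sqrt(sum(x * x for x in n))
        # Vertices that belong to no face get a zero vector.
        normals.append([x / norm for x in n] if norm > 0 else [0.0, 0.0, 0.0])
    return normals


verts = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [5.0, 5.0, 5.0]]
normals = vertex_normals(verts, [(0, 1, 2)])
```

For a single counter-clockwise triangle in the xy plane, its three vertices get the normal (0, 0, 1), and the unused fourth vertex gets a zero vector.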

Useful functions

/toolbox/io/in_tess_bst(SurfaceFile): Read a Brainstorm surface file and compute the missing fields.

/toolbox/io/in_tess(TessFile, FileFormat=[], sMri=[]): Read a surface file (format is auto-detected).

/toolbox/io/in_tess_*.m: Low-level functions for reading all the file formats.

/toolbox/anatomy/tess_*.m: Routines for manipulating surfaces.

/toolbox/gui/view_surface(SurfaceFile, ...): Display an imported surface in a 3D figure.

/toolbox/gui/view_surface_data(SurfaceFile, OverlayFile, ...): Display a surface with a source map.

/toolbox/gui/view_surface_matrix(Vertices, Faces, ...): Display a mesh in a 3D figure.

Tutorial 4: Channel file / MEG-MRI coregistration

Authors: Francois Tadel, Elizabeth Bock, Sylvain Baillet

The anatomy of your subject is ready. Before we can start looking at the MEG/EEG recordings, we need to make sure that the sensors (electrodes, magnetometers or gradiometers) are properly aligned with the MRI and the surfaces of the subject.

In this tutorial, we will start with a detailed description of the experiment and the files that were recorded, then we will link the original CTF files to the database in order to get access to the sensors positions, and finally we will explore the various options for aligning these sensors on the head of the subject.


License

This dataset (MEG and MRI data) was collected by the MEG Unit Lab, McConnell Brain Imaging Center, Montreal Neurological Institute, McGill University, Canada. The original purpose was to serve as a tutorial data example for the Brainstorm software project. It is presently released in the Public Domain, and is not subject to copyright in any jurisdiction.

We would appreciate though that you reference this dataset in your publications: please acknowledge its authors (Elizabeth Bock, Peter Donhauser, Francois Tadel and Sylvain Baillet) and cite the Brainstorm project seminal publication.

Presentation of the experiment

Experiment

- One subject, two acquisition runs of 6 minutes each.

- Subject stimulated binaurally with intra-aural earphones (air tubes+transducers), eyes open and looking at a fixation cross on a screen.

- Each run contains:

- 200 regular beeps (440Hz).

- 40 easy deviant beeps (554.4Hz, 4 semitones higher).

- Random inter-stimulus interval: between 0.7s and 1.7s, uniformly distributed.

- The subject presses a button when detecting a deviant with the right index finger.

- Auditory stimuli generated with the Matlab Psychophysics toolbox.

The specifications of this dataset were discussed initially on the FieldTrip bug tracker:

http://bugzilla.fieldtriptoolbox.org/show_bug.cgi?id=2300.

MEG acquisition

Acquisition at 2400Hz, with a CTF 275 system, subject in sitting position

- Recorded at the Montreal Neurological Institute in December 2013

- Anti-aliasing low-pass filter at 600Hz, files saved with the 3rd order gradient

Downsampled to a lower sampling rate, from 2400Hz to 600Hz: the only purpose of this resampling is to make the introduction tutorials easier to follow on a regular computer.

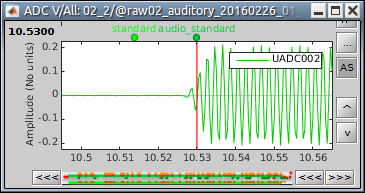

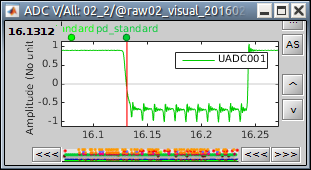

- Recorded channels (340):

- 1 Stim channel indicating the presentation times of the audio stimuli: UPPT001 (#1)

- 1 Audio signal sent to the subject: UADC001 (#316)

- 1 Response channel recording the finger taps in response to the deviants: UDIO001 (#2)

- 26 MEG reference sensors (#5-#30)

- 274 MEG axial gradiometers (#31-#304)

- 2 EEG electrodes: Cz, Pz (#305 and #306)

- 1 ECG bipolar (#307)

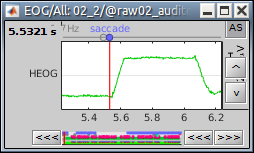

- 2 EOG bipolar (vertical #308, horizontal #309)

- 12 Head tracking channels: Nasion XYZ, Left XYZ, Right XYZ, Error N/L/R (#317-#328)

- 20 Unused channels (#3, #4, #310-#315, #329-#340)

- 3 datasets:

S01_AEF_20131218_01_600Hz.ds: Run #1, 360s, 200 standard + 40 deviants

S01_AEF_20131218_02_600Hz.ds: Run #2, 360s, 200 standard + 40 deviants

S01_Noise_20131218_02_600Hz.ds: Empty room recordings, 30s long

- Average reaction times for the button press after a deviant tone:

Run #1: 515ms +/- 108ms

Run #2: 596ms +/- 134ms
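The channel list above can be cross-checked with a few lines of code: the index ranges should cover all 340 channels exactly once, and the durations and sampling rates give the nominal number of time samples per file. The Python sketch below encodes the ranges from this page (the group names are ours). It is only a consistency check, not a reader for the CTF files.

```python
# Channel index ranges (1-based, inclusive), copied from the list above.
CHANNEL_GROUPS = {
    "Stim (UPPT001)": [(1, 1)],
    "Response (UDIO001)": [(2, 2)],
    "MEG references": [(5, 30)],
    "MEG axial gradiometers": [(31, 304)],
    "EEG (Cz, Pz)": [(305, 306)],
    "ECG": [(307, 307)],
    "EOG (vertical, horizontal)": [(308, 309)],
    "Audio (UADC001)": [(316, 316)],
    "Head tracking": [(317, 328)],
    "Unused": [(3, 4), (310, 315), (329, 340)],
}


def group_size(ranges):
    """Number of channels covered by a list of inclusive (first, last) ranges."""
    return sum(last - first + 1 for first, last in ranges)


def channel_group(index):
    """Name of the group containing a 1-based channel index, or None."""
    for name, ranges in CHANNEL_GROUPS.items():
        if any(first <= index <= last for first, last in ranges):
            return name
    return None


def n_samples(duration_s, fs_hz):
    """Nominal number of time samples for a recording of a given duration."""
    return round(duration_s * fs_hz)


total = sum(group_size(r) for r in CHANNEL_GROUPS.values())  # 340
```

Every index from 1 to 340 falls into exactly one group. Each 360s run nominally contains 216000 samples at 600Hz (864000 at the original 2400Hz, a factor of 4), and the 30s noise recording contains 18000 samples at 600Hz.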

Head shape and fiducial points

3D digitization using a Polhemus Fastrak device driven by Brainstorm (S01_20131218_01.pos)

More information: Digitize EEG electrodes and head shape

- The output file is copied to each .ds folder and contains the following entries:

- The position of the center of CTF coils.

The position of the anatomical references we use in Brainstorm:

Nasion and the left/right tragus-helix junctions, as illustrated here.

- Around 150 head points distributed on the hard parts of the head (no soft tissues).

Link the raw files to the database

Switch to the "functional data" view.

Right-click on the subject folder > Review raw file

Select the file format: "MEG/EEG: CTF (*.ds...)"

Select all the .ds folders in: sample_introduction/data

- In the CTF file format, each session of recordings is saved in a folder with the extension "ds". The different types of information collected during each session are saved as different files in this folder (event markers, sensor definitions, bad segments, MEG recordings).

Refine registration now? YES

This operation is detailed in the next section.

Percentage of head points to ignore: 0

If you have some points that were not digitized correctly and that appear far from the head surface, you should increase this value in order to exclude them from the fit.

Automatic registration

The registration between the MRI and the MEG (or EEG) is done in two steps. We start with a first approximation based on three reference points, then we refine it with the full head shape of the subject.

Step 1: Fiducials

The initial registration is based on the three fiducial points that define the Subject Coordinate System (SCS): nasion, left ear, right ear. You marked these three points in the MRI viewer in a previous tutorial.

These same three points were also marked before the acquisition of the MEG recordings. The person who recorded this subject digitized their positions with a tracking device (such as a Polhemus Fastrak or Patriot). The positions of these points are saved in the dataset.

- When we bring the MEG recordings into the Brainstorm database, we align them on the MRI using these fiducial points: we match the NAS/LPA/RPA points digitized with the ones we located in the MRI Viewer.

This registration method gives approximate results. It can be good enough in some cases, but not always, because of the imprecision of the measurements: the tracking system is not always very precise, the points are not always easy to identify on the MRI slices, and the very definition of these points does not offer millimeter precision. All this combined, it is easy to end up with a registration error of 1cm or more.

The quality of the source analysis we will perform later is highly dependent on the quality of the registration between the sensors and the anatomy. If we start with a 1cm error, this error will be propagated everywhere in the analysis.
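Step 1 works because the SCS is defined only from the three fiducials, so the same anatomical point gets the same SCS coordinates whether it is expressed in MRI or in digitizer coordinates. The Python sketch below illustrates this. It assumes the SCS convention described on Brainstorm's coordinate systems page (origin midway between LPA and RPA, X axis through the nasion, Y axis toward LPA in the fiducial plane, Z axis pointing up); the function names, the fiducial positions and the digitizer frame are hypothetical, and Brainstorm's own code may differ in details.

```python
import math


def _sub(a, b):
    return [x - y for x, y in zip(a, b)]


def _cross(u, v):
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]


def _normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    if n == 0:
        raise ValueError("degenerate fiducials")
    return [x / n for x in v]


def scs_from_fiducials(nas, lpa, rpa):
    """Return (R, T) such that p_scs = R * p + T, under the assumed SCS convention."""
    origin = [(l + r) / 2.0 for l, r in zip(lpa, rpa)]
    vx = _normalize(_sub(nas, origin))           # X: toward the nasion
    vz = _normalize(_cross(vx, _sub(lpa, rpa)))  # Z: normal to the fiducial plane, up
    vy = _cross(vz, vx)                          # Y: completes a right-handed frame (toward LPA)
    R = [vx, vy, vz]
    T = [-sum(R[i][k] * origin[k] for k in range(3)) for i in range(3)]
    return R, T


def to_scs(R, T, p):
    return [sum(R[i][k] * p[k] for k in range(3)) + T[i] for i in range(3)]


# Hypothetical fiducials marked in the MRI (mm, RAS orientation).
nas_mri, lpa_mri, rpa_mri = [100.0, 200.0, 120.0], [30.0, 110.0, 100.0], [170.0, 110.0, 100.0]
R_mri, T_mri = scs_from_fiducials(nas_mri, lpa_mri, rpa_mri)
lpa_scs = to_scs(R_mri, T_mri, lpa_mri)  # approximately [0, 70, 0]
```

Computing (R, T) once from the MRI fiducials and once from the digitized fiducials, then converting both datasets to SCS, is what brings the sensors and the anatomy into a common frame. Any error in marking the fiducials directly shifts or tilts this frame, which is why step 2 is needed.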

Step 2: Head shape

To improve this registration, we recommend that you always digitize additional points on the head of the subjects: around 100 points uniformly distributed on the hard parts of the head (skull from nasion to inion, eyebrows, ear contour, nose crest). Avoid marking points on the softer parts (cheeks or neck) because they may have a different shape when the subject is seated on the Polhemus chair or lying down in the MRI. More information on digitizing head points.

- We have two versions of the full head shape of the subject: one coming from the MRI (the head surface, represented in grey in the figures below) and one coming from the Polhemus digitizer at the time of the MEG/EEG acquisition (represented as green dots).

The algorithm that is executed when you choose the option "Refine registration with head points" is an iterative algorithm that tries to find a better fit between the two head shapes (grey surface and green dots), to improve the initial NAS/LPA/RPA registration. This technique usually improves the registration between the MRI and the MEG/EEG sensors significantly.

- Tolerance: If you enter a percentage of head points to ignore greater than zero, the fit is performed once with all the points, then the head points farthest from the head surface are removed, and the fit is executed a second time with the remaining head points.

- The two pictures below represent the registration before and after this automatic head shape registration (left=step 1, right=step 2). The yellow surface represents the MEG helmet: the solid plastic surface in which the subject places his/her head. If you ever see the grey head surface intersecting this yellow helmet surface, there is obviously something wrong with the registration.

At the end of the import process, you can close the figure that shows the final registration.

A window reporting the distance between the scalp and the head points is displayed. You can use these values as references for estimating whether you can trust the automatic registration or not. Whether the distances are correct or abnormal depends on your digitization setup.
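The distance report and the Tolerance option both rely on the distance between each head point and the scalp surface. The Python sketch below shows the idea under simplifying assumptions: it uses the distance to the nearest scalp vertex (an upper bound on the true point-to-surface distance, which bst_surfdist.m is meant to compute more accurately), coordinates in millimeters, and hypothetical function names. It then selects which points would be kept for the second fit when a percentage of the farthest points is ignored.

```python
import math


def nearest_vertex_distance(point, scalp_vertices):
    """Distance from a head point to the closest scalp vertex.

    Upper bound on the true point-to-surface distance, because the closest
    point of the surface may lie inside a triangle.
    """
    return min(math.dist(point, v) for v in scalp_vertices)


def head_point_distances(points, scalp_vertices):
    return [nearest_vertex_distance(p, scalp_vertices) for p in points]


def points_to_keep(distances, percent_ignore):
    """Indices of the head points kept for the second fit.

    The `percent_ignore` percent of points farthest from the scalp are dropped.
    """
    n = len(distances)
    n_remove = int(round(n * percent_ignore / 100.0))
    farthest_first = sorted(range(n), key=lambda i: distances[i], reverse=True)
    removed = set(farthest_first[:n_remove])
    return [i for i in range(n) if i not in removed]


def distance_report(distances):
    """Mean, standard deviation (population) and maximum of the distances."""
    mean = sum(distances) / len(distances)
    std = math.sqrt(sum((d - mean) ** 2 for d in distances) / len(distances))
    return {"mean": mean, "std": std, "max": max(distances)}


scalp = [[0.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
dists = head_point_distances([[0.0, 0.0, 3.0], [0.0, 0.0, 1.5]], scalp)  # [2.0, 0.5]
```

For example, with distances [1, 5, 2, 9, 3] mm and 40% of points to ignore, the two farthest points (9 and 5 mm) are dropped and the fit would be repeated with the remaining three.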

Defaced volumes

When processing your own datasets, if your MRI images are defaced, you might need to proceed in a slightly different way. The de-identification procedures remove the nose and other facial features from the MRI. If your digitized head shape includes points on the missing parts of the head, this may cause a significant bias in the automatic registration. In this case, it is advised to remove the head points below the nasion before running the automatic registration, as illustrated in this tutorial.
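When the head points and the nasion are expressed in a frame where +Z points toward the top of the head (such as SCS), removing the points below the nasion amounts to a threshold on the Z coordinate. A minimal Python sketch, for illustration only: the function name and the optional margin parameter are hypothetical, and in practice you would do this from the Brainstorm interface rather than with custom code.

```python
def remove_points_below_nasion(head_points, nasion, margin_mm=0.0):
    """Keep the head points whose Z is not lower than the nasion Z minus a margin.

    Assumes all coordinates are in millimeters, in a frame where +Z points
    toward the top of the head (e.g. SCS).
    """
    z_min = nasion[2] - margin_mm
    return [p for p in head_points if p[2] >= z_min]


points = [[80.0, 0.0, 40.0], [70.0, 10.0, -25.0], [90.0, -5.0, 0.0]]
kept = remove_points_below_nasion(points, nasion=[95.0, 0.0, 0.0])
# kept == [[80.0, 0.0, 40.0], [90.0, -5.0, 0.0]]
```

A positive margin keeps some points slightly below the nasion, which can be useful if the defacing only removed the lower part of the face.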

New files and folders

Many new files are now visible in the database explorer:

- Three folders representing the three MEG datasets that we linked to the database. Note the tag "raw" in the icon of the folders: it means that these folders contain links to continuous recordings.

S01_AEF_20131218_01_600Hz: Subject01, Auditory Evoked Field, 18-Dec-2013, run #01

S01_AEF_20131218_02_600Hz: Subject01, Auditory Evoked Field, 18-Dec-2013, run #02

S01_Noise_20131218_02_600Hz: Subject01, Noise recordings (no subject in the MEG)

- All three have been downsampled from 2400Hz to 600Hz.

Each of these new folders shows two elements:

Channel file: Defines the types and names of channels that were recorded, the position of the sensors, the head shape and other various details. This information has been read from the MEG datasets and saved as a new file in the database. The total number of data channels recorded in the file is indicated in parentheses (340).

Link to raw file: Link to the original file that you imported. All the relevant meta-data was read from the MEG dataset and copied inside the link itself (sampling rate, number of samples, event markers and other details about the acquisition session). But no MEG/EEG recordings were read or copied to the database. If we open this file, the values are read directly from the original files in the .ds folder.

Review vs Import

When trying to bring external data into the Brainstorm environment, a common source of confusion is the difference between the two popup menus Review and Import:

Review raw file: Allows you to create a link to your original continuous data file. It reads the header and sensor information from the file but does not copy the recordings in the database. Most of the artifact cleaning should be done directly using these links.

Import MEG/EEG: Extracts segments of recordings (epochs) from an external file and saves copies of them in the Brainstorm database. Use this menu only once you have fully pre-processed your recordings, or if you are importing files that are already epoched or averaged.

Display the sensors

Right-click on the CTF channel file and try all the display menus:

CTF Helmet: Shows a surface that represents the inner surface of the MEG helmet.

CTF coils (MEG): Displays the MEG head coils of this CTF system. They are all axial gradiometers, and only the coils closest to the head are represented. The small squares do not represent the real shape of the sensors (the CTF coils are circular loops) but an approximation used in the forward model computation.

CTF coils (ALL): Displays all the MEG sensors, including the reference magnetometers and gradiometers. The orientation of the coils is represented with a red segment.

MEG: MEG sensors are represented as small white dots and can be selected by clicking on them.

ECG / EOG: Ignore these menus, we do not have proper positions for these electrodes.

Misc: Shows the approximate positions of the EEG electrodes (Cz and Pz).

Use the [Close all] button to close all the figures when you are done.

Sensor map

Here is a map with the full list of sensor names for this CTF system; it can be useful for navigating in the recordings.

Manual registration

If the registration you get with the automatic alignment is incorrect, or if there was an issue when you digitized the position of the fiducials or the head shape, you may have to manually realign the sensors on the head. Right-click on the channel file > MRI Registration:

Check: Shows all the available information that may help to verify the registration.

Edit: Opens a window where you can manually move the MEG helmet relative to the head.

Read the tooltips of the toolbar buttons to see what is available, select an operation, and then right-click and move the mouse up/down to apply it. From a scientific point of view this is not a rigorous operation, but sometimes it is much better than using wrong default positions.

IMPORTANT: This refinement can only be used to better align the head surface with the digitized points. It cannot be used to correct for a subject who was poorly positioned in the helmet (i.e., you cannot move the helmet closer to the subject's head if they were not seated that way to begin with).

Refine using head points: Runs the automatic registration described earlier.
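Moving the helmet relative to the head amounts to applying a rigid transformation, which is a rotation plus a translation, to every sensor position. The following Python sketch illustrates this idea only. It assumes the transformation is written as a 4x4 homogeneous matrix and it rotates about the z axis only. The function names and numeric values are hypothetical, and this is not Brainstorm's own code.

```python
import math

def rigid_transform(angle_deg, translation):
    """Build a 4x4 homogeneous matrix: rotation about z, then translation (meters)."""
    a = math.radians(angle_deg)
    tx, ty, tz = translation
    return [
        [math.cos(a), -math.sin(a), 0.0, tx],
        [math.sin(a),  math.cos(a), 0.0, ty],
        [0.0,          0.0,         1.0, tz],
        [0.0,          0.0,         0.0, 1.0],
    ]

def apply_transform(T, points):
    """Apply T to a list of (x, y, z) points, using homogeneous coordinates."""
    out = []
    for x, y, z in points:
        p = (x, y, z, 1.0)
        out.append(tuple(sum(T[i][j] * p[j] for j in range(4)) for i in range(3)))
    return out

# Hypothetical sensor positions (meters), not taken from the tutorial dataset
sensors = [(0.10, 0.0, 0.05), (0.0, 0.10, 0.05)]
T = rigid_transform(90.0, (0.0, 0.0, 0.005))  # rotate 90 degrees, lift by 5 mm
moved = apply_transform(T, sensors)
```

A rigid transformation preserves distances between sensors. Only the placement of the whole array relative to the head changes.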

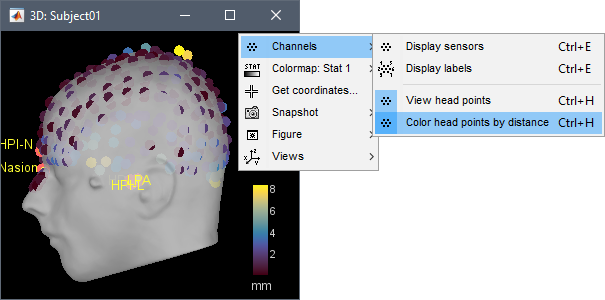

In the 3D views, the head points can be color-coded to represent their distance to the scalp: right-click on the figure > Channels > Color head points by distance (shortcut CTRL+H). The colorbar indicates, in millimeters, the distance of each point to the scalp, as computed by bst_surfdist.m.
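bst_surfdist.m computes the actual distances. The following Python sketch is only a rough illustration of what the colors encode. It approximates each head point's distance by its distance to the nearest scalp vertex, which is an assumed simplification. On a coarse mesh this overestimates the true point-to-surface distance. The vertices and head points are hypothetical.

```python
import math

def nearest_vertex_distance_mm(head_points, scalp_vertices):
    """Distance (mm) from each head point to its closest scalp vertex.

    Coordinates are in meters. This is a nearest-vertex approximation,
    not the point-to-surface computation performed by bst_surfdist.m.
    """
    return [min(math.dist(p, v) for v in scalp_vertices) * 1000.0
            for p in head_points]

# Hypothetical scalp vertices and digitized head points (meters)
scalp = [(0.0, 0.0, 0.09), (0.09, 0.0, 0.0), (0.0, 0.09, 0.0)]
points = [(0.0, 0.0, 0.092), (0.0905, 0.0, 0.0)]
dist_mm = nearest_vertex_distance_mm(points, scalp)
```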

There is nothing to change here, but remember to always check the registration scalp/sensors.

Multiple runs and head positions

Between two acquisition runs, the subject may move in the MEG helmet, so the position of the MEG sensors relative to the head surface changes. At the beginning of each MEG run, the positions of the head localization coils are detected and used to update the position of the MEG sensors.

- The two AEF runs 01 and 02 were acquired successively. The position of the subject's head in the MEG helmet was estimated twice, once at the beginning of each run.

To visually evaluate the displacement between the two runs, select all the channel files you want to compare at the same time (those for runs 01 and 02), then right-click > Display sensors > MEG.

- Typically, we would like to group the trials coming from multiple acquisition runs. However, because of the subject's movements between runs, it is usually not possible to directly compare the MEG values between runs. The sensors may not capture the activity coming from the same regions of the brain.

- You have three options if you consider grouping information from multiple runs:

Method 1: Process all the runs separately and average between runs at the source level: The most accurate option, but it requires more work, computation time and storage.

Method 2: Ignore movements between runs: This can be acceptable if the displacements are really minimal. It is less accurate, but much faster to process and easier to manipulate.

Method 3: Properly co-register the runs using the process Standardize > Co-register MEG runs: Can be a good option for displacements under 2 cm.

Warning: This method has not been fully evaluated on our side; use it at your own risk. Also, it does not work correctly if different SSP projectors were calculated for the different runs.

- In this tutorial, we will illustrate only method 1: runs are not co-registered.
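To put a number on the displacement you see in the figure, you can compare the position of each sensor in the two channel files. The following Python sketch assumes that positions are expressed in head (SCS) coordinates, so any head movement appears as sensor motion. It reports the mean and maximum displacement against the 2 cm limit quoted for Method 3. The channel names are real CTF-style labels, but the coordinates and helper names are hypothetical.

```python
import math

def sensor_displacements(run_a, run_b):
    """Euclidean displacement (meters) of each sensor present in both runs.

    run_a and run_b map channel names to (x, y, z) positions in head
    coordinates, so head movement shows up as sensor displacement.
    """
    common = sorted(set(run_a) & set(run_b))
    return {name: math.dist(run_a[name], run_b[name]) for name in common}

def summarize(disp, limit_m=0.02):
    """Mean/max displacement in mm, and whether the max stays under limit_m."""
    values = list(disp.values())
    return {
        "mean_mm": 1000.0 * sum(values) / len(values),
        "max_mm": 1000.0 * max(values),
        "below_limit": max(values) < limit_m,
    }

# Hypothetical positions (meters) of two sensors in runs 01 and 02
run01 = {"MLC11": (0.050, 0.030, 0.090), "MRC11": (0.050, -0.030, 0.090)}
run02 = {"MLC11": (0.053, 0.030, 0.094), "MRC11": (0.050, -0.030, 0.093)}
stats = summarize(sensor_displacements(run01, run02))
```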

Edit the channel file

This menu displays a table with all the information about the individual channels. You can edit all the values.

Right-click on the channel file of the first folder (AEF#01) > Edit channel file:

Index: Index of the channel in the data matrix. Can be edited to reorder the channels.

Name: Name that was given to the channel by the acquisition device.

Type: Type of information recorded (MEG, EEG, EOG, ECG, EMG, Stim, Other, "Delete", etc.)

- You may have to change the Type for some channels. For instance if an EOG channel was saved as a regular EEG channel, you have to change its type to prevent it from being used in the source estimation.

- To delete a channel from this file: select "(Delete)" in the type column.

Group: Used to define sub-groups of channels of the same type.

- SEEG/ECOG: Each group of contacts can represent a depth electrode or a grid, and it can be plotted separately. A separate average reference montage is calculated for each group.

- MEG/EEG: Not used.

Comment: Additional description of the channel.

- MEG sensors: Do not edit this information if it is not empty.

Loc: Position of the sensor (x,y,z) in SCS coordinates. Do not modify this from the interface. One column per coil and per integration point (information useful for the forward modeling).

Orient: Orientation of the MEG coils (x,y,z) in SCS coordinates. One column per Loc column.

Weight: When there is more than one coil or integration point, the Weight field indicates the multiplication factor to apply to each of these points.

- To edit the type or the comment for multiple sensors at once, select them all then right-click.

- Close this figure, do not save the modifications if you made any.
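The following Python sketch is a toy model of this table, not Brainstorm's actual data structure, which is a MATLAB structure array. It represents each channel as a dictionary holding Name, Type and Weight. The sketch drops the channels whose type was set to "(Delete)". It then shows how Weight combines the values measured at the integration points of one sensor. The channel entries, the field values and the `sensor_output` helper are all hypothetical.

```python
def keep_channels(channels):
    """Drop channels whose Type was set to '(Delete)' in the editor."""
    return [ch for ch in channels if ch["Type"] != "(Delete)"]

def sensor_output(channel, projected_field):
    """Weighted sum over the integration points of one sensor.

    projected_field[i] is the field at Loc column i, already projected on
    the matching Orient column; Weight[i] is the factor for that point.
    """
    assert len(channel["Weight"]) == len(projected_field)
    return sum(w * b for w, b in zip(channel["Weight"], projected_field))

channels = [
    # Hypothetical axial gradiometer: two coils, one integration point each,
    # with opposite weights so that a uniform field cancels out.
    {"Name": "MLC11", "Type": "MEG", "Weight": [1.0, -1.0]},
    {"Name": "EEG057", "Type": "(Delete)", "Weight": [1.0]},
    {"Name": "EOG1", "Type": "EOG", "Weight": [1.0]},
]
kept = keep_channels(channels)
uniform = sensor_output(channels[0], [2e-12, 2e-12])  # distant source: cancels
local = sensor_output(channels[0], [5e-12, 1e-12])    # nearby source: nonzero
```

The opposite weights illustrate why an axial gradiometer is mostly insensitive to distant, spatially uniform fields.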

On the hard drive

Some other fields are present in the channel file that cannot be accessed with the Channel editor window. You can explore these other fields with the File menu, selecting View file contents or Export to Matlab, as presented in the previous tutorial.

Structure of the channel files: channel_*.mat

Comment : String that is displayed in the Brainstorm database explorer.

MegRefCoef: Noise compensation matrix for CTF and 4D MEG recordings, based on reference sensors located far away from the head.

Projector: SSP/ICA projectors used for artifact cleaning purposes. See the SSP tutorial.

TransfMeg / TransfMegLabel: Transformations that were applied to the positions of the MEG sensors to bring them into the Brainstorm coordinate system.

TransfEeg / TransfEegLabel: Same for the position of the EEG electrodes.

HeadPoints: Extra head points that were digitized with a tracking system.

Channel: An array that defines each channel individually (see previous section).

Clusters: An array of structures that defines clusters of channels, with the following fields:

Sensors: Cell-array of channel names

Label: String, name of the cluster

Color: RGB values between 0 and 1 [R,G,B]

Function: String, cluster function name (default: 'Mean')

History: Describes all the operations that were performed with Brainstorm on this file. To get a better view of this piece of information, use the menu File > View file history.

IntraElectrodes: Definition of iEEG devices, documented in the SEEG tutorial.
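The following Python sketch makes two of these fields more concrete, using plain lists. First, it applies a noise-compensation matrix in the form we assume MegRefCoef takes. That assumed form has one row per MEG channel and one column per reference sensor, and the weighted sum of reference signals is subtracted from each MEG channel. Second, it evaluates a cluster with the default 'Mean' function. All values, channel names and helper names are hypothetical.

```python
def apply_ref_compensation(meg, ref, coef):
    """Return meg - coef @ ref, computed sample by sample.

    meg:  one list of samples per MEG channel
    ref:  one list of samples per reference sensor
    coef: n_meg x n_ref matrix (assumed layout of MegRefCoef)
    """
    n_samples = len(meg[0])
    out = []
    for i, row in enumerate(meg):
        out.append([
            row[t] - sum(coef[i][k] * ref[k][t] for k in range(len(ref)))
            for t in range(n_samples)
        ])
    return out

def cluster_mean(cluster, names, data):
    """Average the time series of the channels listed in cluster['Sensors']."""
    rows = [data[names.index(s)] for s in cluster["Sensors"]]
    return [sum(col) / len(rows) for col in zip(*rows)]

names = ["MLC11", "MRC11"]
meg = [[1.0, 2.0, 3.0], [1.5, 1.5, 1.5]]
ref = [[1.0, 1.0, 1.0]]   # one hypothetical reference channel
coef = [[0.5], [0.5]]     # hypothetical compensation weights
clean = apply_ref_compensation(meg, ref, coef)

cluster = {"Sensors": ["MLC11", "MRC11"], "Label": "Central",
           "Color": [1, 0, 0], "Function": "Mean"}
avg = cluster_mean(cluster, names, clean)
```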

Useful functions

/toolbox/io/import_channel.m: Read a channel file and save it in the database.

/toolbox/io/in_channel_*.m: Low-level functions for reading all the file formats.

/toolbox/io/in_bst_channel.m: Read a channel file saved in the database.

/toolbox/sensors/channel_*.m: Routines for manipulating channel files.

/toolbox/gui/view_channels(ChannelFile, Modality, ...): Display the sensors in a 3D figure.

Additional documentation

Forum: Import the position of SEEG/ECOG contacts: Post #2206, Post #1958, Post #2357

Tutorial 5: Review continuous recordings

Authors: Francois Tadel, Elizabeth Bock, John C Mosher, Sylvain Baillet

Contents

Open the recordings

Let's look at the first file in the list: AEF#01.

Right-click on the Link to raw file. Below the first two menus, you have the list of channel types:

MEG: 274 axial gradiometers

ECG: 1 electrocardiogram, bipolar electrode across the chest

EOG: 2 electrooculograms (vertical and horizontal)

Misc: EEG electrodes Cz and Pz

ADC A: Unused

ADC V: Auditory signal sent to the subject

DAC: Unused

FitErr: Fitting error when trying to localize the three head localization coils (NAS, LPA, RPA)

HLU: Head Localizing Unit, displacements in the three directions (x,y,z) for the three coils

MEG REF: 26 reference sensors used for removing the environmental noise

Other: Unused

Stim: Stimulation channel, records the stim triggers generated by the Psychophysics toolbox and other input channels, such as button presses generated by the subject

SysClock: System clock, unused

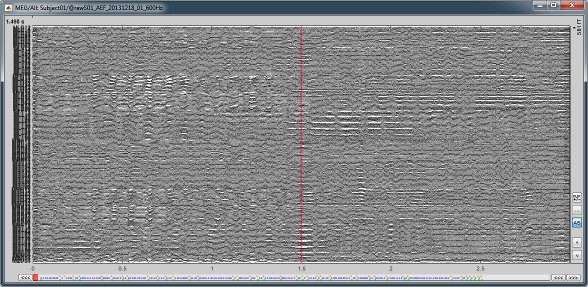

Select > MEG > Display time series (or double-click on the file).

It will open a new figure and enable many controls in the Brainstorm window.

Navigate in time

The files we have imported here are shown the way they have been saved by the CTF MEG system: as contiguous epochs of 1 second each. These epochs are not related to the stimulus triggers or the subject's responses; they are just a way of saving the files. We will first explore the recordings in this epoched mode before switching to the continuous mode.

From the time series figure

Click: Click on the white or grey parts of the figure to move the time cursor (red vertical line).

If you click on the signals, it selects the corresponding channels. Click again to unselect.

Shortcuts: See the tooltips in the time panel for important keyboard shortcuts:

Left arrow, right arrow, page up, page down, F3, Shift+F3, etc.

Bottom bar: The red square in the bottom bar represents the portion of the current file or epoch that is currently displayed. Right now, the whole of epoch #1 is shown. This will be more useful in the continuous mode.

Zoom: Scroll to zoom horizontally around the time cursor (mouse wheel or two-finger up/down).

[<<<] and [>>>]: Previous/next epoch or page

From the time panel

Time: [0, 998] ms is the time segment over which the first epoch is defined.

Sampling: We downsampled these files to 600 Hz for easier processing in the tutorials.

Text box: Current time, can be edited manually.

[<] and [>]: Previous/next time sample - Read the tooltip for details and shortcuts

[<<] and [>>]: Previous/next time sample (x10) - Read the tooltip for details and shortcuts

[<<<] and [>>>]: Previous/next epoch or page - Read the tooltip for details and shortcuts
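The time values above follow from the sampling rate. Assume 1-second blocks sampled at exactly 600 Hz, with the first sample at t = 0. Each block then holds 600 samples, and the last one falls at 599/600 s, about 998.3 ms. This is consistent with the first epoch being displayed as [0, 998] ms. Read with the same assumption, the [<] and [>] buttons move by one sampling period, about 1.67 ms, and [<<]/[>>] by ten periods. The following Python sketch checks these numbers:

```python
SFREQ = 600.0      # sampling rate after downsampling (Hz)
EPOCH_SEC = 1.0    # length of each saved block (s)

n_samples = round(SFREQ * EPOCH_SEC)               # samples per block
last_sample_ms = (n_samples - 1) / SFREQ * 1000.0  # time of the last sample
step_ms = 1000.0 / SFREQ                           # one sample: [<] / [>]
big_step_ms = 10 * step_ms                         # ten samples: [<<] / [>>]

print(n_samples, round(last_sample_ms, 1), round(step_ms, 3), round(big_step_ms, 2))
```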

From the page settings

Epoch: Selects the current time block that is displayed in the time series figure.

Start: Starting point of the time segment displayed in the figure. Useful in continuous mode only.

Duration: Length of this time segment. Useful in continuous mode only.

Time selection

- In the time series figure, click and drag your mouse for selecting a time segment.

- At the bottom of the figure, you will see the duration of the selected block, and min/max values.

- Useful for quickly estimating the latencies between two events, or the period of an oscillation.

To zoom into the selection: Shift+left click, middle click, or right-click > Time selection > Zoom into.

Click anywhere on the figure to cancel this time selection.
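The duration shown at the bottom of the figure can be converted into the quantities mentioned above. The following Python sketch is a small worked example with hypothetical selection bounds. We round the bounds to the 600 Hz sample grid, which is our own choice and not documented behavior of the figure. The selected duration d then gives a latency directly. If the selection spans exactly one cycle of an oscillation, its frequency is 1/d. The helper names are hypothetical.

```python
SFREQ = 600.0  # Hz

def snap_to_sample(t_sec, sfreq=SFREQ):
    """Round a time (s) to the nearest sample of the recording."""
    return round(t_sec * sfreq) / sfreq

def selection_stats(t_start, t_end, sfreq=SFREQ):
    """Duration (ms), sample count (both ends included) and frequency of one cycle."""
    start, end = snap_to_sample(t_start, sfreq), snap_to_sample(t_end, sfreq)
    duration = end - start
    return {
        "duration_ms": duration * 1000.0,
        "n_samples": round(duration * sfreq) + 1,
        "freq_if_one_cycle_hz": 1.0 / duration,
    }

# Hypothetical selection covering one alpha cycle, from 0.200 s to 0.300 s
stats = selection_stats(0.200, 0.300)
```

Here the 100 ms selection corresponds to a 10 Hz oscillation.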

Epoched vs. continuous

- The CTF MEG system can save two types of files: epoched (.ds) or continuous (_AUX.ds).

- Here we have an intermediate storage type: continuous recordings saved in "epoched" files. The files are saved as small blocks of recordings of a constant time length (1 second in this case). All these time blocks are contiguous; there is no gap between them.

- Brainstorm can consider this file either as a continuous or an epoched file. By default, it imports regular .ds folders as epoched, so we need to switch this manually.

Right-click on the "Link to raw file" for AEF#01 > Switch epoched/continuous

You should get a message: "File converted to: continuous".

Double-click on the "Link to raw file" again. Now you can navigate in the file without interruptions. The box "Epoch" is disabled and all the events in the file are displayed at once.

With the red square at the bottom of the figure, you can navigate in time (click in the middle and drag with the mouse) or change the size of the current page (click on the left or right edge of the red square and move your mouse).
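Because the 1-second blocks are contiguous, switching to the continuous mode amounts to concatenating them end to end. The following Python sketch assumes fixed-length blocks of 600 samples (1 s at 600 Hz) with no gaps. It converts a continuous sample index into an (epoch number, sample within epoch) pair and back, using 1-based epoch numbers as in "epoch #1". The function names are hypothetical.

```python
SAMPLES_PER_EPOCH = 600  # 1 s blocks at 600 Hz

def to_epoch(sample):
    """Continuous sample index -> (1-based epoch number, 0-based offset in epoch)."""
    epoch, offset = divmod(sample, SAMPLES_PER_EPOCH)
    return epoch + 1, offset

def to_continuous(epoch, offset):
    """(1-based epoch number, 0-based offset) -> continuous sample index."""
    return (epoch - 1) * SAMPLES_PER_EPOCH + offset

def concatenate(epochs):
    """Join contiguous blocks into one continuous signal."""
    signal = []
    for block in epochs:
        signal.extend(block)
    return signal

print(to_epoch(1234), to_continuous(3, 34))
```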

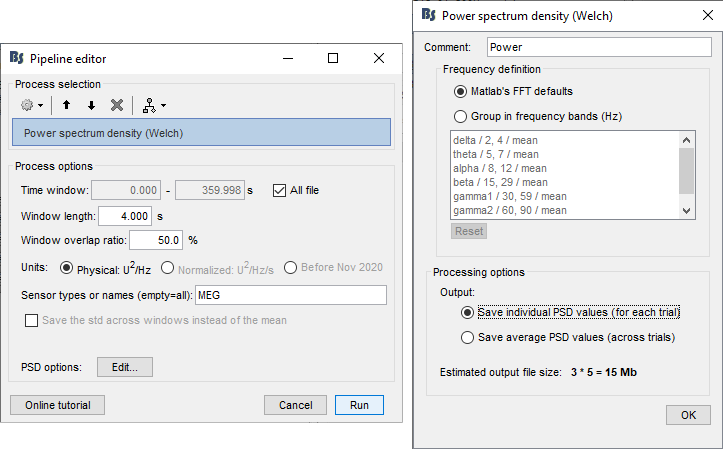

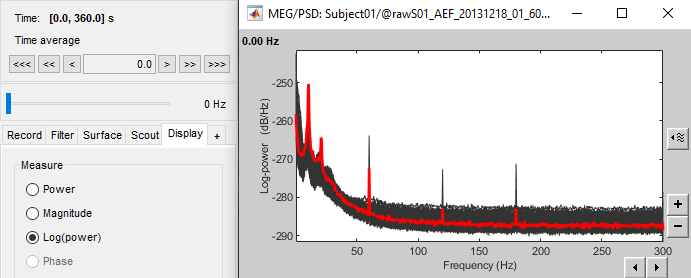

Increase the duration of the displayed window to 3 seconds (Page settings > Duration).
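With a 3-second page at 600 Hz, each page shows 1800 samples. The following Python sketch lists where consecutive pages would start. It assumes that [<<<]/[>>>] advance by exactly one page, without overlap, and it uses a hypothetical 10-second recording. It does not attempt to reproduce how Brainstorm handles the last, shorter page.

```python
import math

def page_starts(total_sec, page_sec):
    """Start times (s) of consecutive, non-overlapping pages covering the recording."""
    n_pages = math.ceil(total_sec / page_sec)
    return [i * page_sec for i in range(n_pages)]

SFREQ = 600.0
PAGE_SEC = 3.0
samples_per_page = round(SFREQ * PAGE_SEC)
starts = page_starts(10.0, PAGE_SEC)  # hypothetical 10 s recording
```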