|

Size: 9131

Comment:

|

Size: 10215

Comment:

|

| Deletions are marked like this. | Additions are marked like this. |

| Line 1: | Line 1: |

| = Tutorial 13: Decoding Conditions = ''Author: Seyed-Mahdi Khaligh-Razavi'' |

= Machine learning: Decoding / MVPA = ''Authors: Dimitrios Pantazis''', '''Seyed-Mahdi Khaligh-Razavi, Francois Tadel, '' |

| Line 4: | Line 4: |

| These set of functions allow you to do support vector machine (SVM) and linear discriminant analysis (LDA) classification on your MEG data across time. | This tutorial illustrates how to run MEG decoding (a type of multivariate pattern analysis / MVPA) using support vector machines (SVM). |

| Line 8: | Line 8: |

| == Description of the MEG data == The data contains the first 15 minutes of MEG recording for one of the subjects in the following study. The study was conducted according to the Declaration of Helsinki and approved by the local ethics committee (Institutional Review Board of the Massachusetts Institute of Technology). Informed consent was obtained from all participants. During MEG recordings participants completed an orthogonal image categorization task. Participants saw 720 stimuli (360 faces, 360 scenes) centrally-presented ( 6 degree visual angle) for 0.5 second at a time and had to decide (without responding) if scenes were indoor / outdoor and faces were male / female. Every 2 to 4 trials (randomly determined), a question mark would appear on the screen for 1 second. At this time, participants were asked to press a button to indicate the category of the last image (male/female, indoor/outdoor), and they were also allowed to blink/swallow. This task was designed to ensure participants were attentive to the images without explicitly encoding them into memory. Participants only responded during the “question mark” trials so that activity during stimulus perception was not contaminated by motor activity. During the task, participants were asked to fixate on a cross centered on the screen and their eye movements were tracked, to ensure any results were not due to differential eye movement patterns. The whole experiment was divided into 16 runs. Each run contained 45 randomly selected images (each of them shown twice per run—not in succession), resulting in a total experiment time of about 50 min. The MEG data that is included here for demonstration purposes contains only the first 15 minutes of the whole session, during which 53 faces and 54 scenes have been observed. |

== License == To reference this dataset in your publications, please cite Cichy et al. (2014). |

| Line 12: | Line 12: |

| These set of functions allow you to do support vector machine (SVM) and linear discriminant analysis (LDA) classification on your MEG data across time.Input: the input is channel data from two conditions (e.g. condA and condB) across time. Number of samples per condition should be the same for both condA and condB. Each of them should at least contain two samples.Output: the output is a decoding curve across time, showing your decoding accuracy (decoding condA vs. condB) at timepoint 't'. We have two condition types: faces, and scenes. We want to decode faces vs. scenes using 306 MEG channels. In the data, the faces are named as condition ‘201’; and the scenes are named as condition ‘203’. | Two decoding processes are available in Brainstorm: |

| Line 14: | Line 14: |

| == Making protocol and loading the data == Go to the file menu and create a new protocol called ''faces_vs_scenes ''with the options shown in the following figure. <<BR>><<BR>> |

* Decoding -> SVM classifier decoding * Decoding -> max-correlation classifier decoding |

| Line 17: | Line 17: |

| {{attachment:1-prot.png}} | These two processes work in a similar way, but they use a different classifier, so only SVM is demonstrated here. |

| Line 19: | Line 19: |

| <<BR>> | * '''Input''': the input is the time recording data from two conditions (e.g. condA and condB) across time. Number of samples per condition do not have to be the same for both condA and condB, but each of them should have enough samples to create k-folds (see parameter below). * '''Output''': the output is a decoding time course, or a temporal generalization matrix (train time x test time). * '''Classifier''': Two methods are offered for the classification of MEG recordings across time: support vector machine (SVM) and max-correlation classifier. |

| Line 21: | Line 23: |

| After making your protocol, go to the functional data view (sorted by subjects). Then create a new subject ''(let's keep the default name: Subject01)'': to create a new subject right click on the protocol name; then press new subject, choose the default values shown in the following figure; press save. | In the context of this tutorial, we have two condition types: faces, and objects. The participant was shown different types of images and we want to decode the face images vs. the object images using 306 MEG channels. |

| Line 23: | Line 25: |

| <<BR>><<BR>> | == Download and installation == * From the [[http://neuroimage.usc.edu/bst/download.php|Download]] page of this website, download the file 'sample_decoding.zip', and unzip to get the MEG file: '''subj04NN_sess01-0_tsss.fif''' * SVM decoding requires the LibSVM toolbox. The SVM decoding process tries to automatically install it, but should it fail here's how you install it manually: * [[https://www.csie.ntu.edu.tw/~cjlin/libsvm/#download|Download]] the libsvm ZIP file here. * Extract the content of the ZIP file to a permanent folder. * On Linux or Mac, you need to compile the library. Mac users can use XCode to do so. * On Windows, you can use windows subfolder of the zip file. * Add the path to the LibSVM folder in Matlab using addpath. |

| Line 25: | Line 34: |

| {{attachment:2_subj.png}} | * Start Brainstorm. * Select the menu File -> Create new protocol. Name it "'''TutorialDecoding'''" and select the options: * "'''Yes, use protocol's default anatomy'''", * "'''No, use one channel file per acquisition run'''". <<BR>><<BR>> {{attachment:1_create_new_protocol.jpg||width="400"}} |

| Line 27: | Line 39: |

| == Import MEG data == 1. Right click on the subject node (Subject01), and select ‘''review raw file’.'' 1. Change the file format to ‘''MEG/EEG: Neuromag FIF (*.fif)''’; then select the .fif file (''mem6.fif'') you downloaded. 1. Press event channels <<BR>> {{attachment:3_fif.png}} 1. In the ‘''mem6-0 tsss mc’'' node right click on ‘''Link to raw file''’; select ‘Import in database’. 1. Set epoch time to [-100, 1000] 1. Time range: [-100,0] 1. From the events select only these two events: 201 (faces), and 203(scenes) <<BR>> {{attachment:4_importfif.png}} 1. Then press import. You will get a message saying ‘some epochs are shorter than the others..’ . Press yes. |

== Import the recordings == * Go to the "functional data" view of the database (sorted by subjects). * Right-click on the TutorialDecoding folder -> New subject -> '''Subject01''' <<BR>>Leave the default options you defined for the protocol. * Right click on the subject node (Subject01) -> '''Review raw file'''''.'' <<BR>>Select the file format: "'''MEG/EEG: Neuromag FIFF (*.fif)'''"<<BR>>Select the file: '''subj04NN-sess01-0_tsss.fif''' <<BR>> * {{attachment:2_review_raw_file.jpg||width="440"}} <<BR>><<BR>> {{attachment:2_review_raw_file2.jpg||width="440"}} <<BR>><<BR>> * Select "Event channels" to read the triggers from the stimulus channel. <<BR>><<BR>> {{attachment:3_event_channel.jpg||width="320"}} * We will not pay attention to MEG/MRI registration because we are not going to compute any source models. The decoding is done on the sensor data. * Double click on the 'Link to raw file' to visualize the raw recordings. Event codes 13-24 indicate responses to face images, and we will combine them to a single group called 'faces'. To do so, select events 13-24 using SHIFT + click and from the menu select "'''Events -> Duplicate groups'''". Then select "'''Events -> Merge groups'''". The event codes are duplicated first so we do not lose the original 13-24 event codes. {{attachment:4_duplicate_groups_faces.jpg||width="500"}} <<BR>><<BR>> {{attachment:5_merge_groups_faces.jpg||width="500"}} * Event codes 49-59 indicate responses to object images, and we will combine them to a single group called 'objects'. To do so, select events 49-59 (SHIFT + click) and from the menu select Events -> Duplicate groups. Then select Events -> Merge groups. No screenshots are shown since this is similar to above. * We will now import the 'faces' and 'objects' responses to the database. Select "'''File -> Import in database"'''. 
<<BR>><<BR>> {{attachment:10_import_in_database.jpg||width="500"}} <<BR>><<BR>> * Select only two events: 'faces' and 'objects' * Epoch time: [-200, 800] ms * Remove DC offset: Time range: [-200, 0] ms * Do not create separate folders for each event type<<BR>> {{attachment:11_import_in_database_window.jpg||width="500"}} |

| Line 37: | Line 54: |

| == Decoding conditions == 1) Select ‘Process2’ from the bottom brainstorm window. 2) Drag and drop 40 files from folder ‘201’ to ‘Files A’; and 40 files from folder ‘203’ to ‘Files B’. You can select more than 40 or less. The important thing is that both ‘A’ and ‘B’ should have the same number of files. {{file://localhost/Users/skhaligh/Library/Caches/TemporaryItems/msoclip/0/clip_image011.png||height="344",width="492"}} |

== Select files == * Drag and drop all the face and object trials to the Process1 tab at the bottom of the Brainstorm window. * Intuitively, you might have expected to use the Process2 tab to decode faces vs. objects. But the decoding process is designed to also handle pairwise decoding of multiple classes (not just two classes) for computational efficiency, so more that two categories can be entered in the Process1 tab.<<BR>> {{attachment:12_select_files.jpg||width="400"}} |

| Line 40: | Line 58: |

| === a) Cross-validation === '''Cross'''-'''validation''' is a model '''validation''' technique for assessing how the results of our decoding analysis will generalize to an independent data set. 1) Select run -> decoding conditions -> classification with cross validation {{file://localhost/Users/skhaligh/Library/Caches/TemporaryItems/msoclip/0/clip_image013.png||height="204",width="434"}} Here, you will have three choices for cross-validation. If you have Matlab Statistics and Machine Learning Toolbox, you can use ‘Matlab SVM’ or ‘Matlab LDA’. You can also install the ‘LibSVM’ toolbox (https://www.csie.ntu.edu.tw/~cjlin/libsvm/ ); and addpath it to your Matlab session. LibSVM may be faster. The LibSVM cross-validation won’t be stratified. However, if you select Matlab SVM/LDA, it will do a k-fold stratified cross-validation for you, meaning that each fold will contain the same proportions of the two types of class labels. You can also set the number of folds for cross-validation; and the cut-off frequency for low-pass filtering – this is to smooth your data. 2) To continue, set the values as shown below: {{file://localhost/Users/skhaligh/Library/Caches/TemporaryItems/msoclip/0/clip_image015.png||height="272",width="267"}} ''' '''''' '''3) The process will take some time.The results are then saved in a file (‘Matlab SVM Decoding_201_203’) under the new ‘decoding’ node. If you double click on it you will see a decoding curve across time (shown below). {{file://localhost/Users/skhaligh/Library/Caches/TemporaryItems/msoclip/0/clip_image017.png||height="366",width="434"}} {{file://localhost/Users/skhaligh/Library/Caches/TemporaryItems/msoclip/0/clip_image019.png||height="202",width="390"}} The internal brainstorm plot is not perfect for this purpose. To make a proper plot you can plot the results yourself. Right click on the result file (Matlab SVM Decoding_201_203), > File -> export to Matlab. Give it a name ‘decodingcurve’. 
Then using Matlab plot function you can plot the decoding accuracies across time. |

== Decoding with cross-validation == '''Cross'''-'''validation''' is a model '''validation''' technique for assessing how the results of our decoding analysis will generalize to an independent data set. |

| Line 43: | Line 61: |

| ''Matlab code: '' | * Select process "'''Decoding > SVM decoding'''"<<BR>>'''Note:''' the SVM process requires the LibSVM toolbox, see installation instructions above. {{attachment:13_pipeline_editor_select_decoding.jpg||width="400"}} * Select 'MEG' for sensor types * Set 30 Hz for low-pass cutoff frequency. Equivalently, one could have applied a low-pass filters to the recordings and then run the decoding process. But this is a shortcut to apply a low-pass filter just for decoding without permanently altering the input recordings. * Select 100 for number of permutations. Alternatively use a smaller number for faster results. * Select 5 for number of k-folds * Select 'Pairwise' for decoding. Hint: if more that two classes were input to the Process1 tab, the decoding process will perform decoding separately for each possible pair of classes. It will return them in the same form as Matlab's 'squareform' function (i.e. lower triangular elements in columnwise order) * The decoding process follows a similar procedure as Pantazis et al. (2018). Namely, to reduce computational load and improve signal-to-noise ratio, we first randomly assign all trials (from each class) into k folds, and then subaverage all trials within each fold into a single trial, thus yielding a total of k subaveraged trials per class. Decoding then follows with a leave-one-out cross-validation procedure on the subavaraged trials. * For example, if we have two classes with 100 trials each, selecting 5 number of folds will randomly assign the 100 trials in 5 folds with 20 trials each. The process than will subaverage the 20 trials yielding 5 subaveraged trials for each class. <<BR>> {{attachment:14_svm_decoding_pairwise.jpg||width="380"}} |

| Line 45: | Line 70: |

| '''-figure; '' ''''' | * The process will take some time. The results are then saved in a file in the 'decoding' folder<<BR>> {{attachment:15_svm_decoding_pairwise_results.jpg||width="800"}} |

| Line 47: | Line 72: |

| ''-plot(decodingcurve.Value); ''' ''''' | * The resulting matrix gives you the decoding accuracy over time. Notice that there is no significant accuracy before about 80 ms, after which there is a very high accuracy (> 90%) between 100 to 300 ms. This tells us that the neural representation of the face and object images vary significantly, and if we wanted to analyse this further we could narrow our analysis at this time period. |

| Line 49: | Line 74: |

| '' {{file://localhost/Users/skhaligh/Library/Caches/TemporaryItems/msoclip/0/clip_image021.png||height="257",width="290"}} ''' ''''' | * For temporal generalization, repeat the above process but select 'Temporal Generalization'. * To evaluate the persistence of neural representations over time, the decoding procedure can be generalized across time by training the SVM classifier at a given time point t, as before, but testing across all other time points (Cichy et al., 2014; King and Dehaene, 2014; Isik et al., 2014). Intuitively, if representations are stable over time, the classifier should successfully discriminate signals not only at the trained time t, but also over extended periods of time.<<BR>> {{attachment:16_svm_decoding_temporalgeneralization.jpg||width="380"}} |

| Line 51: | Line 77: |

| === b) Permutation === '''''This is an iterative procedure. The training and test data for the SVM/LDA classifier are selected in each iteration by randomly permuting the samples and grouping them into bins of size n (you can select the trial bin sizes). In each iteration two samples (one from each condition) are left out for test. The rest of the data are used to train the classifier with. 1) Select run -> decoding conditions -> classification with permutation 2) Set the values as the following: ''''' |

* The process will take some time. The results are then saved in a file in the 'decoding' folder {{attachment:17_svm_decoding_temporalgeneralization_results.jpg||width="800"}} |

| Line 54: | Line 79: |

| '' {{file://localhost/Users/skhaligh/Library/Caches/TemporaryItems/msoclip/0/clip_image023.png||height="325",width="299"}} ''' ''''' | * This representation gives us a decoding (approx. symmetric) matrix highlighting time periods that are similar in neural representation in red. For example, early signals up until 200ms have a narrow diagonal, indicating highly transient visual representations. On the other hand, the extended diagonal (red blob) between 200 and 400 ms indicates a stable neural representation at this time. We notice a similar albeit slightly attenuated phenomenon between 400 and 800 ms. In sum, this visualization can help us identify time periods with transient and sustained neural representations. |

| Line 56: | Line 81: |

| '''''If the ‘Trial bin size’ is greater than 1, the training data will be randomly grouped into bins of the size you determine here. The samples within each bin are then averaged (we refer to this as sub-averaging); the classifier is then trained using the averaged samples. For example, if you have 40 faces and 40 scenes, and you set the trial bin size to 5; then for each condition you will have 8 bins each containing 5 samples. 7 bins from each condition will be used for training, and the two left-out bins (one face bin, one scene bin) will be used for testing the classifier performance. 3) The results are saved under the ‘decoding ’ node . If you selected ‘Matlab SVM’, the file name will be : ‘Matlab SVM Decoding-permutation_201_203’ . Double click on it. You will get the plot blow: ''''' | == References == 1. Cichy RM, Pantazis D, Oliva A (2014), [[http://www.nature.com/neuro/journal/v17/n3/full/nn.3635.html|Resolving human object recognition in space and time]], Nature Neuroscience, 17:455–462. 1. Guggenmos M, Sterzer P, Cichy RM (2018), [[https://doi.org/10.1016/j.neuroimage.2018.02.044|Multivariate pattern analysis for MEG: A comparison of dissimilarity measures]], NeuroImage, 173:434-447. 1. King JR, Dehaene S (2014), [[https://doi.org/10.1016/j.tics.2014.01.002|Characterizing the dynamics of mental representations: the temporal generalization method]], Trends in Cognitive Sciences, 18(4): 203-210 1. Isik L, Meyers EM, Leibo JZ, Poggio T, [[https://doi.org/10.1152/jn.00394.2013|The dynamics of invariant object recognition in the human visual system]], Journal of Neurophysiology, 111(1): 91-102 |

| Line 58: | Line 87: |

| '' {{file://localhost/Users/skhaligh/Library/Caches/TemporaryItems/msoclip/0/clip_image025.png||height="204",width="434"}} ''' ''''' | == Additional documentation == * Forum: Decoding in source space: http://neuroimage.usc.edu/forums/showthread.php?2719 |

| Line 60: | Line 90: |

| '''''This might not be very intuitive. You can export the decoding results into Matlab and plot it yourself. If you export the decoding results into Matlab, the imported structure will have two important fields: a) Value: this is the mean decoding accuracy across all permutations b) Std: this is the standard deviation across all permutations. If you plot the mean value (decodingcurve.Value), below is what you will get. You also have access to the standard deviation (decodingcurve.Std), in case you want to plot it. ''' {{file://localhost/Users/skhaligh/Library/Caches/TemporaryItems/msoclip/0/clip_image027.png||height="288",width="362"}} ''' ''''' | <<EmbedContent(http://neuroimage.usc.edu/bst/get_feedback.php?Tutorials/Decoding)>> |

Machine learning: Decoding / MVPA

Authors: Dimitrios Pantazis, Seyed-Mahdi Khaligh-Razavi, Francois Tadel

This tutorial illustrates how to run MEG decoding (a type of multivariate pattern analysis / MVPA) using support vector machines (SVM).


License

To reference this dataset in your publications, please cite Cichy et al. (2014).

Description of the decoding functions

Two decoding processes are available in Brainstorm:

Decoding -> SVM classifier decoding

Decoding -> max-correlation classifier decoding

These two processes work in a similar way, but they use a different classifier, so only SVM is demonstrated here.

Input: the input is the recorded time series from two conditions (e.g. condA and condB). The number of samples per condition does not have to be the same for condA and condB, but each condition needs enough samples to create the k folds (see the parameter below).

Output: the output is a decoding time course, or a temporal generalization matrix (train time x test time).

Classifier: Two methods are offered for the classification of MEG recordings across time: support vector machine (SVM) and max-correlation classifier.

In the context of this tutorial, we have two condition types: faces, and objects. The participant was shown different types of images and we want to decode the face images vs. the object images using 306 MEG channels.

Download and installation

From the Download page of this website, download the file 'sample_decoding.zip', and unzip to get the MEG file: subj04NN_sess01-0_tsss.fif

- SVM decoding requires the LibSVM toolbox. The SVM decoding process tries to install it automatically; if that fails, install it manually as follows:

- Download the LibSVM ZIP file from https://www.csie.ntu.edu.tw/~cjlin/libsvm/#download.

- Extract the content of the ZIP file to a permanent folder.

- On Linux or Mac, you need to compile the library. Mac users can use XCode to do so.

- On Windows, you can use the 'windows' subfolder of the ZIP file.

- Add the path to the LibSVM folder in Matlab using addpath.

- Start Brainstorm.

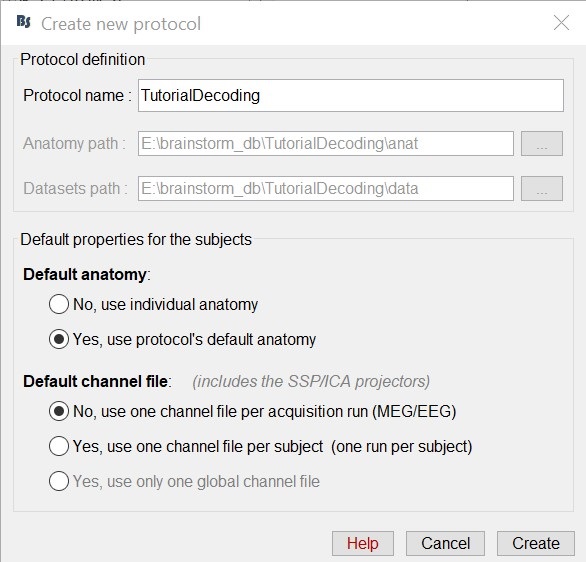

Select the menu File -> Create new protocol. Name it "TutorialDecoding" and select the options:

"Yes, use protocol's default anatomy",

"No, use one channel file per acquisition run".

Import the recordings

- Go to the "functional data" view of the database (sorted by subjects).

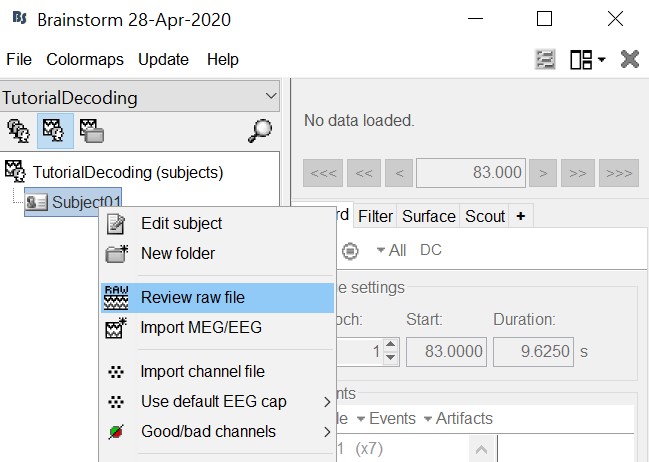

Right-click on the TutorialDecoding folder -> New subject -> Subject01

Leave the default options you defined for the protocol.

Right-click on the subject node (Subject01) -> Review raw file.

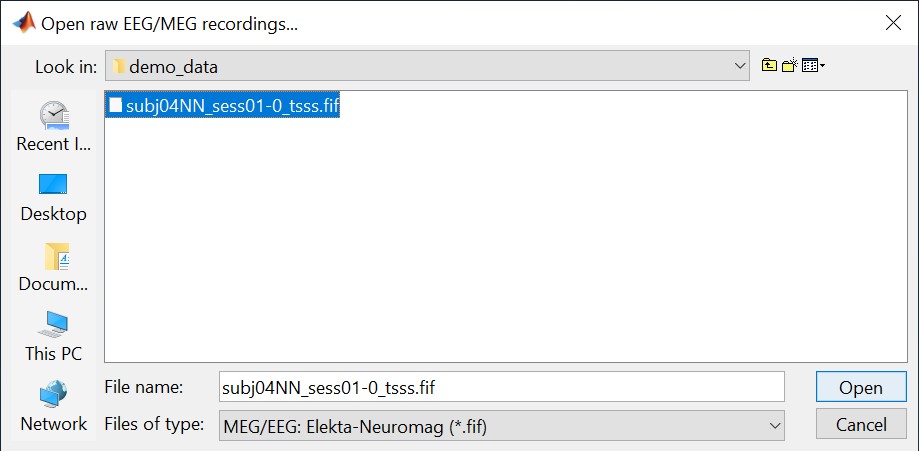

Select the file format: "MEG/EEG: Neuromag FIFF (*.fif)"

Select the file: subj04NN-sess01-0_tsss.fif

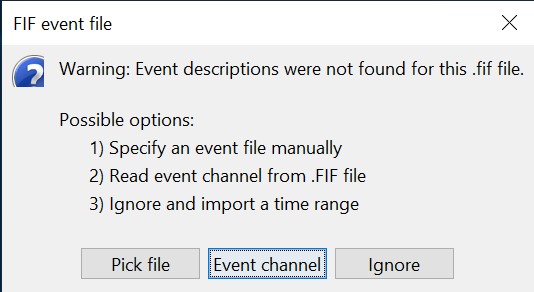

Select "Event channels" to read the triggers from the stimulus channel.

- We will not pay attention to MEG/MRI registration because we are not going to compute any source models. The decoding is done on the sensor data.

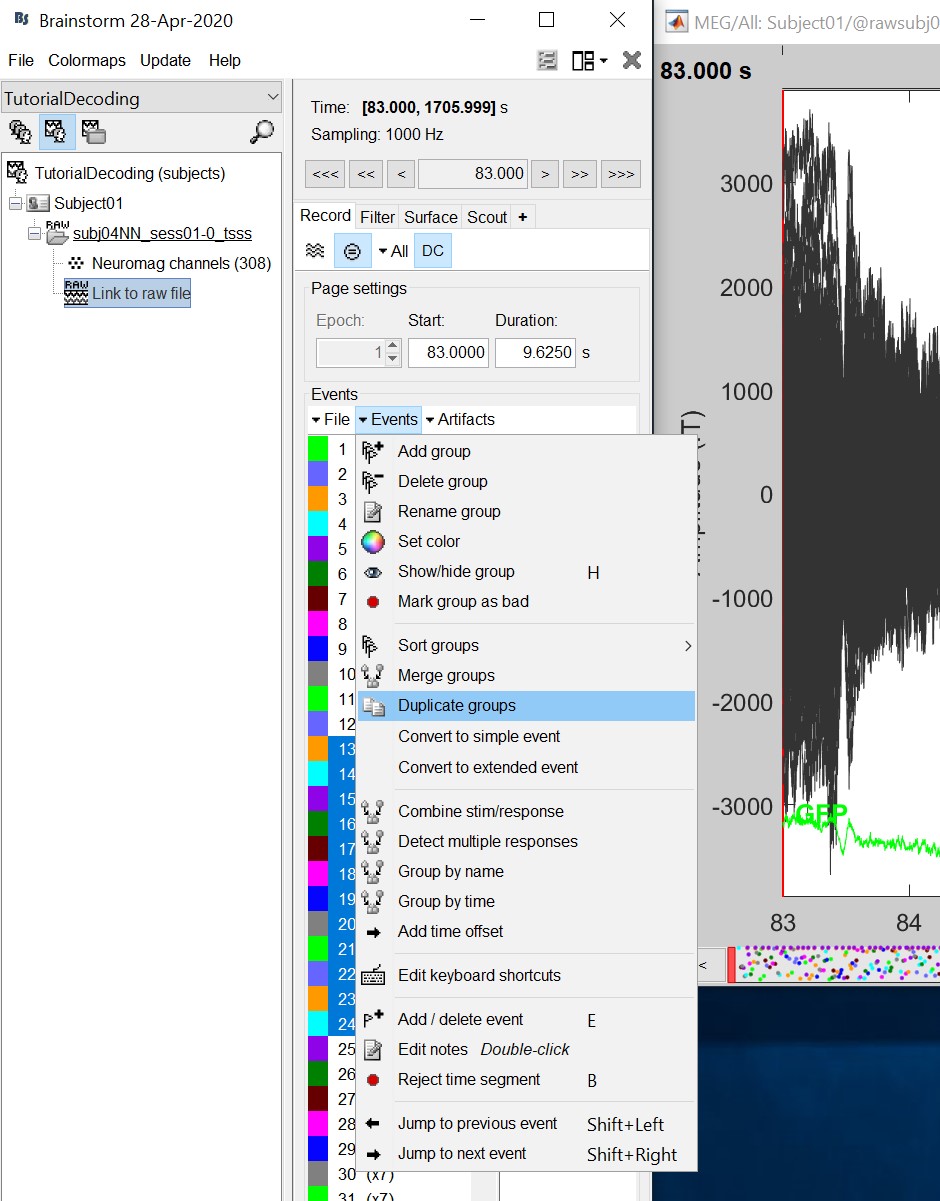

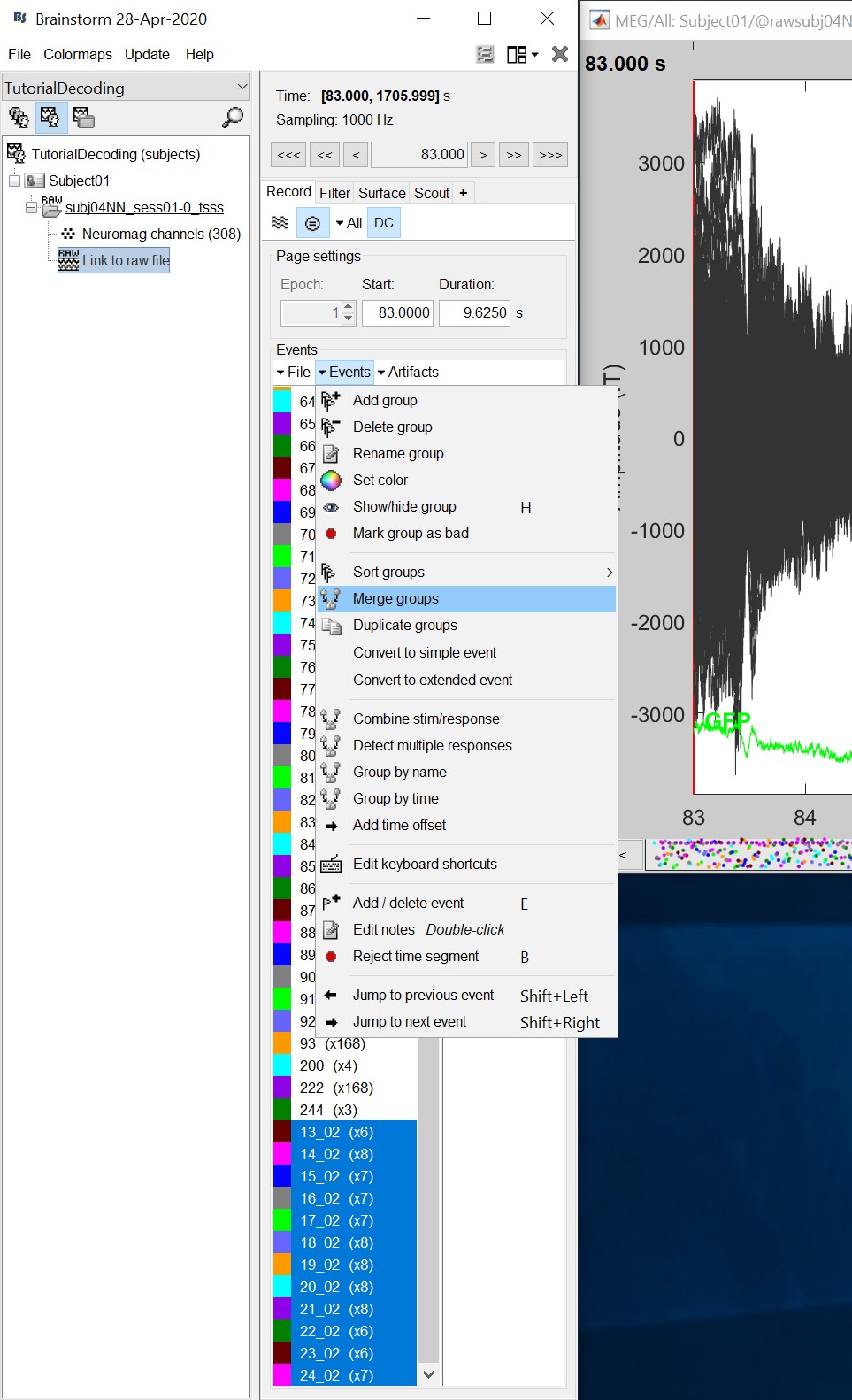

Double-click on 'Link to raw file' to visualize the raw recordings. Event codes 13-24 indicate responses to face images, and we will combine them into a single group called 'faces'. To do so, select events 13-24 using SHIFT + click and from the menu select "Events -> Duplicate groups". Then select "Events -> Merge groups". The event codes are duplicated first so that we do not lose the original 13-24 event codes.

Event codes 49-59 indicate responses to object images, and we will combine them into a single group called 'objects'. To do so, select events 49-59 (SHIFT + click) and from the menu select Events -> Duplicate groups. Then select Events -> Merge groups. No screenshots are shown since the steps are the same as above.

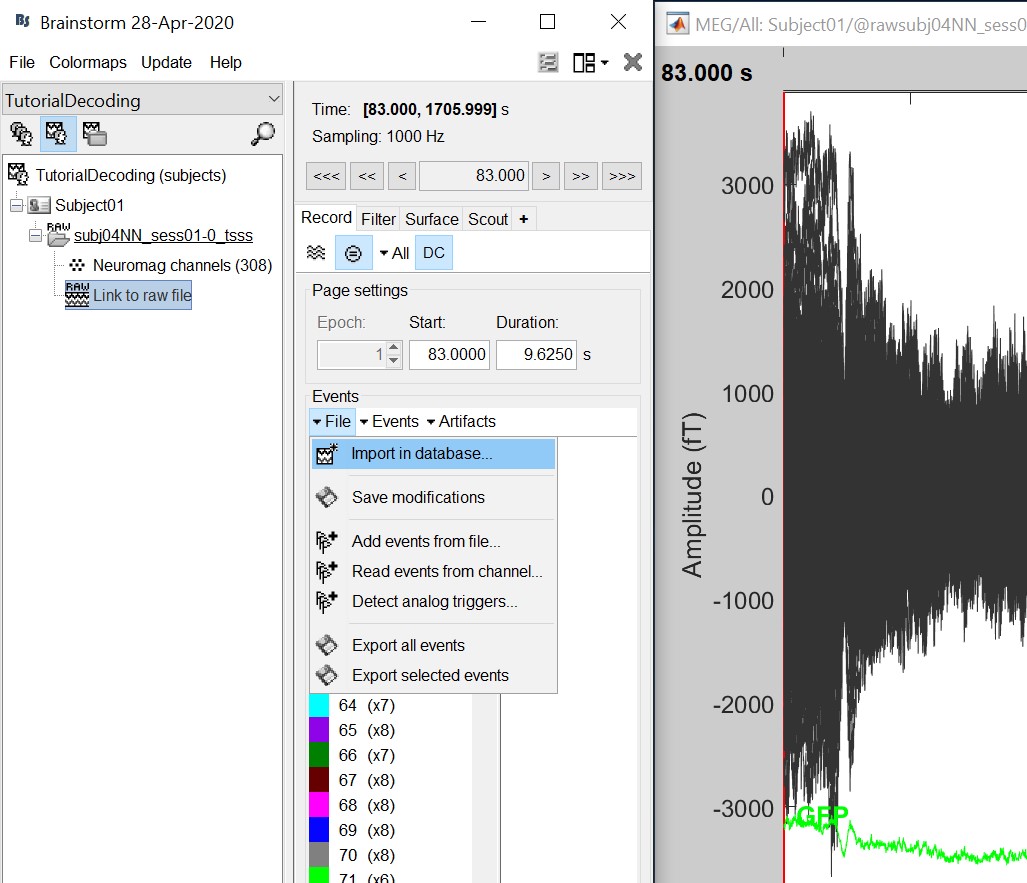

We will now import the 'faces' and 'objects' responses to the database. Select "File -> Import in database".

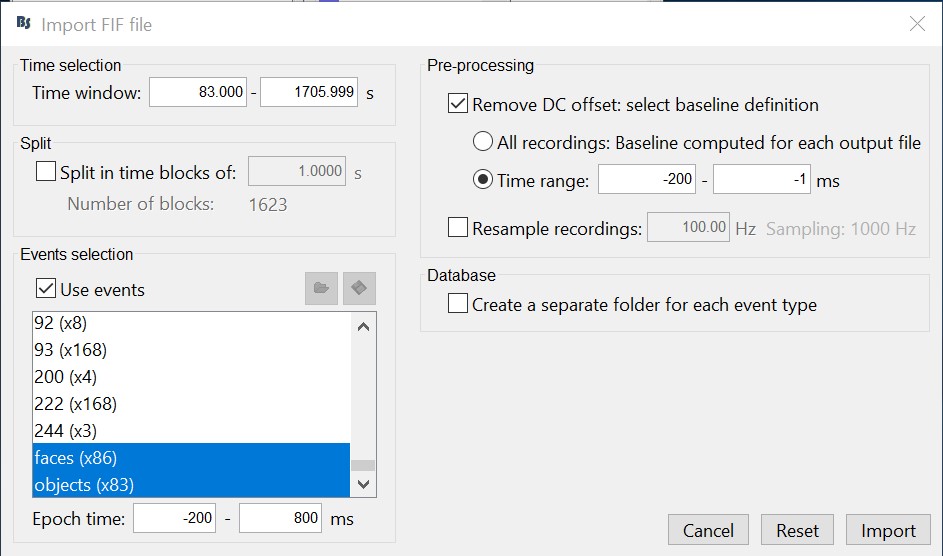

- Select only two events: 'faces' and 'objects'

- Epoch time: [-200, 800] ms

- Remove DC offset: Time range: [-200, 0] ms

Do not create separate folders for each event type
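The "Remove DC offset" option above subtracts a baseline from each epoch. As a rough illustration of the idea only (this is not Brainstorm's code), the sketch below subtracts each channel's mean over the [-200, 0] ms window from that channel. The function name `remove_dc_offset`, the toy epoch, the 100 ms sampling step, and the choice to include both window endpoints are assumptions made for the example.

```python
def remove_dc_offset(epoch, times_ms, baseline=(-200.0, 0.0)):
    """Subtract each channel's mean over the baseline window.

    epoch: list of channels, each a list of samples.
    times_ms: sample times in ms, same length as each channel.
    baseline: (start, end) of the baseline window in ms, endpoints included
              (an assumption of this sketch).
    """
    lo, hi = baseline
    idx = [i for i, t in enumerate(times_ms) if lo <= t <= hi]
    if not idx:
        raise ValueError("baseline window contains no samples")
    corrected = []
    for channel in epoch:
        offset = sum(channel[i] for i in idx) / len(idx)
        corrected.append([x - offset for x in channel])
    return corrected


# Toy epoch (made-up values): 2 channels sampled every 100 ms from -200 to 800 ms.
times_ms = [float(t) for t in range(-200, 801, 100)]
epoch = [
    [5.0] * 3 + [7.0] * 8,   # baseline sits at 5, response at 7
    [-2.0] * 3 + [0.0] * 8,  # baseline sits at -2, response at 0
]
corrected = remove_dc_offset(epoch, times_ms)
```

After the correction both toy channels have a zero baseline, so their post-stimulus values can be compared directly.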

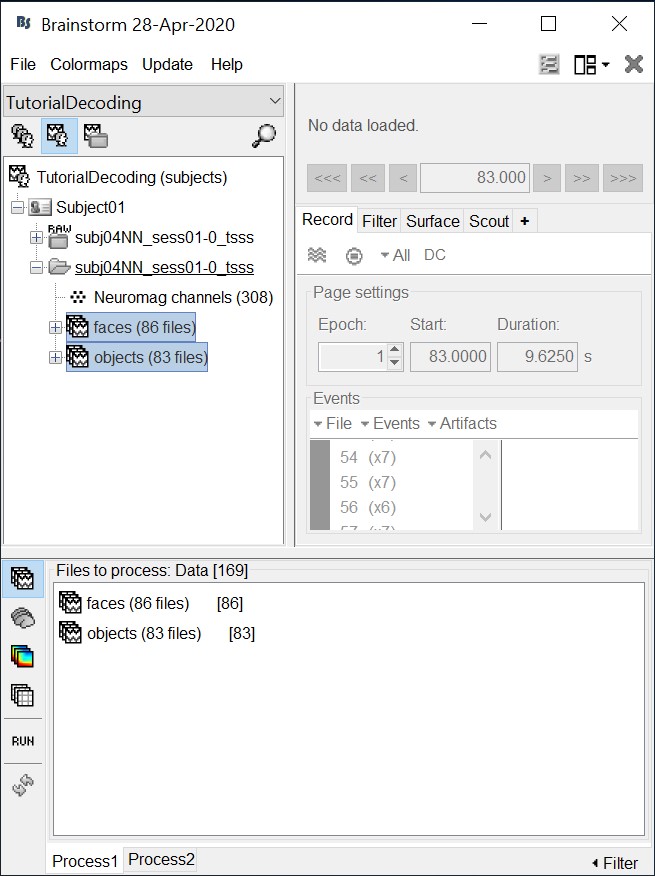

Select files

- Drag and drop all the face and object trials to the Process1 tab at the bottom of the Brainstorm window.

Intuitively, you might have expected to use the Process2 tab to decode faces vs. objects. But the decoding process is also designed to handle pairwise decoding of multiple classes (not just two) for computational efficiency, so more than two categories can be entered in the Process1 tab.

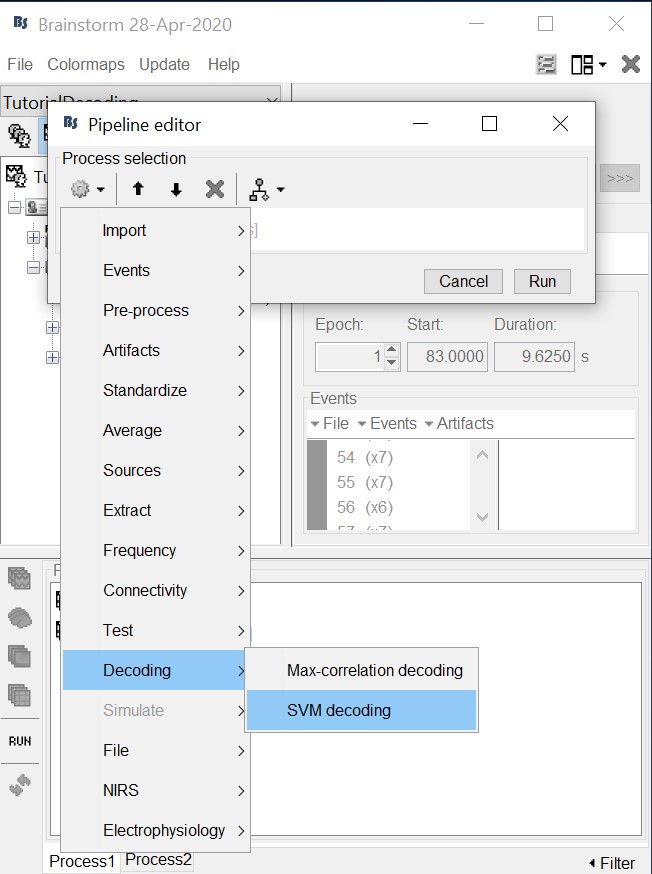

Decoding with cross-validation

Cross-validation is a model validation technique for assessing how the results of our decoding analysis will generalize to an independent data set.

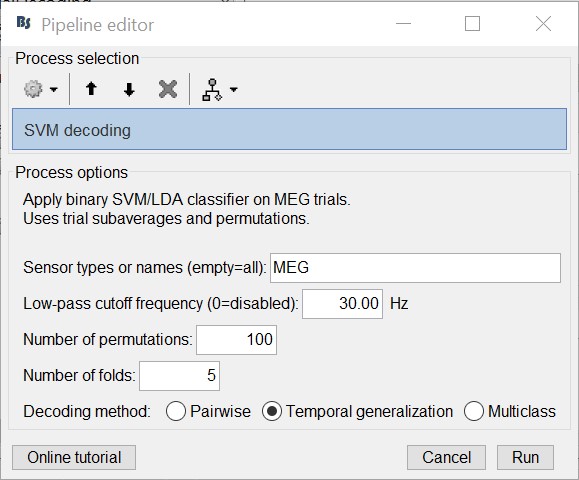

Select process "Decoding > SVM decoding"

Note: the SVM process requires the LibSVM toolbox, see installation instructions above.

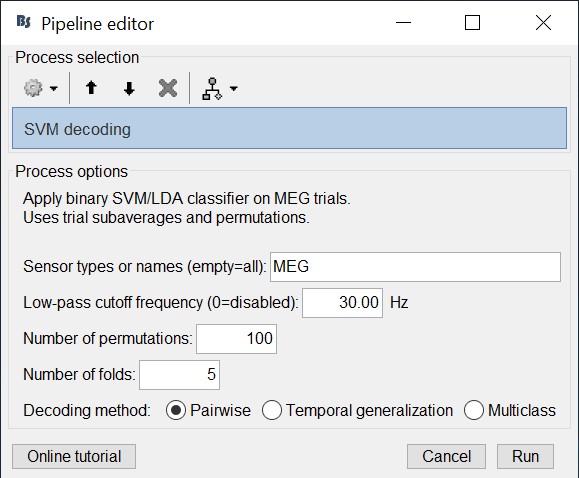

- Select 'MEG' for sensor types

- Set 30 Hz for the low-pass cutoff frequency. Equivalently, one could apply a low-pass filter to the recordings and then run the decoding process. This option is a shortcut that applies the low-pass filter for decoding only, without permanently altering the input recordings.

- Select 100 for the number of permutations. Use a smaller number for faster results.

- Select 5 for number of k-folds

- Select 'Pairwise' for decoding. Hint: if more than two classes are entered in the Process1 tab, the decoding process performs the decoding separately for each possible pair of classes. It returns the results in the same form as Matlab's 'squareform' function (i.e. lower triangular elements in columnwise order).

- The decoding process follows a procedure similar to that of Pantazis et al. (2018). Namely, to reduce computational load and improve signal-to-noise ratio, we first randomly assign all trials (from each class) to k folds, and then subaverage all trials within each fold into a single trial, thus yielding a total of k subaveraged trials per class. Decoding then follows with a leave-one-out cross-validation procedure on the subaveraged trials.

For example, if we have two classes with 100 trials each, selecting 5 folds will randomly assign the 100 trials of each class to 5 folds of 20 trials each. The process will then subaverage the 20 trials in each fold, yielding 5 subaveraged trials for each class.
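The fold assignment and subaveraging described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the Brainstorm implementation: the helper names `subaverage_folds` and `leave_one_out_splits`, the interleaved way trials are dealt into folds, and the toy trials (3 made-up features per trial) are all hypothetical.

```python
import random


def subaverage_folds(trials, k, rng):
    """Randomly assign trials to k folds and average the trials in each fold.

    trials: list of trials, each a list of feature values
            (e.g. the sensor values at one time point).
    Returns (averaged, folds): k subaveraged trials and the trial
    indices that went into each fold.
    """
    if len(trials) < k:
        raise ValueError("need at least k trials per class")
    order = list(range(len(trials)))
    rng.shuffle(order)
    folds = [order[i::k] for i in range(k)]  # deal shuffled trials into k folds
    n_features = len(trials[0])
    averaged = [
        [sum(trials[i][f] for i in fold) / len(fold) for f in range(n_features)]
        for fold in folds
    ]
    return averaged, folds


def leave_one_out_splits(k):
    """Each subaveraged trial is held out once; the other k-1 are used for training."""
    return [([j for j in range(k) if j != held_out], held_out) for held_out in range(k)]


# Toy class (made-up values): 100 trials with 3 features each, 5 folds.
rng = random.Random(0)
trials = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(100)]
averaged, folds = subaverage_folds(trials, k=5, rng=rng)
splits = leave_one_out_splits(5)
```

With 100 trials and k = 5, every fold holds 20 trials, and the classifier would be trained on 4 subaveraged trials per class and tested on the remaining one, five times over.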

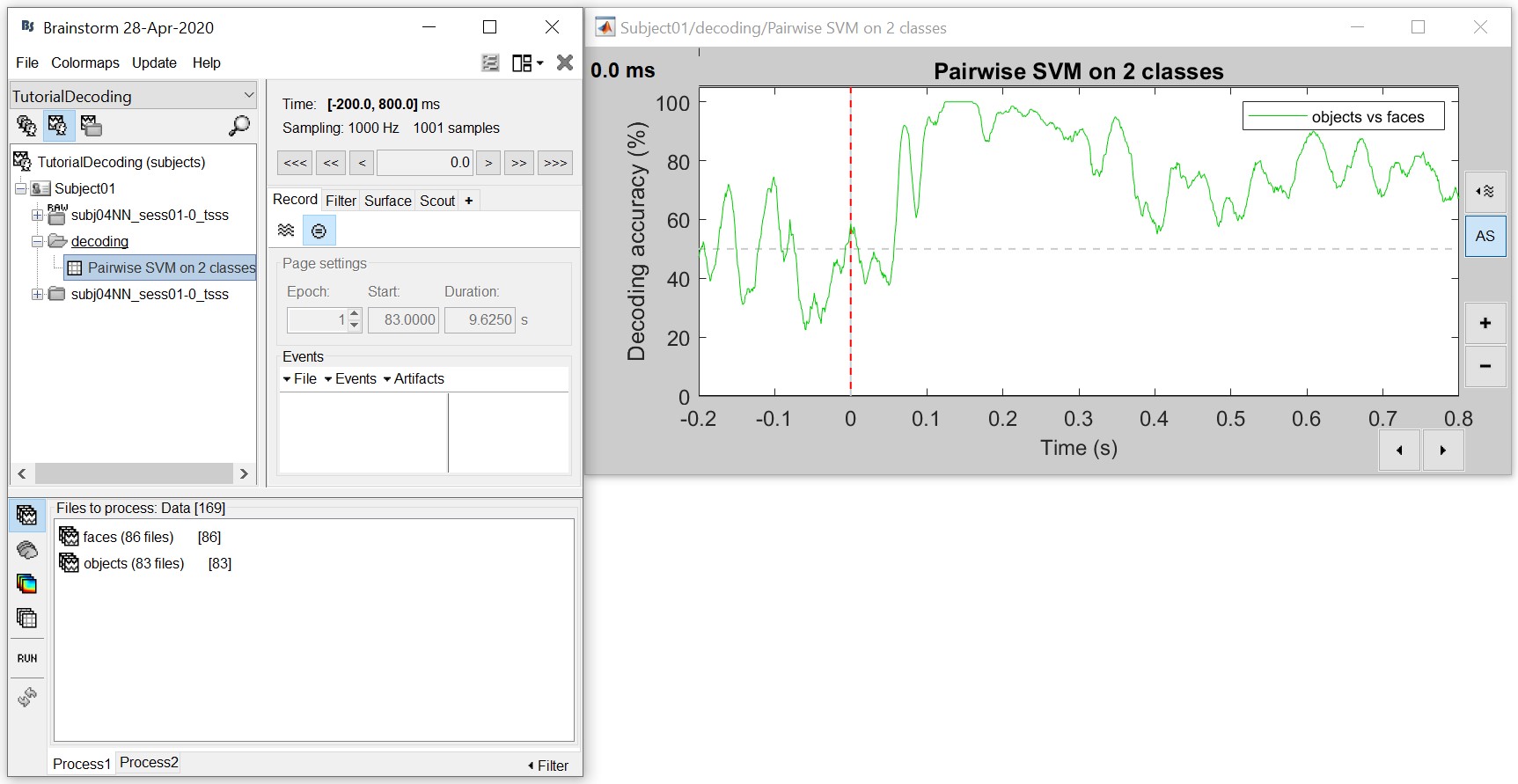
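When more than two classes are decoded pairwise, the results come back in the order described above: lower triangular elements, column by column. The sketch below lists the class pairs in that order so that a position in the output can be mapped back to a pair. The function name `pairwise_order` and the four class labels are hypothetical, and how Brainstorm itself labels each pair is not specified here.

```python
def pairwise_order(class_names):
    """List class pairs as lower triangular elements in columnwise order.

    For n classes this yields n * (n - 1) / 2 pairs: first every pair
    involving class 0 (column 0), then the remaining pairs of column 1, etc.
    """
    n = len(class_names)
    pairs = []
    for col in range(n):
        for row in range(col + 1, n):
            pairs.append((class_names[row], class_names[col]))
    return pairs


# Four hypothetical classes give 6 pairwise decoding results.
pairs = pairwise_order(["A", "B", "C", "D"])
```

With only the two classes used in this tutorial there is a single pair, so the ordering matters only once a third class is added.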

The process will take some time. The results are then saved in a file in the 'decoding' folder.

The resulting matrix gives you the decoding accuracy over time. Notice that there is no significant accuracy before about 80 ms, after which there is a very high accuracy (> 90%) between 100 and 300 ms. This tells us that the neural representations of the face and object images differ significantly, and if we wanted to analyse this further we could narrow our analysis to this time period.

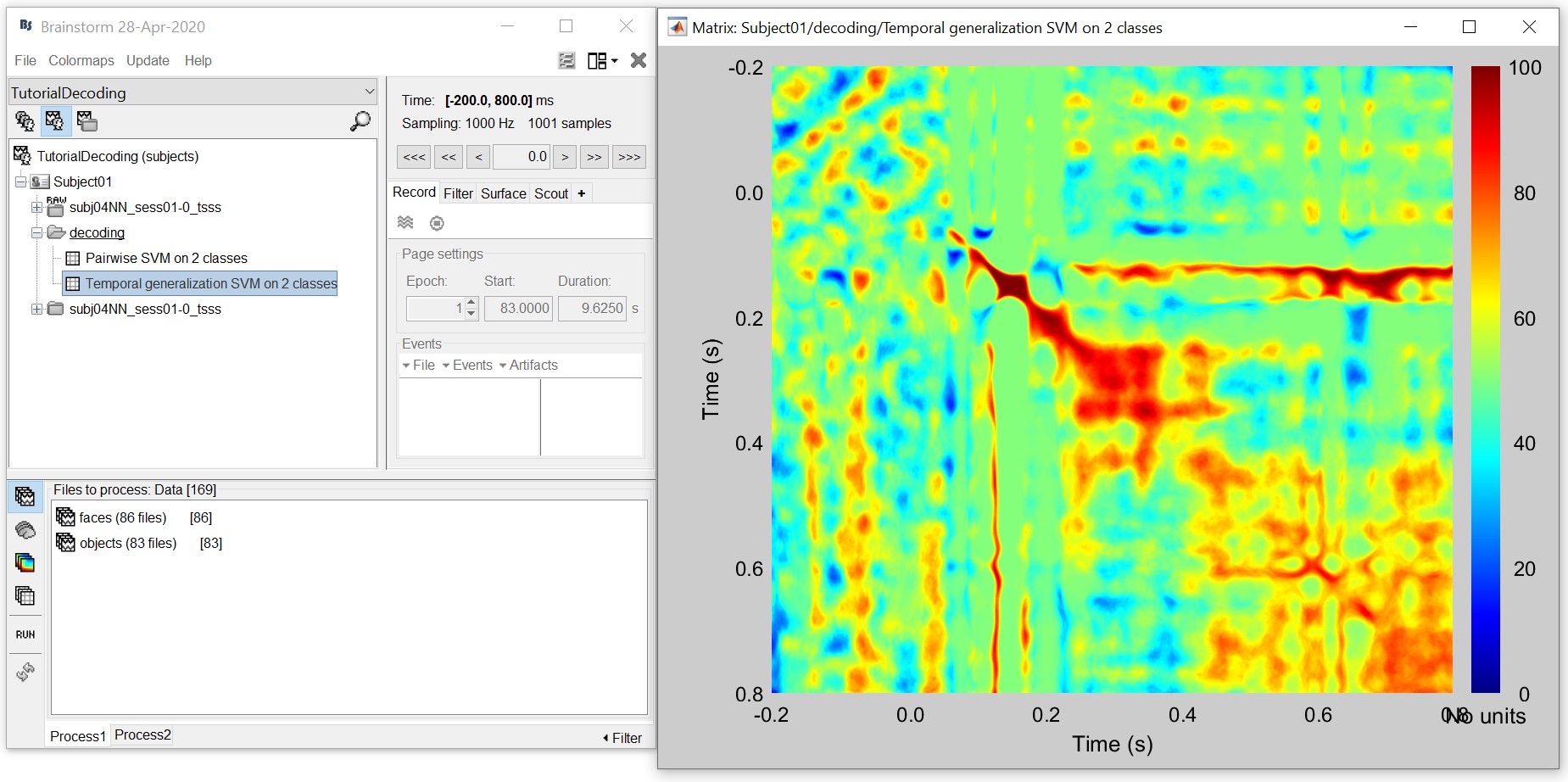

- For temporal generalization, repeat the above process but select 'Temporal Generalization'.

To evaluate the persistence of neural representations over time, the decoding procedure can be generalized across time by training the SVM classifier at a given time point t, as before, but testing across all other time points (Cichy et al., 2014; King and Dehaene, 2014; Isik et al., 2014). Intuitively, if representations are stable over time, the classifier should successfully discriminate signals not only at the trained time t, but also over extended periods of time.
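The train-at-one-time, test-at-all-times idea can be illustrated with a short sketch. It is not the Brainstorm implementation: to stay self-contained it swaps the SVM for a nearest-class-mean classifier, scores ties as half-correct, and uses tiny made-up data with one feature and three time points. All function names are hypothetical.

```python
def mean_pattern(trials, t):
    """Average feature vector of a class at time index t."""
    n_features = len(trials[0][t])
    return [sum(trial[t][f] for trial in trials) / len(trials) for f in range(n_features)]


def sq_dist(x, y):
    return sum((a - b) ** 2 for a, b in zip(x, y))


def score(d_own, d_other):
    """1 if the sample is closer to its own class mean, 0.5 on a tie, else 0."""
    if d_own < d_other:
        return 1.0
    return 0.5 if d_own == d_other else 0.0


def temporal_generalization(train_a, train_b, test_a, test_b):
    """Return matrix[t_train][t_test] of accuracies.

    Each data set is indexed as trials[trial][time] -> list of features.
    """
    n_times = len(train_a[0])
    matrix = []
    for t_train in range(n_times):
        mean_a = mean_pattern(train_a, t_train)  # "training" at time t_train
        mean_b = mean_pattern(train_b, t_train)
        row = []
        for t_test in range(n_times):            # testing at every time point
            total = 0.0
            for trial in test_a:
                x = trial[t_test]
                total += score(sq_dist(x, mean_a), sq_dist(x, mean_b))
            for trial in test_b:
                x = trial[t_test]
                total += score(sq_dist(x, mean_b), sq_dist(x, mean_a))
            row.append(total / (len(test_a) + len(test_b)))
        matrix.append(row)
    return matrix


# Made-up data: no class difference at time 0, a stable difference at times 1 and 2.
train_a = [[[0.0], [1.0], [1.0]], [[0.0], [1.2], [0.8]]]
train_b = [[[0.0], [-1.0], [-1.0]], [[0.0], [-0.8], [-1.2]]]
test_a = [[[0.0], [0.9], [1.1]]]
test_b = [[[0.0], [-1.1], [-0.9]]]
matrix = temporal_generalization(train_a, train_b, test_a, test_b)
```

In this toy case the classifier trained at time 1 also works at time 2 and vice versa, which is the off-diagonal pattern expected from a representation that is stable over time, while anything involving time 0 stays at chance.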

The process will take some time. The results are then saved in a file in the 'decoding' folder.

- This representation gives us an approximately symmetric decoding matrix in which time periods with similar neural representations appear in red. For example, early signals up until 200 ms have a narrow diagonal, indicating highly transient visual representations. On the other hand, the extended diagonal (red blob) between 200 and 400 ms indicates a stable neural representation at this time. We notice a similar, albeit slightly attenuated, phenomenon between 400 and 800 ms. In sum, this visualization can help us identify time periods with transient and sustained neural representations.

References

Cichy RM, Pantazis D, Oliva A (2014), Resolving human object recognition in space and time, Nature Neuroscience, 17:455–462.

Guggenmos M, Sterzer P, Cichy RM (2018), Multivariate pattern analysis for MEG: A comparison of dissimilarity measures, NeuroImage, 173:434-447.

King JR, Dehaene S (2014), Characterizing the dynamics of mental representations: the temporal generalization method, Trends in Cognitive Sciences, 18(4):203-210.

Isik L, Meyers EM, Leibo JZ, Poggio T (2014), The dynamics of invariant object recognition in the human visual system, Journal of Neurophysiology, 111(1):91-102.

Additional documentation

Forum: Decoding in source space: http://neuroimage.usc.edu/forums/showthread.php?2719