Tutorial: Import and visualize functional NIRS data

This tutorial is under construction.

Authors: Thomas Vincent, Zhengchen Cai

This tutorial assumes that tutorials 1 to 6 have been completed. Although they focus on MEG data, they introduce Brainstorm features that are required for this tutorial.

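List of prerequisites:

- Create a new protocol

- Import the subject anatomy

- Display the anatomy

- Channel file / MEG-MRI coregistration

- Review continuous recordings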

Download

The dataset used in this tutorial is available online.

- Go to the Download page of this website and download the file nirs_sample.zip.

- Unzip it in a folder that is not inside any of the Brainstorm folders.

Presentation of the experiment

- Finger tapping task: 10 stimulation blocks of 30 seconds each, with rest periods of ~30 seconds

- One subject, one NIRS acquisition run of 12 minutes at 10Hz

- 4 sources and 12 detectors (+ 4 proximity channels) placed above the right motor region

- Two wavelengths: 690nm and 830nm

- MRI anatomy: 3T, from (TODO: scanner type)
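
The acquisition parameters above imply a few numbers that are worth checking once the file is loaded. The sketch below only does that arithmetic with the values from this description; the rest duration is the approximate ~30 seconds, so the blocks-plus-rests total is an estimate, not the actual event timing.

```python
# Back-of-the-envelope numbers for the acquisition described above.
# All values are copied from the experiment description; REST_S is approximate.
SAMPLING_RATE_HZ = 10
RUN_MIN = 12
N_BLOCKS = 10
BLOCK_S = 30
REST_S = 30  # "~30 seconds"

run_s = RUN_MIN * 60
n_samples = run_s * SAMPLING_RATE_HZ        # time points per channel
task_s = N_BLOCKS * BLOCK_S                 # total finger-tapping time
design_s = N_BLOCKS * (BLOCK_S + REST_S)    # each block followed by its rest

print(f"{n_samples} samples per channel")   # 7200 samples per channel
print(f"{task_s} s of tapping, {design_s} s of blocks + rests in a {run_s} s run")
# 300 s of tapping, 600 s of blocks + rests in a 720 s run
```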

Create the data structure

Create a protocol called "TutorialNIRSTORM":

- Go to File -> New Protocol.

- Use the following settings:

  - Default anatomy: Use individual anatomy.

  - Default channel file: Use one channel file per subject (EEG).

In terms of sensor configuration, NIRS is very similar to EEG: the placement of the optodes may change from one subject to another.

Should we add (EEG or NIRS) in the interface?

Create a subject called "Subject01" (File -> New subject) with the default options.

Import anatomy

Import MRI and meshes

Make sure you are in the anatomy view of the protocol.

Right-click on "Subject01 -> Import anatomy folder". Select the anatomy folder from the nirs_sample data folder. Reply "yes" when asked to apply the transformation. Leave the number of vertices for the head mesh at the default value.

This opens the MRI review panel, where you have to set the fiducial points (see Import the subject anatomy).

Note that the PC, AC and IH points are already defined.

Here are the MRI coordinates (mm) of the fiducials used to produce the above figure:

- NAS: x:95 y:213 z:114

- LPA: x:31 y:126 z:88

- RPA: x:164 y:128 z:89

- AC: x:96 y:137 z:132

- PC: x:97 y:112 z:132

- IH: x:95 y:103 z:180

The head and white matter segmentations provided in the NIRS sample data were computed with Brainvisa and should be imported and processed automatically.

You can check the registration between the MRI and the loaded meshes by right-clicking on each mesh element and going to "MRI registration -> Check MRI/Surface registration".

Import NIRS functional data

The functional data used in this tutorial was produced by the Brainsight acquisition software and is available in the data subfolder of the nirs sample folder. It contains the following files:

- fiducials.txt: the coordinates of the fiducials (nasion, left ear, right ear).

  The positions of the nasion, LPA and RPA should have been digitized at the same locations as the fiducials previously marked on the anatomical MRI. Brainstorm uses these points for the registration, so the consistency between the digitized and the marked fiducials is essential for good results.

- optodes.txt: the coordinates of the optodes (sources and detectors), in the same referential as fiducials.txt. Note: the actual referential does not matter here, as the registration is performed by Brainstorm afterwards.

- S01_Block_FO_LH_Run01.nirs: the NIRS data in a HOMer-based format (TODO: document format).

  Note: the fields SrcPos and DetPos will be overwritten to match the coordinates given in "optodes.txt".
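
Inside the .nirs file, channels are not stored by name. Assuming the usual HOMer layout, where SD.MeasList has one row per channel (source index, detector index, an unused column, wavelength index, all 1-based) and SD.Lambda lists the wavelengths in nm, the hypothetical helper below shows how such rows map onto the SXDYWLZZZ channel names that Brainstorm displays. It illustrates the naming only; it is not the Brainstorm importer, and reading the file itself is left out.

```python
def channel_names(meas_list, wavelengths_nm):
    """Build SXDYWLZZZ names from a HOMer-style measurement list.

    Assumes each row is [source, detector, unused, wavelength index], with
    1-based indices, and that wavelengths_nm lists the wavelengths in nm.
    """
    names = []
    for source, detector, _unused, wl_index in meas_list:
        wavelength = wavelengths_nm[int(wl_index) - 1]  # convert 1-based index
        names.append(f"S{int(source)}D{int(detector)}WL{int(wavelength)}")
    return names


if __name__ == "__main__":
    # Toy measurement list (not the real montage): two pairs at 690 nm and 830 nm.
    meas_list = [
        [1, 1, 1, 1],
        [1, 2, 1, 1],
        [1, 1, 1, 2],
        [1, 2, 1, 2],
    ]
    print(channel_names(meas_list, [690, 830]))
    # ['S1D1WL690', 'S1D2WL690', 'S1D1WL830', 'S1D2WL830']
```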

To import this data set in Brainstorm:

- Go to the "functional data" view of the protocol.

- Right-click on "Subject01 -> Review raw file".

- Select the file type "NIRS: Brainsight (.nirs)".

- Load the file "S01_Block_FO_LH_Run01.nirs" from the NIRS sample folder.

  Note: the import process assumes that the files optodes.txt and fiducials.txt are in the same folder as the .nirs data file.

Registration

In the same way as in the tutorial "Channel file / MEG-MRI coregistration", the registration between the MRI and the NIRS sensors is first based on three reference points: the nasion and the left and right ears. It can then be refined either with the full head shape of the subject or with a manual adjustment.

Step 1: Fiducials

- The initial registration is based on the three fiducial points that define the Subject Coordinate System (SCS): nasion, left ear, right ear. You marked these three points in the MRI viewer in the previous part.

- These same three points were also marked before the acquisition of the NIRS recordings. The person who recorded this subject digitized their positions with a tracking device (here Brainsight). The positions of these points are saved in the NIRS dataset (see fiducials.txt).

- When we bring the NIRS recordings into the Brainstorm database, we align them on the MRI using these fiducial points: we match the NAS/LPA/RPA points digitized with Brainsight with the ones we placed in the MRI viewer.

- This registration method gives approximate results. It can be good enough in some cases, but not always, because of the imprecision of the measurements: the tracking system is not always very precise, the points are not always easy to identify on the MRI slices, and the very definition of these points does not offer millimeter precision. All this combined, it is easy to end up with a registration error of 1cm or more.

- The quality of the source analysis we will perform later is highly dependent on the quality of the registration between the sensors and the anatomy. If we start with a 1cm error, this error will propagate everywhere in the analysis.
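
The fiducial-based alignment can be sketched as follows. The sketch assumes the CTF-style SCS convention described in Brainstorm's coordinate-system documentation (origin midway between LPA and RPA, X axis through the nasion, Y axis towards LPA in the fiducial plane, Z axis pointing up), and that digitizer and MRI coordinates are both in millimeters with the same handedness. Each set of three fiducials defines such a frame; expressing a digitized point in the digitizer frame and re-expanding it in the MRI frame gives its MRI position. It also shows why the digitizer's own referential does not matter: any rigid motion of the digitized points leaves their SCS coordinates unchanged. Function names are illustrative; this is not Brainstorm's code.

```python
import math


def sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def dot(a, b):
    return sum(a[i] * b[i] for i in range(3))


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def normalize(a):
    n = math.sqrt(dot(a, a))
    return [v / n for v in a]


def scs_frame(nas, lpa, rpa):
    """Origin and orthonormal axes (x, y, z) defined by NAS/LPA/RPA."""
    origin = [(lpa[i] + rpa[i]) / 2.0 for i in range(3)]  # midway between the ears
    x = normalize(sub(nas, origin))                        # through the nasion
    to_lpa = sub(lpa, origin)
    along_x = dot(to_lpa, x)
    # towards LPA, made orthogonal to x (Gram-Schmidt)
    y = normalize([to_lpa[i] - along_x * x[i] for i in range(3)])
    z = cross(x, y)                                        # completes the frame
    return origin, (x, y, z)


def to_scs(p, frame):
    origin, axes = frame
    d = sub(p, origin)
    return [dot(d, axis) for axis in axes]


def from_scs(q, frame):
    origin, axes = frame
    return [origin[i] + sum(q[k] * axes[k][i] for k in range(3)) for i in range(3)]


def register(points, dig_fids, mri_fids):
    """Map digitizer-space points into MRI space by matching the two SCS frames."""
    dig_frame = scs_frame(*dig_fids)
    mri_frame = scs_frame(*mri_fids)
    return [from_scs(to_scs(p, dig_frame), mri_frame) for p in points]


def fiducial_residuals(dig_fids, mri_fids):
    """Distance between each registered digitized fiducial and its MRI counterpart."""
    moved = register(dig_fids, dig_fids, mri_fids)
    return [math.dist(a, b) for a, b in zip(moved, mri_fids)]


if __name__ == "__main__":
    # MRI fiducials (mm) listed in "Import MRI and meshes": NAS, LPA, RPA.
    mri_fids = ([95, 213, 114], [31, 126, 88], [164, 128, 89])

    # Synthetic digitizer space: the same head rotated 30 degrees about z and shifted.
    c, s = math.cos(math.radians(30)), math.sin(math.radians(30))

    def rigid(p):
        return [c * p[0] - s * p[1] + 10, s * p[0] + c * p[1] - 25, p[2] + 40]

    dig_fids = tuple(rigid(p) for p in mri_fids)
    optode_mri = [120, 150, 170]  # made-up optode position, not from the dataset
    print(register([rigid(optode_mri)], dig_fids, mri_fids)[0])  # ~ [120, 150, 170]
    print(fiducial_residuals(dig_fids, mri_fids))                # ~ [0, 0, 0]
```

With real data the two fiducial sets never match exactly, and any mismatch between them shows up as nonzero residuals and as a displacement of the optodes.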

Step 2: Head shape

We don't have digitized head points for this dataset, so this step should be skipped.

Step 3: Manual adjustment

TODO?

To review this registration, right-click on "NIRS-BRS sensors (104) -> Display sensors -> NIRS".

To show the fiducials, which were stored as additional digitized head points, right-click on "NIRS-BRS sensors (104) -> Digitized head points -> View head points".

TODO: improve display of optode labels and symbols

For reference, the following figures show the positions of the fiducials [blue] (the inion and nose tip are extra positions), the sources [orange] and the detectors [green] as they were digitized by Brainsight:

Review Channel information

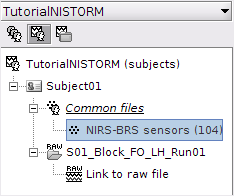

The resulting data organization should be:

This indicates that the data comes from the Brainsight system (BRS) and comprises 104 channels (96 NIRS channels + 8 auxiliary signals).

TODO: remove useless aux signals

To review the content of the channels, right-click on "NIRS-BRS sensors (104) -> Edit channel file".

- Channels whose names have the form SXDYWLZZZ represent NIRS measurements: the name is composed of the source/detector pair (source X, detector Y) and the wavelength value (ZZZ, in nm). Column Loc(1) contains the coordinates of the source, Loc(2) the coordinates of the associated detector.

- Each NIRS channel is assigned to the group "NIRS_WL690" or "NIRS_WL830" to specify its wavelength.

- Channels AUXY in group NIRS_AUX are data read from the nirs.aux structure of the input NIRS data file. They usually contain acquisition triggers (AUX1 here) and stimulation events (AUX2 here).
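
Because the channel names encode the source, detector and wavelength, they can be parsed to check the channel counts. A minimal sketch, assuming names follow exactly the S<source>D<detector>WL<wavelength> pattern described above; the function names are illustrative.

```python
import re
from collections import Counter

# Assumed naming pattern: S<source>D<detector>WL<wavelength in nm>, e.g. S1D12WL830.
NIRS_NAME = re.compile(r"^S(\d+)D(\d+)WL(\d+)$")


def parse_nirs_channel(name):
    """Return (source, detector, wavelength_nm), or None for names such as AUX1."""
    match = NIRS_NAME.match(name)
    if match is None:
        return None
    return tuple(int(g) for g in match.groups())


def summarize_channels(names):
    """Count NIRS channels per wavelength and distinct pairs; list the other channels."""
    per_wavelength = Counter()
    pairs = set()
    others = []
    for name in names:
        parsed = parse_nirs_channel(name)
        if parsed is None:
            others.append(name)
            continue
        source, detector, wavelength = parsed
        per_wavelength[wavelength] += 1
        pairs.add((source, detector))
    return dict(per_wavelength), len(pairs), others


if __name__ == "__main__":
    # Toy channel list, not the full 104-channel file.
    names = ["S1D1WL690", "S1D1WL830", "S2D3WL690", "S2D3WL830", "AUX1", "AUX2"]
    print(summarize_channels(names))
    # ({690: 2, 830: 2}, 2, ['AUX1', 'AUX2'])
```

For this dataset, assuming every source/detector pair is measured at both wavelengths, the 96 NIRS channels correspond to 48 pairs (96 / 2), and the remaining 8 of the 104 channels are the AUX signals.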

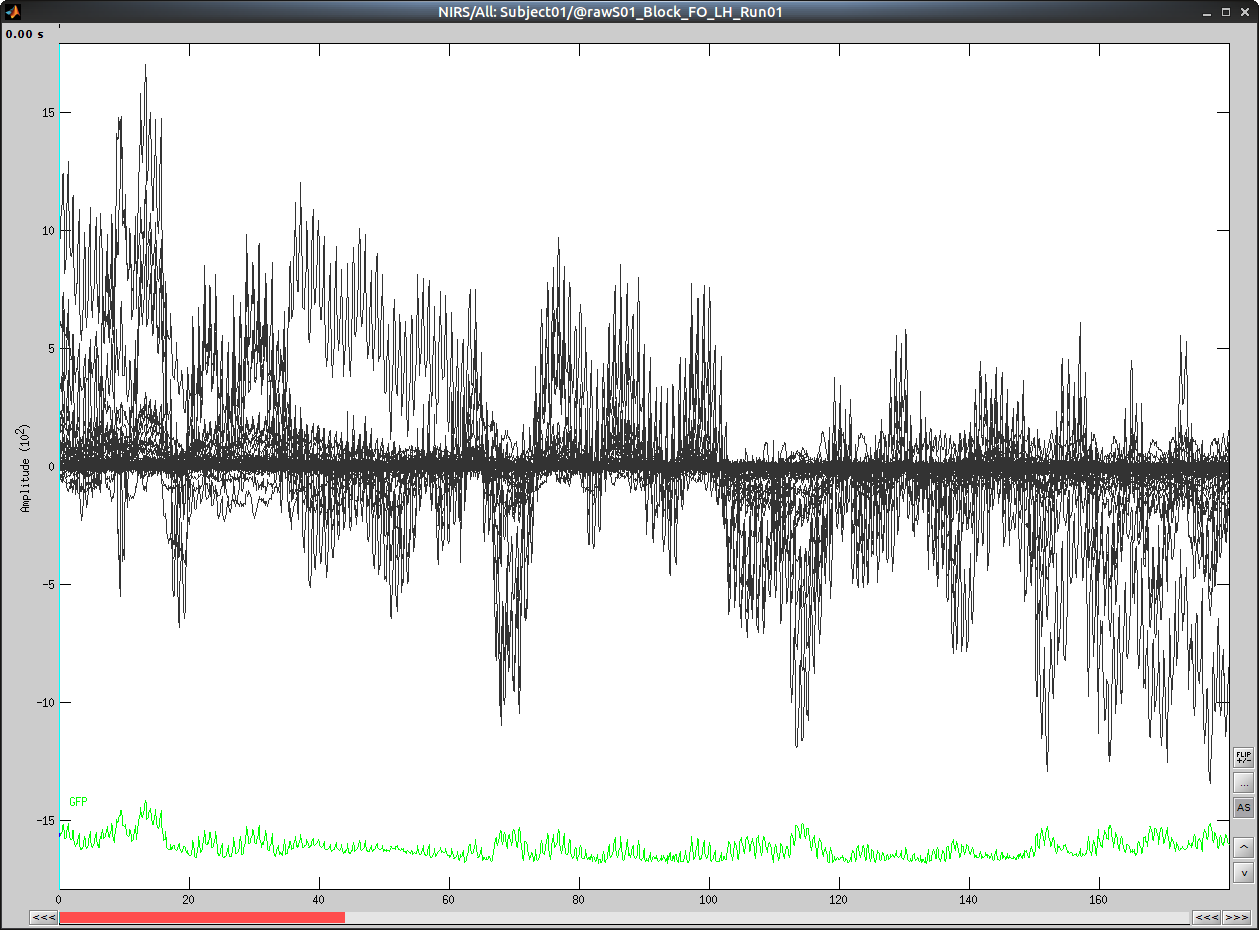

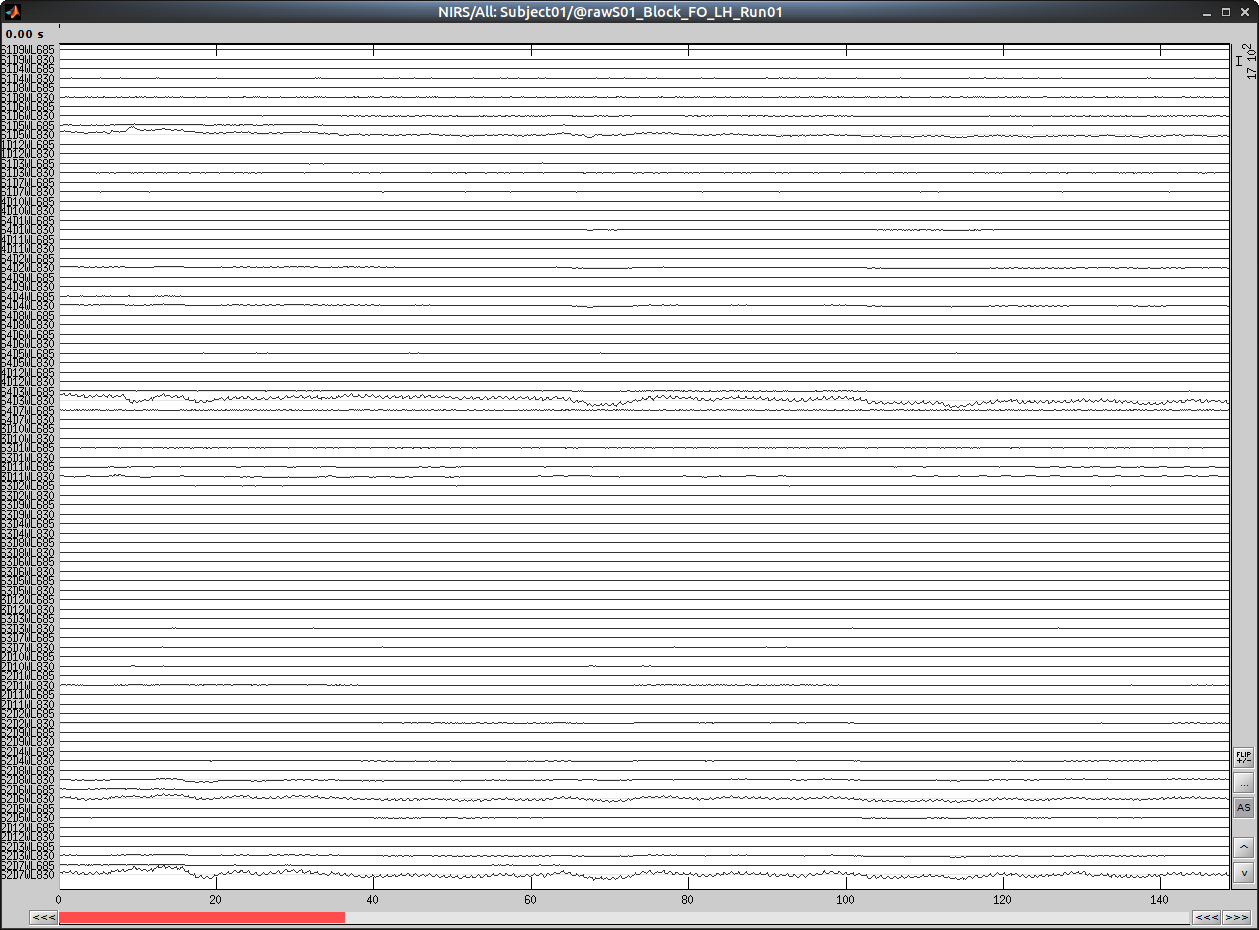

Visualize NIRS signals

Select "Subject01/S01_Block_FO_LH_Run01/Link to raw file -> NIRS -> Display time series"

It will open a new figure with superimposed channels

Which can also be viewed in butterfly mode

To view the auxiliary data, select "Subject01/S01_Block_FO_LH_Run01/Link to raw file -> NIRS_AUX -> Display time series"

For navigating in these views, refer to the tutorial "Review continuous recordings".
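
According to the channel description, AUX2 carries the stimulation events. One simple way to turn such a trace into onset times is to detect upward threshold crossings. The sketch below assumes a clean two-level trace and places the threshold halfway between its minimum and maximum; the function and the synthetic trace are illustrative, and the real AUX2 signal may need a different threshold.

```python
def rising_edges(signal, times, threshold=None):
    """Times at which `signal` crosses `threshold` upwards.

    By default the threshold is halfway between the signal's min and max,
    which suits a clean on/off trigger trace.
    """
    if threshold is None:
        threshold = (min(signal) + max(signal)) / 2.0
    onsets = []
    for i in range(1, len(signal)):
        if signal[i - 1] < threshold <= signal[i]:
            onsets.append(times[i])
    return onsets


if __name__ == "__main__":
    fs = 10.0  # Hz, sampling rate of this dataset
    # Synthetic trace: high during two 3-s "blocks" starting at 2 s and 8 s.
    times = [i / fs for i in range(120)]
    aux = [1.0 if (2.0 <= t < 5.0 or 8.0 <= t < 11.0) else 0.0 for t in times]
    print(rising_edges(aux, times))  # [2.0, 8.0]
```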

Montage selection

TODO

Within the NIRS channel type, the following channel groups are available:

- All Channels: gathers all NIRS channels

- NIRS_WL690: contains only the channels corresponding to the 690nm wavelength

- NIRS_WL830: contains only the channels corresponding to the 830nm wavelength

Add screenshot
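
The three montages listed above amount to filtering the channel list by its group. A minimal sketch, assuming the channels are available as (name, group) pairs like those shown in the channel file editor; the function name is hypothetical and this is not how Brainstorm builds its montages internally.

```python
def build_montages(channels):
    """Group (name, group) pairs into the NIRS montages listed above.

    Channels whose group does not start with "NIRS_WL" (e.g. NIRS_AUX) are left out.
    """
    nirs = [(name, group) for name, group in channels if group.startswith("NIRS_WL")]
    montages = {"All Channels": [name for name, _ in nirs]}
    for name, group in nirs:
        montages.setdefault(group, []).append(name)
    return montages


if __name__ == "__main__":
    # Toy excerpt of a channel table (name, group).
    channels = [
        ("S1D1WL690", "NIRS_WL690"),
        ("S1D1WL830", "NIRS_WL830"),
        ("S1D2WL690", "NIRS_WL690"),
        ("AUX1", "NIRS_AUX"),
    ]
    for label, members in build_montages(channels).items():
        print(label, members)
    # All Channels ['S1D1WL690', 'S1D1WL830', 'S1D2WL690']
    # NIRS_WL690 ['S1D1WL690', 'S1D2WL690']
    # NIRS_WL830 ['S1D1WL830']
```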