Flattening of orientation-unconstrained source models

Author: Marc Lalancette

This section details how Principal Component Analysis (PCA) is used in Brainstorm for dimension reduction, in particular for source models with unconstrained orientations.

Flattening of source maps using principal component analysis

Source models with unconstrained orientations have 3 components (x,y,z) at each source location. Further analyses are often more convenient after a "flat" source map is obtained, whereby the source time series are reduced to a single dimension per source location, as with orientation-constrained source maps. In many cases, e.g., spectral or connectivity analyses, the phase of the time series must be preserved. Reducing the 3 local dimensions to the norm of the 3 components introduces nonlinear distortions (the norm is always positive, so the polarity of the signal is lost) and must be avoided. Instead, a single equivalent source orientation can be estimated with Principal Component Analysis (PCA): projecting the 3 components onto this orientation yields the summary time series with maximum variance (equivalent to signal power).

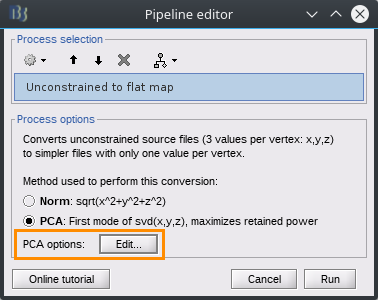
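The difference between the two reductions can be illustrated with a minimal NumPy sketch. This is not Brainstorm code: the function name `flatten_pca`, the simulated source and its orientation are assumptions made for illustration only. The orientation is estimated from the covariance of the 3 components and then applied to the unmodified data.

```python
import numpy as np

def flatten_pca(xyz):
    """Reduce a (3, n_times) source time series to one dimension.

    Hypothetical helper: returns the projection on the first principal
    axis and the estimated orientation (unit vector).
    """
    # Offsets are removed only to estimate the orientation.
    centered = xyz - xyz.mean(axis=1, keepdims=True)
    cov = centered @ centered.T / xyz.shape[1]
    # eigh returns eigenvalues in ascending order: the last column of
    # eigvecs is the direction of maximum variance.
    _, eigvecs = np.linalg.eigh(cov)
    orientation = eigvecs[:, -1]
    # Linear projection of the original data: phase is preserved.
    flat = orientation @ xyz
    return flat, orientation

# Simulated source: a 10 Hz oscillation along a fixed orientation, plus noise.
rng = np.random.default_rng(0)
t = np.arange(1000) / 1000.0
signal = np.sin(2 * np.pi * 10 * t)
true_ori = np.array([1.0, 2.0, 2.0]) / 3.0  # unit vector
xyz = np.outer(true_ori, signal) + 0.01 * rng.standard_normal((3, t.size))

flat, orientation = flatten_pca(xyz)
norm = np.linalg.norm(xyz, axis=0)  # nonlinear reduction, always >= 0

# The PCA series follows the oscillation up to an arbitrary sign,
# whereas the norm is a rectified version of it.
corr_flat = np.corrcoef(flat, signal)[0, 1]
corr_norm = np.corrcoef(norm, signal)[0, 1]
print("correlation with the simulated signal, PCA:", corr_flat)
print("correlation with the simulated signal, norm:", corr_norm)
```

The sign of `orientation`, and therefore of `flat`, depends on the eigenvector returned by the decomposition, which is the polarity issue discussed in the next section.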

Such flattening can be derived with the process "Sources > Unconstrained to flat map", which saves a flattened copy of source maps (full or kernel) in the Brainstorm data tree, or optionally directly through other processes such as connectivity, where the flat maps are saved to temporary files and are not saved in the tree.

An important detail: consistency of the signal polarity of the principal component

The polarity of the PCA component is arbitrary in the sense that it is driven by a mathematical factorisation of the original time series, not by physiology. In June 2023, we improved Brainstorm's approach to assigning a consistent polarity to the PCA time series, so that it best reflects the original data and, for scouts, the cortex geometry. Brainstorm features 2 options for that purpose, as explained in the next section.

Note that this aspect of polarity assignment is independent of the inherent ambiguity in the polarity of MEG/EEG source time series, as explained in the Scout tutorial. This means that even though Brainstorm's processes maximize the consistency of the polarity of source time series across scouts, trials and other dimensions of a study, the resulting polarity remains ambiguous.

In particular, signal polarity within the same anatomical scout might vary arbitrarily across participants. Group analyses may therefore require pooling rectified (polarity-free) versions of the source signals to maximize the detection of effects across participants.

PCA options

PCA is used in various processes within Brainstorm to determine a low-dimensional representation of multiple signals, such as source time series within a scout or sensors in a cluster. The following options apply to all uses of PCA.

PCA works best when all files from a subject are processed at once, to avoid inconsistent PCA signal polarity between trials (see previous section). All the epochs and conditions of a participant should therefore be PCA-processed together.

Note that the option panels of the processes that embed PCA feature an "Edit" button to specify further options specific to the PCA computation:

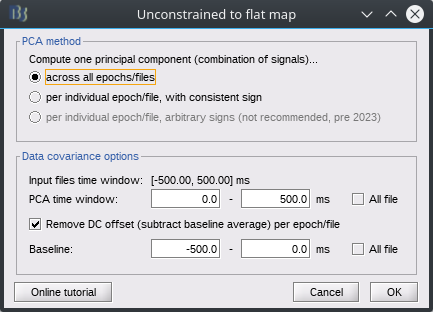

PCA method: affects how the files to be PCA-processed (epochs, conditions) are treated with regard to the polarity considerations mentioned above.

across all epochs/files: The PCA reduction is determined at once across all the files provided. This option is recommended by default because the PCA outcomes are simpler to interpret, especially for within-subject comparisons, e.g., differences across runs or conditions. Shared kernels can be saved after flattening; the process runs faster with kernel source files.

per individual epoch/file, with consistent sign: A different PCA component is computed for each file, but its polarity is corrected to best align with a reference component, obtained with the "across all epochs/files" method. This approach can be useful for single-trial analyses, while still allowing epochs to be combined. This option is slower and saves individual files.

per individual epoch/file, arbitrary signs: This legacy approach was the default PCA process before June 2023. It is no longer recommended because it does not manage the polarity consistency issues mentioned herein. It can still be used for single data files though.

Data covariance options: These options affect only the computation of the principal component coefficients ("loadings"), which are then applied to the unmodified data (without offset removal). These are the same options as when computing the Data Covariance for an adaptive inverse source model. These options are not available for the 3rd PCA method above ("per individual epoch/file, arbitrary signs"), so that the PCA outcomes prior to the June 2023 update can be reproduced (the full time window is used both for baseline and data).
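The idea behind the "per individual epoch/file, with consistent sign" method can be sketched as follows. This is an illustrative NumPy example, not Brainstorm's implementation: the function names are hypothetical, and the sign rule used here (flip a component when its dot product with the reference component is negative) is an assumption about what "best align" means.

```python
import numpy as np

def first_component(data):
    """First principal component (unit-norm loadings) of (n_signals, n_times) data."""
    centered = data - data.mean(axis=1, keepdims=True)
    _, eigvecs = np.linalg.eigh(centered @ centered.T)
    return eigvecs[:, -1]  # eigenvalues are ascending: last column is the largest

def per_epoch_components(epochs):
    """Compute one component per epoch, sign-aligned to a shared reference."""
    # Reference: the component obtained across all epochs concatenated in time.
    reference = first_component(np.hstack(epochs))
    aligned = []
    for epoch in epochs:
        comp = first_component(epoch)
        # Assumed rule: flip the sign if the component points away from the reference.
        if comp @ reference < 0:
            comp = -comp
        aligned.append(comp)
    return reference, aligned

# Simulated epochs sharing one dominant orientation (illustration only).
rng = np.random.default_rng(1)
ori = np.array([0.6, 0.0, 0.8])
epochs = [
    np.outer(ori, rng.standard_normal(200)) + 0.05 * rng.standard_normal((3, 200))
    for _ in range(5)
]

reference, aligned = per_epoch_components(epochs)
# Loadings are applied to the unmodified epochs to obtain the flat time series.
flat_epochs = [comp @ epoch for comp, epoch in zip(aligned, epochs)]
```

Without the sign correction, each epoch's component could come out with either polarity, and averaging the resulting time series across epochs could cancel the signal.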

Scaling

A PCA time series captures only a fraction of the multidimensional activity of the original signals. Its amplitude usually scales with the number of signals, e.g., the size of a scout or of a sensor cluster. As of June 2023, Brainstorm rescales the amplitude of scout PCA time series so that it is less dependent on the number of vertices in the scout, and is more comparable to the scale of the scout average.

The PCA component combines all N signals with coefficients whose vector norm is 1 (i.e. sum of squared coefs = 1). This contrasts with averaging, where each coefficient is 1/N, so that their vector norm is sqrt(N * 1/N^2) = sqrt(1/N). Thus scout PCA components are now multiplied by sqrt(1/N).
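The arithmetic above can be checked with a short Python sketch. It uses an idealized scout of N identical signals, an assumption chosen only because the unit-norm PCA weights are then known exactly (1/sqrt(N) each); real scouts will only approximate this.

```python
import math

def averaging_weight_norm(n):
    """Vector norm of the averaging weights (1/n repeated n times)."""
    weights = [1.0 / n] * n
    return math.sqrt(sum(w * w for w in weights))

# The norm of the averaging weights equals sqrt(1/N) for any N.
for n in (4, 25, 100):
    assert math.isclose(averaging_weight_norm(n), math.sqrt(1.0 / n))

# Idealized scout: N identical signals, all equal to `sample` at some time point.
n = 25
sample = 2.0
pca_weights = [1.0 / math.sqrt(n)] * n  # unit-norm weights
pca_value = sum(w * sample for w in pca_weights)  # grows as sqrt(N) * sample
rescaled = pca_value * math.sqrt(1.0 / n)  # rescaling described above
mean_value = sample  # the scout average of identical signals

print("unscaled PCA value:", pca_value)
print("rescaled PCA value:", rescaled, "scout average:", mean_value)
```

In this idealized case the rescaled PCA value matches the scout average, while the unscaled value grows with the square root of the scout size.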

History

Files resulting from a PCA decomposition store the "% of signal power kept" by the first principal component in the file History, which can be viewed through the tree context menu > File > View file history. (These messages were previously displayed in the command window.)

The file History also stores the list of files used to compute the decomposition for PCA "across all epochs/files".
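As a rough illustration of what a "% of signal power kept" value represents, the sketch below computes the share of total variance carried by the first principal component. The definition used here (largest eigenvalue divided by the sum of eigenvalues of the covariance) is an assumption; the exact computation in Brainstorm may differ, e.g. depending on the data covariance options. The function name and simulated data are hypothetical.

```python
import numpy as np

def percent_power_kept(data):
    """Percentage of total variance captured by the first principal component.

    data: (n_signals, n_times) array. Assumed definition, for illustration only.
    """
    centered = data - data.mean(axis=1, keepdims=True)
    eigvals = np.linalg.eigvalsh(centered @ centered.T)  # ascending order
    return 100.0 * eigvals[-1] / eigvals.sum()

# Three signals dominated by a common time course, plus a little noise.
rng = np.random.default_rng(2)
common = rng.standard_normal(500)
data = np.vstack([common, common, common]) + 0.1 * rng.standard_normal((3, 500))

kept = percent_power_kept(data)
print("percent of signal power kept:", kept)
```

A value close to 100 indicates that a single component summarizes the signals well; lower values mean that a substantial part of the activity is discarded by the reduction.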